Foundation models are at an inflection point: they are becoming general purpose technologies. New general purpose technologies create opportunities for companies to build products that transform how we work and live. Think of how generational companies like Microsoft, Google, and Apple shape our daily lives. This is why I’m starting Generational, a publication chronicling the most consequential AI trends and the companies driving them.

OpenAI’s Generative Pre-trained Transformer (GPT) models are a specific class of foundation models, which are intelligent, adaptable models comparable to humans. General Purpose Technologies (also GPTs) are technologies that affect entire economies and meaningfully alter how we live. For a technology to be considered a GPT, it has to meet three criteria: pervasiveness throughout the economy, the ability to spawn complementary innovations, and improvement over time.

To be pervasive, a technology must have a widespread impact on the economy: it is adopted across industries and sectors, and it influences the way businesses and individuals operate. The internet is a prime example of a pervasive technology. It has transformed industries from retail and finance to healthcare and education. It has also changed the way we communicate, access information, and consume media, permeating nearly every aspect of our lives.

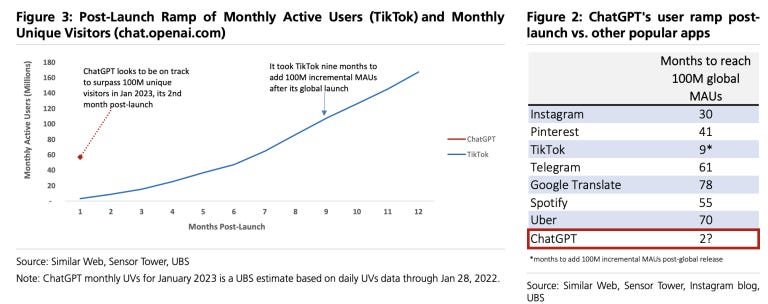

ChatGPT, the conversational interface powered by GPT models, became the fastest-growing consumer app of all time. In two months, the app grew to 100 million monthly active users (MAUs), reaching that mark by January 2023. TikTok, the previous record holder, took 9 months to get to 100 million MAUs. While we have yet to see if ChatGPT will reach the 1.2 billion MAUs that TikTok has today, the underlying GPT models will be more pervasive since they are available as an API for any developer to use. GPT-4 already powers the AI chat and search capabilities of Bing, which has 100 million daily active users.

Beyond becoming an end-consumer product, foundation models will become pervasive in our jobs too. Separate studies by OpenAI and by Goldman Sachs economists analyzed which tasks are automatable. Since jobs are bundles of tasks, researchers are able to estimate what percentage of each job is impacted. Both groups reached the same conclusion: there will be massive repercussions across the labor market. We’ll go through OpenAI’s study because it is more nuanced. First, it studies the impact of large language models (LLMs), like the ones behind ChatGPT, specifically, and not artificial intelligence as a vague, broad category. Second, it estimates the impact not only of LLMs but also of LLM-powered software. This includes software that can fetch web search results, like Bing AI, or generate images from text, like DALL-E. The findings are as astonishing as they are worrying.

- 80% of the U.S. workforce have at least 10% of their work tasks affected
- 20% of workers may see at least 50% of their tasks impacted
- With access to an LLM, 15% of all worker tasks could be completed significantly faster at the same level of quality
- With LLM-powered software, this share increases to 50% of all tasks.
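To make the "jobs are bundles of tasks" logic concrete, here is a minimal sketch of how task-level labels can roll up into job-level and workforce-level shares like the ones above. This is not the method from either study: the job names, task lists, and exposure labels are invented for illustration, and every job is weighted equally rather than by employment.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    exposed: bool  # hypothetical label: could an LLM speed this task up a lot?


def exposure_share(tasks):
    """Fraction of a job's tasks that are labeled as exposed."""
    if not tasks:
        return 0.0
    return sum(t.exposed for t in tasks) / len(tasks)


def share_of_jobs_above(jobs, threshold):
    """Fraction of jobs whose exposure share is at least `threshold`."""
    if not jobs:
        return 0.0
    hits = sum(exposure_share(tasks) >= threshold for tasks in jobs.values())
    return hits / len(jobs)


# Made-up jobs and labels, for illustration only.
jobs = {
    "paralegal": [
        Task("draft memos", True),
        Task("summarize filings", True),
        Task("attend hearings", False),
        Task("file documents", False),
    ],
    "line cook": [
        Task("prep ingredients", False),
        Task("cook orders", False),
    ],
}

for name, tasks in jobs.items():
    print(f"{name}: {exposure_share(tasks):.0%} of tasks exposed")
print(f"jobs with at least 10% of tasks exposed: {share_of_jobs_above(jobs, 0.10):.0%}")
```

A real estimate would need actual task inventories and a weight for how many people hold each job, which this toy version leaves out.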

To spawn complementary innovation, a technology should be able to catalyze the development of other innovative technologies, products, or services. The smartphone is an example of a technology that has done so. Its invention led to the development of mobile applications, mobile advertising, and various other services like ride-sharing and mobile payments.

The first iPhone was released in June 2007 and sold 6 million units in its first year. Around its first anniversary, Apple launched the second-generation iPhone along with the App Store. For the first time, users could experience native iPhone apps, and developers began creating novel experiences for the new interface. This led to a breakout quarter for Apple, which sold 7 million iPhones in 3 months. It was the inflection point for mobile apps, and it set the foundations for iconic products that we love today: Uber, DoorDash, and Google Maps.

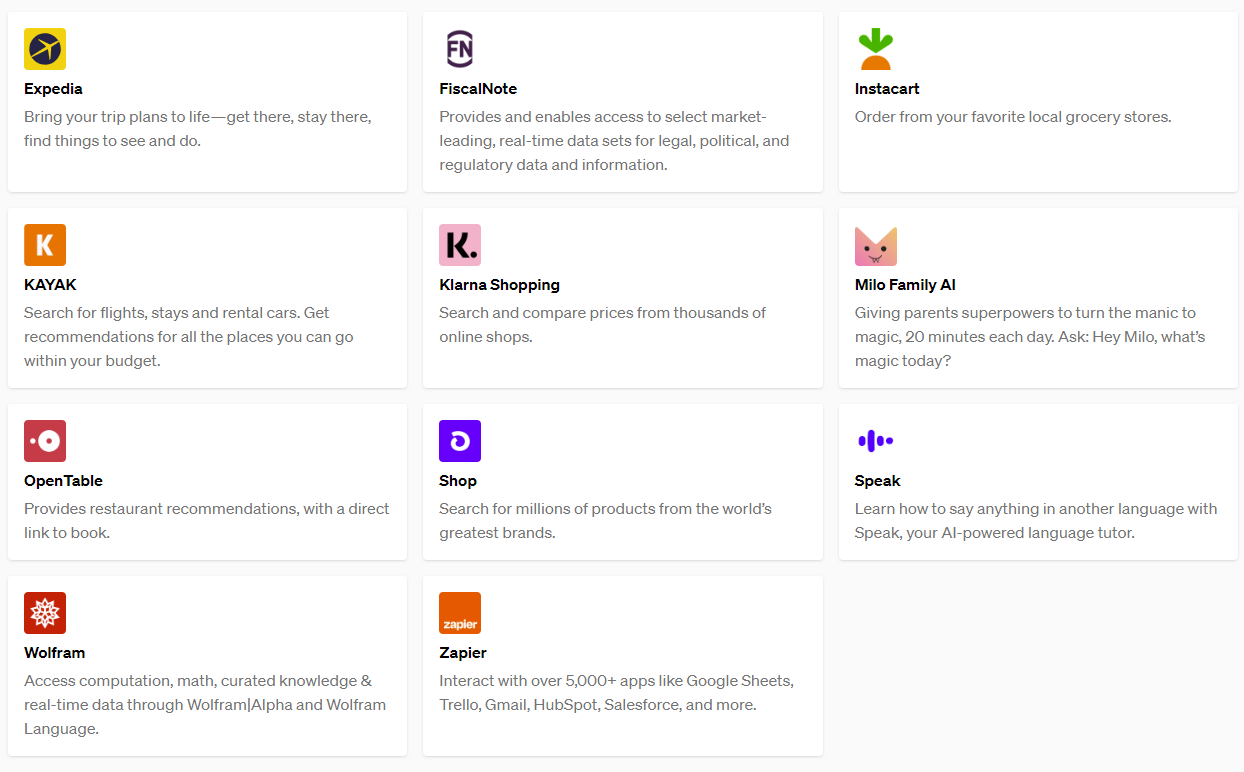

Two weeks ago, OpenAI launched an App Store-esque set of plugins that work with ChatGPT, at a time when it already had over 100M active users. The plugins connect ChatGPT to other applications, giving it more practical capabilities, from booking flights via Expedia and shopping via Instacart to performing accurate calculations via Wolfram. Developers can also build their own plugins, much like how iPhone developers can build iOS apps. Where the App Store analogy breaks down is momentum: ChatGPT starts with over 100M users, while the App Store launched after roughly 6 million iPhones had been sold.

The aftereffects won’t be limited to our personal lives. Soon, billions of working professionals will have access to copilots for their jobs. Businesses are already using Microsoft 365 Copilot, and Google is racing to release its own copilot for Workspace.

To improve over time, a technology should demonstrate a pattern of continuous improvement and evolution. This means that the technology should not only become more efficient and effective but also more accessible and affordable to a larger user base. Computing power is a good example. In line with Moore’s Law, the observation that the number of transistors on a microchip doubles approximately every two years, computers have become increasingly powerful, efficient, and affordable. This has enabled the development of more complex software and applications.

There are two components to improvement. One is performance. The other is the cost per unit of performance. Both are progressing at a rapid pace for foundation models. GPT-4 significantly outperforms GPT-3.5, which was released just over a year ago, and also outscores most human test takers on academic exams that people typically spend the first 20 years of their lives preparing for.

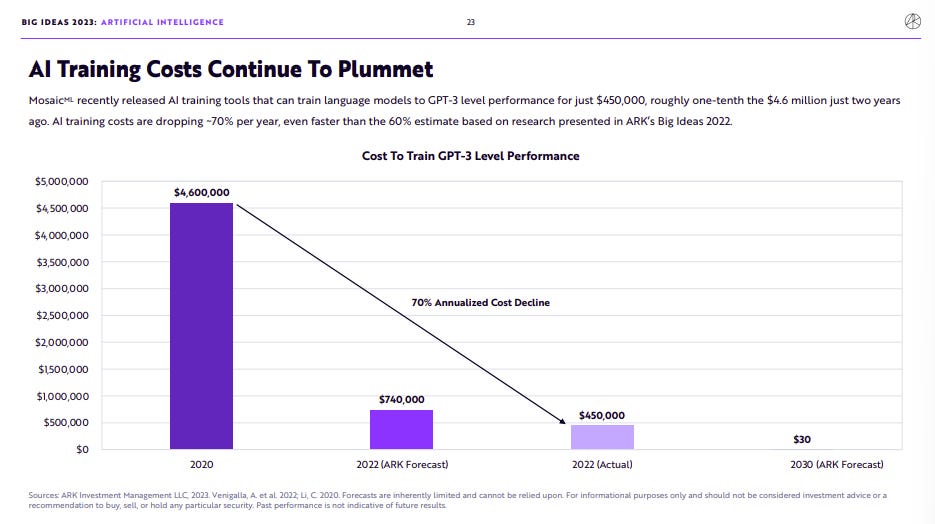

The cost of training and hosting your own model has gone down substantially because of algorithmic and hardware improvements. GPT-3 cost OpenAI $5 million to train in 2020. Today, a similar model can be trained for just 10% of the original cost.

While observing AI communities on Discord, I’ve noticed that beyond the advancements in algorithms and hardware, there is an even more significant factor: the passion of open source communities dedicated to making foundation models widely available to everyone. To reinforce that point, Databricks just open-sourced a ChatGPT clone, charmingly called Dolly, that can be trained on a $30 machine in just three hours.

GPTs foster generational companies

We’ve established that GPTs are GPTs. So what? General purpose technologies create an environment that allows generational companies to emerge:

- New massive markets: GPTs give rise to entirely new markets and applications, offering generational companies the opportunity to establish a strong competitive advantage and benefit from the first-mover effect. Google (Market cap: $1.3 trillion) significantly changed the way people access information by introducing a search engine that made online content easier to find. By indexing and organizing web content, Google created new opportunities for different forms of online advertising, from replacing top search results with paid ads to infuriating commercials in between videos.
- Complementary innovations: GPTs spur innovation across various industries, allowing generational companies to leverage their commitment to R&D and develop novel products and services that disrupt existing markets. Apple (Market cap: $2.5 trillion) has been at the forefront of creating iconic consumer products like the iPhone, iPad, and Apple Watch. These devices created an ecosystem of mobile applications and services that have shaped the way we communicate and live: ride hailing with Uber, food delivery with DoorDash, and digital nicotine with TikTok.
- Standardization: The widespread adoption of GPTs often leads to the formation of industry standards, enabling generational companies to shape these standards and ensure their offerings remain relevant and compatible with the broader ecosystem. Microsoft (Market cap: $2.2 trillion) shaped the world of operating systems and productivity tools with Windows and the ubiquitous Microsoft Office suite. The standardization of Windows enabled generations of developers to build higher-level applications and turned countless users into masters of Word’s arcane formatting mysteries, Excel’s labyrinthine formulas, and PowerPoint’s hypnotic slide transitions, ultimately revolutionizing how we work, procrastinate, and communicate in colorful pie charts.

Is this just all hype? There is certainly a lot of it and skepticism is healthy. But the progress of foundation models is real. GPT-powered products created in the past 3 months are more practically valuable than what crypto has produced over the past 3 years.

The flipside

I am an optimist and had never worried about technological progress, but foundation models, specifically GPT-4, have changed that. GPT-4 is accessible via an API, making it an infinitely replicable superhuman as long as the computer servers are up. GPT-4-powered software could speed up roughly 50% of our work tasks. It also scores better than 90% of test takers on academic exams that we have labored over for the first two decades of our lives. So for the past few weeks I’ve been gnawing on the question: are we fucked? I’ll untangle this in the next essay.

All this is to say that we are at the pivotal moment of GPTs becoming GPTs, with all the pluses and minuses that brings. This is why I’m writing Generational.

Curated reads:

Business: Keep your AI claims in check

Society: Pause Giant AI Experiments: An Open Letter

Academic: GPTs are GPTs: An early look at the labor market impact potential of large language models

Special thanks to Ashish Kakran for giving feedback on a draft version!