Briefings highlight generational AI scaleups that dominate their category and startups that are emerging category creators. This briefing is special since I was able to chat with the founder, Davit Buniatyan. Read on to learn about his funny journey into YC and get a sneak peek into where they are headed.

Activeloop is the company behind Deep Lake, a multi-modal vector database for AI. Deep Lake enables teams to store, manage, and analyze complex data like text, video, image, and point cloud data, providing a simple API for connecting data to machine learning models. Their product offers:

-

A tensor data format built for deep learning

-

An in-browser data visualization engine to view unstructured data

-

A Tensor Query Language (TQL) to query unstructured data

-

Integrations with the most popular MLOps tooling

Why Activeloop is a generational company

Davit’s journey began while pursuing his PhD at Princeton. He originally wanted to do computer vision research but instead found himself poring through high-resolution images of mice brains for neuroscience research. While it wasn’t the path he expected, looking at brains led him back to computer science. He was studying real neural networks (dendrites and axons) to learn how to create better artificial ones. After all, deep learning models were inspired by our brains.

While he enjoyed this line of research, he also wanted to give entrepreneurship a try. This led him to collaborate with two fellow doctoral students, Sergey and Jason, to work through product ideas. The trio eventually took a leave of absence from their PhD programs to join Y Combinator in 2018. To which Davit shared an amusing anecdote about their YC interview experience:

YC: So, what did you figure out that no else figured out?

The trio were caught off-guard. PhD students like them are indoctrinated into thinking that truly novel research, one that no else but them figured out, only happens with dissertations. For the most part, they are just reading and citing other people’s work. But Jason was quick on his feet and blurted out one of the best venture-backable one-liners.

Jason: We figured out how to run cryptomining and neural network inference at the same time on a GPU better than either being done separately.

Davit and Sergey quickly glanced at Jason, surprised & impressed by his answer. Jason surprised himself too. But the reason why they were all surprised was because they hadn’t actually figured it out.

YC: That sounds awesome. Show us the benchmarks.

They had to deliver. After sheepishly giving a middle school-like excuse of leaving behind their testing laptops at their Airbnb, they rushed back and frantically ran tests to validate what Jason said. Jason, on his part, blurted out a hunch. An intuition based on his research. Fortunately, that intuition proved to be true. They eventually compiled convincing results to share with the interviewers.

YC usually tells founders if they’re accepted on the same day as their interview. They’ll get a call if they’re accepted. An email if they’re rejected.

They got an email.

Fortunately it was just YC asking for a callback. They still had a chance. So they called back, eager to share the numbers.

Activeloop: We got the benchmarks!

YC: Oh. Nobody cares about the benchmarks. We just wanted to see how you guys [react]. You guys are in. Congrats.

Activeloop: tsu

After getting into YC, the trio eventually built a secure computing infrastructure distributed across cryptomining GPUs. Cryptominers were rewarded with tokens for providing compute. This was back in 2018, making them an OG web3 company. So how did Activeloop go from web3 to AI? By going back to the original inspiration and by learning from customers. The motivation for Activeloop goes back to Davit’s experience at Sebastian Seung’s Neuroscience Lab at Princeton. He helped manage the lab’s respository of 20,000 brain images, each 100,000 by 100,000 pixels large. One of Activeloop’s earliest customers wanted a model to search through 18 million text files. Not only did they manage to slash training time from two months to just one week, they’ve also inadvertently built one of the first large language models. Even before OpenAI. Another early customer asked Activeloop to build a data pipeline to extract insights from high-resolution aerial imagery accompanied with sensor data. They were constantly dealing with large, sometimes petabyte-scale, unstructured data.

There are two ways to manage large scale data sets for machine learning. First is by having a large enough compute power, the other is by compressing data into a streamlined format for faster processing. Their original idea of coordinating a network of incentivized GPU rigs is one way to get large compute. But they learned that passing large data across a distributed, heterogenous network is too slow. They eventually abandoned the crypto angle and went down the path of compressing data. This eventually became one of their key innovations: a tensorial data format optimized for deep learning.

At some point, Jason and Sergey decided to leave Activeloop to continue their PhD studies. The Y Combinator stint was just to scratch their entrepreneurial bug. Davit had to make a choice too: go back to complete his PhD or continue building the company. This is a common dilemma for PhD founders in Silicon Valley. The Databricks co-founders also found themselves in this situation. But they had the benefit of being able to shuffle workload across seven co-founders who were each at varying points of their academic professions. Davit & team didn’t. As Sergey and Jason went their own ways, Davit soldiered on. He gave up his PhD candidacy and stayed true to what he told his PhD advisor even before starting at Princeton: he wanted to build a company.

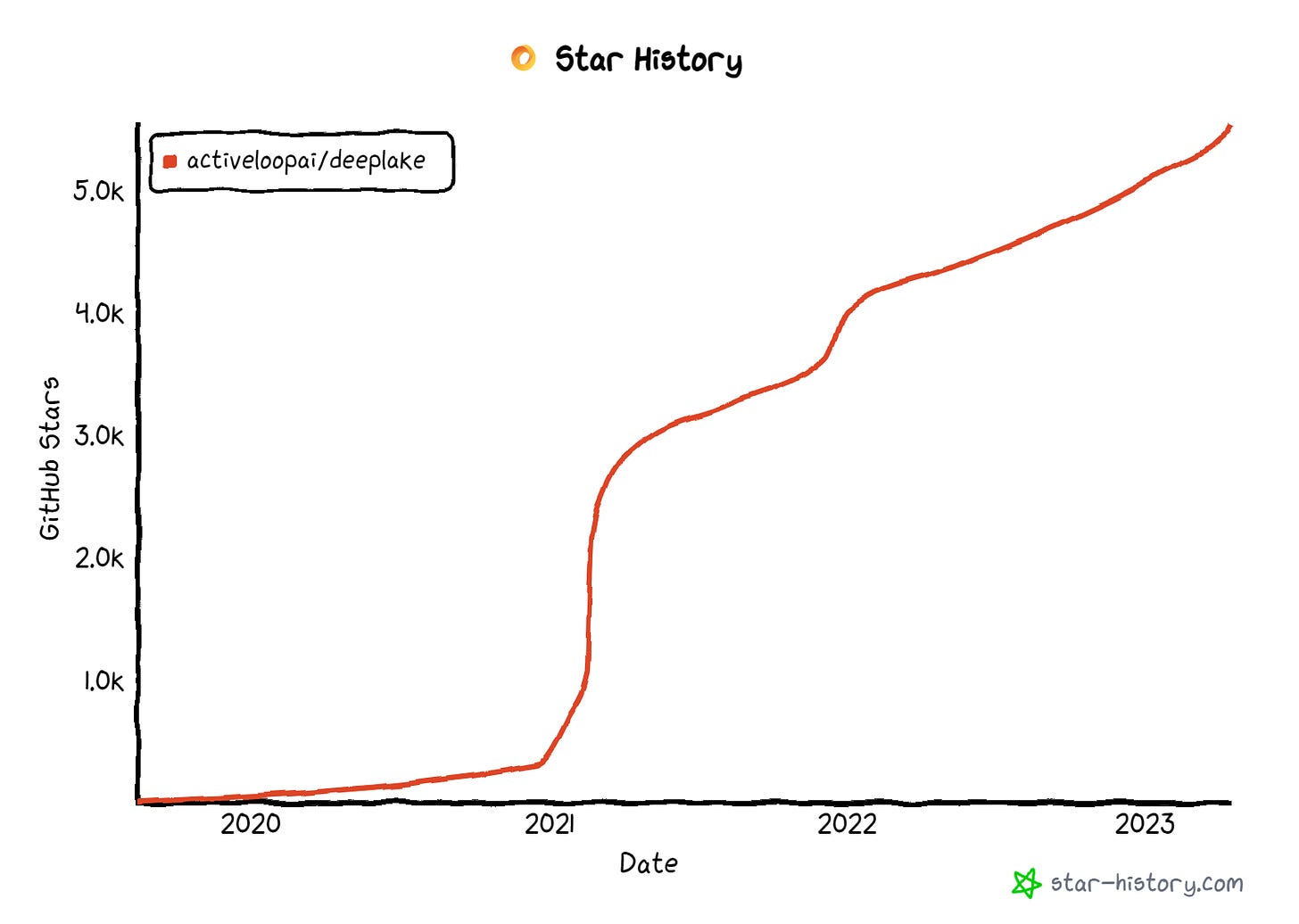

That was in 2021. Back then, he wasn’t sure if he made the right decision. But looking at the inflection point below, it seems like he did.

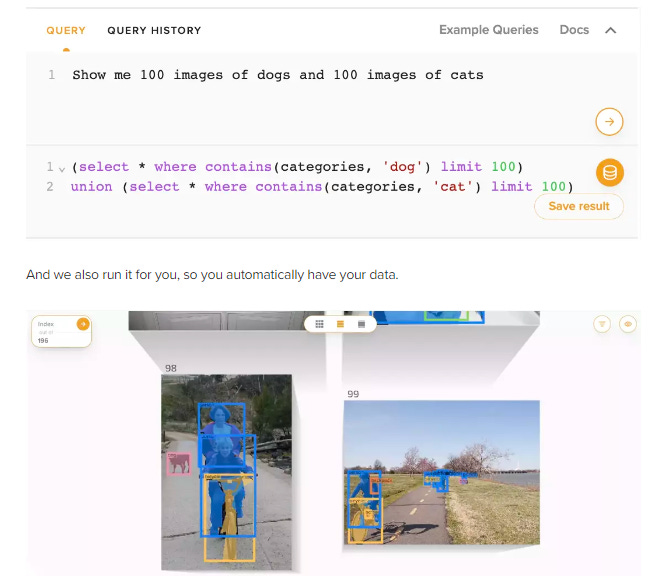

Foundation models have led to the popularity of vector databases by generating embeddings that capture complex patterns in data. These embeddings require specialized storage and search capabilities, which vector databases are designed to handle. Embeddings are represented by vectors (think a list of numbers) and is just a simpler form of tensors (think multi-dimensional array). Since Activeloop natively stores data in tensor format, it is also a vector datastore by default. The open-source community noticed this and started using Deep Lake with other popular AI projects like LangChain and LlamaIndex. But being a datastore isn’t the same as being a database for real-time inferencing. There are additional indexing and querying considerations. I wasn’t able to get into specifics of what Activeloop’s roadmap is, but Davit did share that there will be a massive upgrade on this front soon. The graphic below is a bit of a teaser. If you’re building AI apps and want a datastore, you should check out Activeloop.

Activeloop | Deep Lake Github | Davit’s LinkedIn | David’s Twitter

Activeloop is a multi-modal data lake for deep learning on unstructured data. It is not competing against the likes of Snowflake and other analytics BI tools. It competes with traditional data lake setups like vanilla AWS S3 and with vector databases like Chroma, Pinecone, and Weaviate. Since not much yet is known about Activeloop’s vector/tensor database plans, this section will focus on the data lake side. The status quo for storing unstructured data is a data lake or a distributed file system. There are two problems with this set up:

It is slow because the data structure is not optimized for deep learning. If engineers just want to build an app that pulls a user’s JPG profile picture from the data lake every time the profile page is loaded, a standard data lake set up works. But for deep learning, images are much larger and have relevant metadata attached to it. In the case of visual deep learning, labels, bounding boxes, point clouds, dimensions, etc. are all relevant data related to each image. Transferring all of these data into the CPU/GPU is slow. Activeloop built a way to store of all of these data into a (tensorial) format that streams it directly to the chips for fast loading, training, and inferencing.

It is cumbersome because there’s no robust data tooling for handling data tooling. Its not unheard of that a data scientist would have one window with a sample image open and another with the CSV of its label. Also, off-the-shelf datasets like those from Hugging Face are already clean with instructions on how to process it. That is valuable. But there are many real-world problems where AI teams have to figure out how to turn raw data into a clean data set. That is an iterative process. Activeloop provides a way to visualize large datasets, manage dataset versions like Git, and query them with a SQL-like Tensor Query Language (TQL). Instead of having to match image IDs from a CSV with the labels, users can instead query the Activeloop interface to visualize all the relevant data.

In short, Activeloop maintains the benefits of a vanilla data lake with one key difference: it stores complex data, such as images, videos, annotations, as well as tabular data, in the form of tensors and rapidly streams the data over the network to (a) Tensor Query Language, (b) in-browser visualization engine, or (c) deep learning frameworks without sacrificing GPU utilization.

Data lakes vs vector databases

Data lakes and vector databases serve different purposes and have different features, although both can be used in the context of deep learning and querying. Here’s an overview of their differences and features:

Data Lake:

-

Purpose: A data lake is a centralized storage repository designed to hold raw, unprocessed data in its native format. It can store structured, semi-structured, and unstructured data, and is used to support big data analytics, machine learning, and deep learning tasks.

-

Storage: Data lakes can store vast amounts of data from diverse sources, including text, images, videos, audio, and sensor data. They are typically built on top of distributed storage systems like Hadoop Distributed File System (HDFS) or cloud-based storage services like Amazon S3.

-

Processing: Data lakes support data preprocessing, transformation, and analysis using various tools and frameworks such as Apache Spark, Hadoop, or TensorFlow. They can accommodate complex data pipelines and workflows to prepare data for machine learning and deep learning tasks.

Vector Database:

-

Purpose: A vector database is a specialized database designed to store and efficiently search high-dimensional vectors, typically generated as a result of feature extraction from data using deep learning or other machine learning techniques. Its primary purpose is to enable fast and accurate similarity search and retrieval based on vector representations.

-

Storage: Vector databases store feature vectors, which are compact representations of the original data, instead of the raw data itself. This enables efficient storage and search but requires preprocessing and feature extraction to generate the vectors.

-

Processing: Vector databases focus on indexing and searching vectors rather than data preprocessing or transformation. They employ specialized data structures and algorithms like k-d trees or approximate nearest neighbor (ANN) search to enable fast and accurate similarity search.

In summary, a data lake is designed for storing and processing diverse raw data and supports data preprocessing and transformation for deep learning tasks, while a vector database is specialized in storing and efficiently searching high-dimensional vectors generated. Activeloop’s Deep Lake is designed to combine the best of both, enabling companies to build their own data flywheels while also powering their AI apps in production.

Special thanks to Specter for supporting Generational’s startup series. When I was still a venture investor, I have tried different systems and even attempted to build my own to help me find the best founders and companies to partner with. I’d say that Specter’s the best. Check them out.