Today’s top AI Highlights:

- Cohere releases a new scalable LLM built for business
- Llama 2-level mixture-of-experts model trained for less than $0.1 million
- OpenAI releases new features for its fine-tuning API and expands Custom Models
- Apple plans to venture into personal robotics after abandoning its EV project
- Google plans to start charging for generative AI in Search

& so much more!

Read time: 3 mins

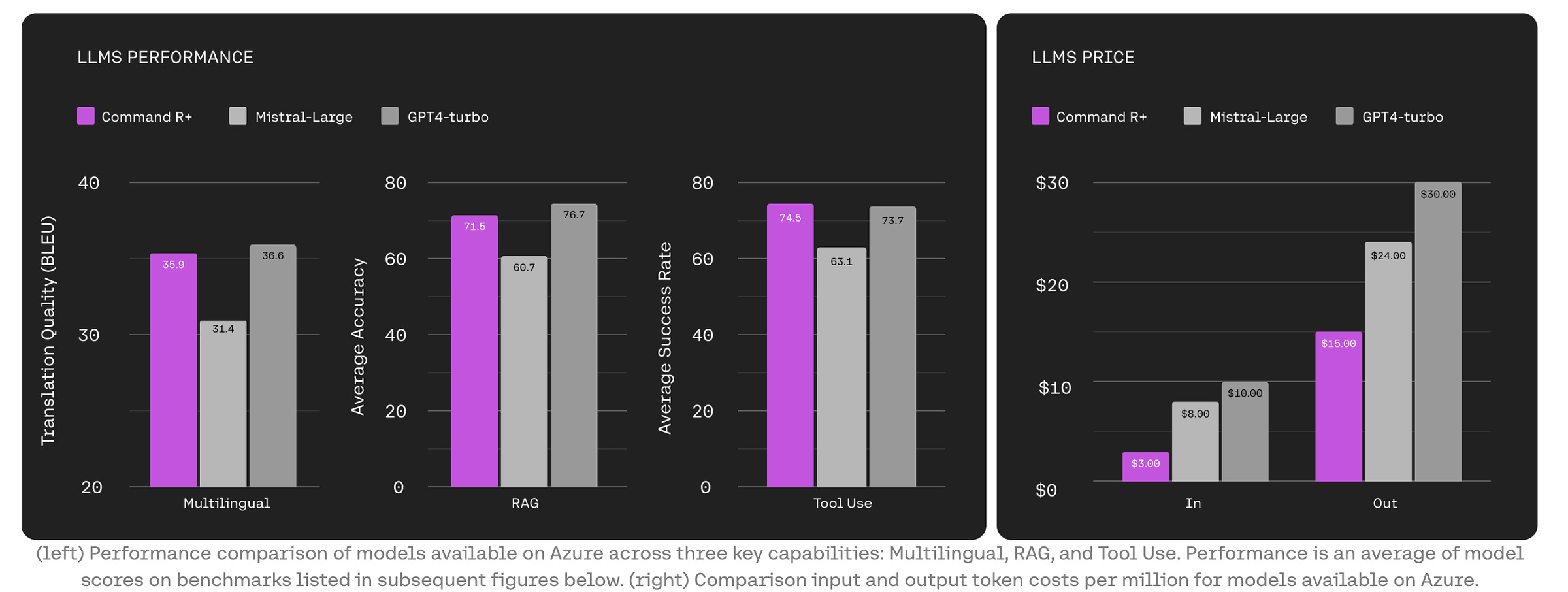

Cohere has released Command R+, a new model built specifically for real-world enterprise use cases. Command R+ is optimized for advanced retrieval-augmented generation (RAG) to improve response accuracy, and it provides in-line citations that help mitigate hallucinations. The model outperforms similar models in the scalable market category and is competitive with more expensive models like Mistral Large and GPT-4 Turbo.

Key Highlights:

- Context Window: Comes with a substantial 128k-token context window, enabling it to handle long conversations and large data inputs.
- Tool Use: Supports advanced tool use with LangChain to automate complex workflows. Multi-step tool use lets the model combine multiple tools over multiple steps to automate difficult tasks, and it can even correct itself when a tool call fails.
- Multilingual: Supports 10 key languages: English, French, Spanish, Italian, German, Portuguese, Japanese, Korean, Arabic, and Chinese.
- Efficiency in Non-English Texts: Its specialized tokenizer compresses non-English text more efficiently than other models’ tokenizers, particularly for non-Latin scripts, reducing tokenization costs by up to 57%.
- Performance: The model performs competitively against much more expensive models like Claude 3 Sonnet, Mistral Large, and GPT-4 Turbo in translation, accuracy, tool use, citation quality, and function calling.
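The Tool Use bullet describes multi-step tool use in which the model recovers from a failed call. The Python sketch below illustrates that general loop under loudly stated assumptions: it does not call Cohere's API or LangChain, `scripted_planner` is a hypothetical hard-coded stand-in for the model's decisions, and `get_price` and `to_eur` are made-up tools. What it shows is the structure: execute a call, return errors as data instead of crashing, and let the planner see the failure and retry before chaining the next tool.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ToolCall:
    name: str
    args: dict[str, Any]


@dataclass
class Step:
    call: ToolCall
    ok: bool
    output: Any


def execute(tools: dict[str, Callable[..., Any]], call: ToolCall) -> Step:
    """Run one tool call, reporting any exception as an error message."""
    try:
        return Step(call, True, tools[call.name](**call.args))
    except Exception as exc:
        return Step(call, False, f"{type(exc).__name__}: {exc}")


def run_agent(
    plan_next: Callable[[list[Step]], Optional[ToolCall]],
    tools: dict[str, Callable[..., Any]],
    max_steps: int = 5,
) -> list[Step]:
    """Ask the planner for the next call, execute it, and feed the history back."""
    history: list[Step] = []
    for _ in range(max_steps):
        call = plan_next(history)
        if call is None:  # the planner decided the task is finished
            break
        history.append(execute(tools, call))
    return history


# --- Hypothetical tools and a scripted planner standing in for the LLM ---
PRICES = {"ACME": 120.0}


def get_price(ticker: str) -> float:
    return PRICES[ticker]  # raises KeyError for unknown or lowercase tickers


def to_eur(usd: float, rate: float = 0.9) -> float:
    return round(usd * rate, 2)


TOOLS = {"get_price": get_price, "to_eur": to_eur}


def scripted_planner(history: list[Step]) -> Optional[ToolCall]:
    if not history:
        return ToolCall("get_price", {"ticker": "acme"})  # deliberately wrong case
    last = history[-1]
    if last.call.name == "get_price" and not last.ok:
        # Self-correction: retry with a fixed argument after seeing the error.
        return ToolCall("get_price", {"ticker": last.call.args["ticker"].upper()})
    if last.call.name == "get_price":
        return ToolCall("to_eur", {"usd": last.output})  # chain a second tool
    return None


steps = run_agent(scripted_planner, TOOLS)
for s in steps:
    print(s.call.name, s.call.args, "ok" if s.ok else "failed", s.output)
```

In a real deployment the planner's role would be played by the model itself, which would receive each step's output, including error messages, in its context before proposing the next call.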

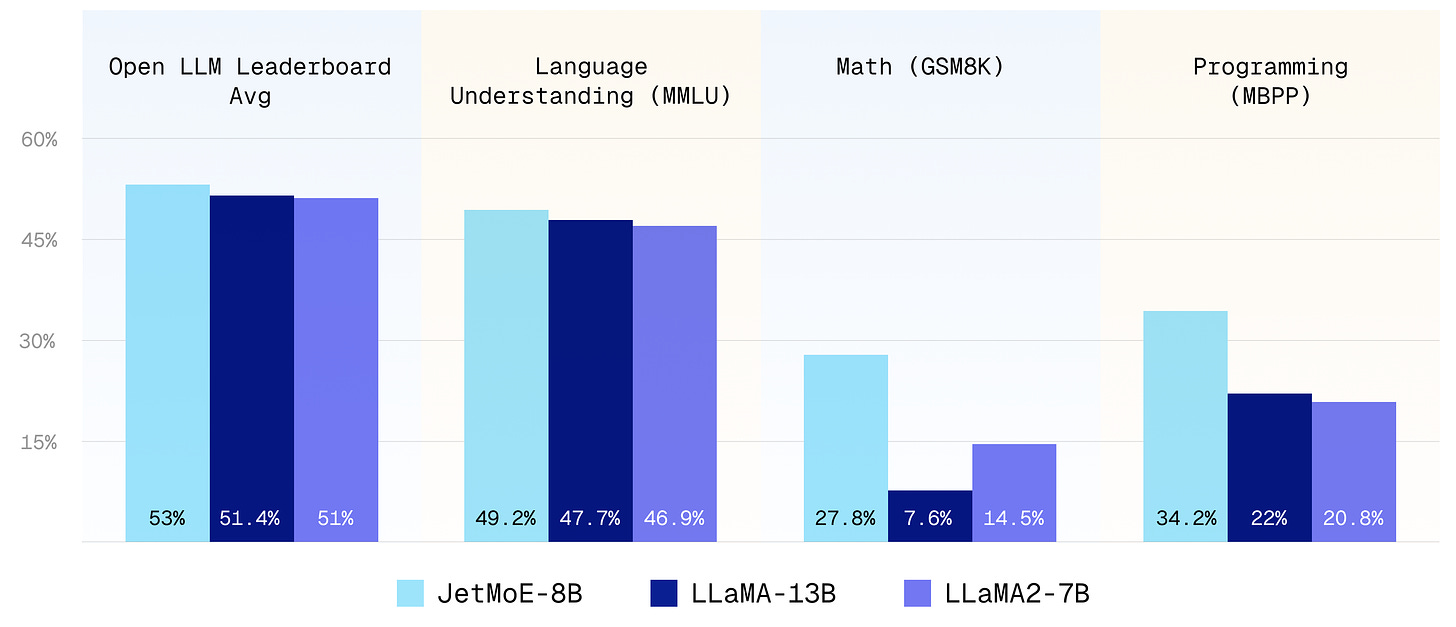

Researchers at MIT, Princeton, Lepton AI, and other organizations have released JetMoE-8B, an 8-billion-parameter model trained for less than $0.1 million that outperforms Meta’s Llama 2-7B, a model backed by multi-billion-dollar training resources. Since it achieves this on a fraction of the budget typically expected for training LLMs, the model stands out for its performance as well as its cost-efficiency.

Key Highlights:

- Cost-Effective Training: JetMoE-8B was trained on a 96×H100 GPU cluster for two weeks, in stark contrast to the hefty budgets and thousands of GPUs used to train similar LLMs.
- Architecture: The model uses a sparsely activated architecture with 24 blocks, each containing two types of MoE layers. It has 8 billion parameters, of which only 2.2 billion are active during inference.
- Training Data: It was trained on 1.25 trillion tokens from public datasets, and the code is open source. It can be fine-tuned on a very limited compute budget (e.g., a consumer-grade GPU).
- Performance: It outperforms Google’s Gemma-2B, DeepSeekMoE-16B, and Meta’s Llama 2 7B and 13B models across various benchmarks, including MMLU and GSM8K.
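The Architecture bullet says only 2.2 billion of the 8 billion parameters are active at inference. A minimal sketch of why sparse activation works is generic top-k gating, shown below in plain Python. This is not JetMoE's code: the expert count (8), `top_k=2`, the tiny random linear "experts", and the untrained router are illustrative assumptions, and JetMoE's two kinds of MoE layers are collapsed into one. The key property is that each token runs through only `top_k` experts, so most expert weights sit idle for any given token.

```python
import math
import random


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class TopKMoE:
    """Toy sparsely activated layer: a router picks top_k of n_experts per token."""

    def __init__(self, n_experts: int = 8, top_k: int = 2, dim: int = 4, seed: int = 0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one (untrained, random) scoring vector per expert.
        self.router = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_experts)]
        # Each expert is a random dim x dim linear map (a stand-in for an MLP).
        self.experts = [
            [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(dim)]
            for _ in range(n_experts)
        ]
        self.calls = [0] * n_experts  # how often each expert actually ran

    def forward(self, x: list[float]) -> tuple[list[float], list[int]]:
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in self.router]
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[: self.top_k]
        gates = softmax([logits[i] for i in top])  # renormalize over chosen experts
        out = [0.0] * len(x)
        for gate, i in zip(gates, top):
            self.calls[i] += 1  # only the selected experts do any work
            y = [sum(a * b for a, b in zip(row, x)) for row in self.experts[i]]
            out = [o + gate * yi for o, yi in zip(out, y)]
        return out, top


layer = TopKMoE(n_experts=8, top_k=2)
rng = random.Random(42)
n_tokens = 100
for _ in range(n_tokens):
    token = [rng.gauss(0.0, 1.0) for _ in range(4)]
    out, chosen = layer.forward(token)

print("calls per expert:", layer.calls)
print("expert evaluations:", sum(layer.calls), "vs", 8 * n_tokens, "for a dense equivalent")
print(f"active share of JetMoE-8B parameters: {2.2 / 8:.1%}")
```

With 2 of 8 experts per token, the expert share is 25%; the reported 27.5% (2.2B of 8B) is presumably higher because some parameters, such as embeddings, are used by every token.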

OpenAI has rolled out a set of new features for its fine-tuning API, which launched for GPT-3.5 in August 2023, and has expanded its Custom Models program. These updates give developers more tools to tailor model behavior, improve accuracy, and optimize costs, making the technology more accessible and efficient.

Key Highlights:

- New API features:
  - Epoch-based Checkpoint Creation: automatically generates a full model checkpoint at each training epoch, reducing the need for retraining, especially when a model overfits.
  - Comparative Playground: a side-by-side interface for comparing the outputs of multiple models or fine-tune snapshots on a single prompt.
  - Third-party Integration: starting with Weights and Biases, letting developers share detailed fine-tuning data with the rest of their technology stack.
  - Comprehensive Validation Metrics: loss and accuracy computed over the entire validation dataset, giving clearer insight into model performance.
  - Hyperparameter Configuration from the Dashboard: makes it easier to adjust hyperparameters and optimize model training.
  - Fine-tuning Dashboard: new functionality for adjusting hyperparameters, reviewing detailed metrics, and rerunning jobs from previous configurations, giving you more control and oversight.
- Assisted Fine-Tuning in Custom Models: OpenAI’s technical teams help you apply advanced hyperparameters and parameter-efficient fine-tuning to your custom models.
- Custom-Trained Model Development: For organizations with extensive proprietary data that need bespoke AI solutions, OpenAI will train a model from scratch with domain-specific knowledge.
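One way per-epoch checkpoints help with overfitting is that you can fall back to the epoch with the best validation loss instead of retraining. The Python sketch below shows that selection logic only. The `Checkpoint` records, their names, and the loss values are made up for illustration and do not reflect the format of OpenAI's API responses; in practice you would fill them from whatever metrics your fine-tuning job reports.

```python
from dataclasses import dataclass


@dataclass
class Checkpoint:
    epoch: int
    name: str  # hypothetical label, not a real OpenAI checkpoint ID
    train_loss: float
    valid_loss: float


def pick_checkpoint(checkpoints: list[Checkpoint]) -> tuple[Checkpoint, bool]:
    """Pick the epoch with the lowest validation loss and flag likely overfitting.

    Overfitting is flagged when a later epoch has lower training loss but
    higher validation loss than the chosen checkpoint.
    """
    best = min(checkpoints, key=lambda c: c.valid_loss)
    overfit = any(
        c.epoch > best.epoch
        and c.train_loss < best.train_loss
        and c.valid_loss > best.valid_loss
        for c in checkpoints
    )
    return best, overfit


# Made-up metrics for a three-epoch run, purely for illustration.
run = [
    Checkpoint(1, "ckpt-epoch-1", train_loss=1.20, valid_loss=1.25),
    Checkpoint(2, "ckpt-epoch-2", train_loss=0.80, valid_loss=0.95),
    Checkpoint(3, "ckpt-epoch-3", train_loss=0.45, valid_loss=1.05),
]
best, overfit = pick_checkpoint(run)
print(best.name, "overfit after best epoch:", overfit)
# prints: ckpt-epoch-2 overfit after best epoch: True
```

Here epoch 3 keeps lowering training loss while validation loss rises, which is the classic overfitting signal; keeping the epoch-2 checkpoint avoids a second training run.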

After terminating its autonomous electric car project, “Project Titan,” Apple is exploring personal robotics as a new area for innovation and revenue generation.

The robotics projects are at a very early stage, but Apple has started work on a mobile robot that can autonomously follow users around their homes. It has also developed a tabletop device that uses a robot to move a screen, mimicking head movements and focusing on a single person within a group to improve video calls.

Because these robots need to move on their own, the company is also researching navigation algorithms. (Source)

Google is considering charging users for search results powered by generative AI. If you haven’t tried it yet, Google’s generative AI in Search gives a single answer to your query (much like ChatGPT) along with its sources, followed by a list of websites, saving you the hassle of going through multiple search results. You can also ask follow-up questions, just as in ChatGPT.

ChatGPT-style search is significantly more expensive than traditional keyword search, costing Google several billion dollars extra. Although search ads generated $175 billion for Google last year, that revenue may not be enough to cover the higher costs of AI-powered search. (Source)

😍 Enjoying it so far? Share it with your friends!

- Autotab: A digital tool that operates your computer so you can offload repetitive tasks to AI for just $1/hour. Show it how to do something once, and it can repeat the process whenever you need, handling tasks with thousands of steps around the clock without breaks.
- IKI A.I.: An LLM-powered digital library. It’s more than a chat interface for your data: it’s a comprehensive AI-driven knowledge management system that lets you upload, organize, annotate, and share data collections. With automatic tagging, built-in web search, and the ability to chat over your data collections, IKI AI streamlines information discovery and research.
- Yours: An AI video personalization platform for personalizing video content at scale. Record one video, and it automatically generates countless customized versions for your audience, each tailored with unique voice variables.
- Metaforms AI: An AI successor to Typeform. Build feedback, survey, and user research forms with generative AI to collect life-changing insights about your users. It boosts user engagement through AI-driven acknowledgments and contextual framing, achieving a 30% higher completion rate.

- No one wants to watch most of the video generated by humans, let alone AI. The killer AI use-case for video is video editing or clip generation for big Hollywood studios.

  More foundational models like Sora are required to satisfy that killer use case.

  It would be far cooler if all these video-generation start-ups moved to robotics. ~ Bindu Reddy

- After a week in SF with Waymo and Tesla FSD as the two main modes of transport, it’s clear to me that self-driving is the future. full stop. ~ Linus ●ᴗ● Oak trunk

That’s all for today! See you tomorrow with more such AI-filled content.

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE. Your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!