Today’s top AI Highlights:

- Snowflake releases a 480B model combining dense and MoE architectures
- UK’s Competition Authority probes Microsoft, OpenAI, Mistral AI, Amazon, and Anthropic
- Coca-Cola partners with Microsoft for generative AI in the beverage industry
- Anthropic releases a study on identifying “sleeper agents” in AI models

& so much more!

Read time: 3 mins

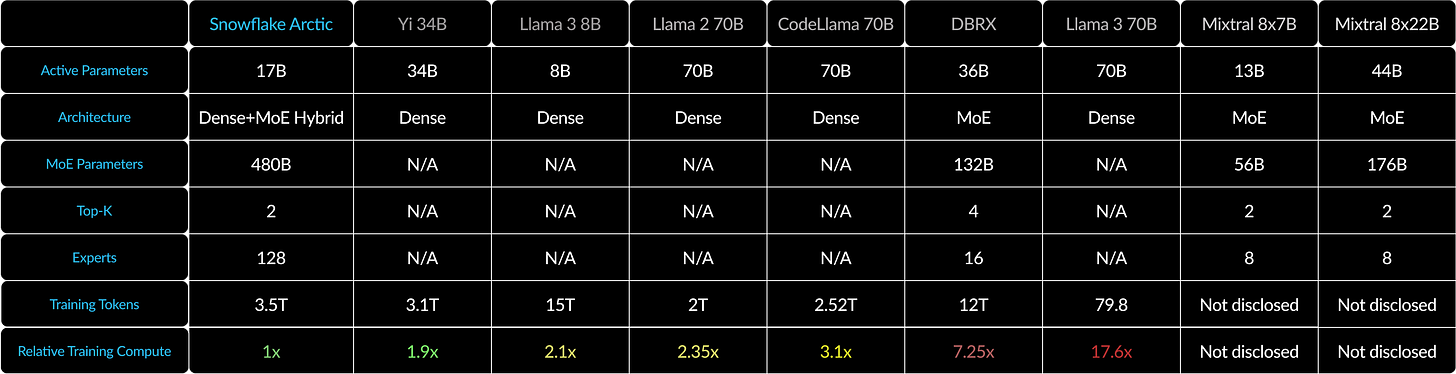

Building and using powerful AI models for businesses has always been expensive and resource-intensive, costing millions of dollars. Snowflake has developed its own model, “Snowflake Arctic,” with 480B parameters, trained on a budget of just $2 million! Arctic is designed for tasks that businesses care about, like generating code, writing SQL queries, and following complex instructions. It uses a hybrid of a Mixture of Experts (MoE) and a dense model to get the best of both architectures, which makes it far more affordable to train and use than comparable models.

Key Highlights:

- Architecture: Arctic combines a 10B dense transformer as the foundation with a residual 128×3.66B MoE (128 experts with 3.66 billion parameters each), for roughly 480B total parameters, of which only 17B are active for any given input.
- Why MoE?: The MoE architecture activates only the necessary “expert” models for a given input, making it much more efficient than models that use all their parameters all the time.
- Why Dense?: The dense component gives it a strong foundation for general language understanding and reasoning.
- Compute Efficiency: Arctic requires significantly less computing power to train than other LLMs with similar performance. For example, it uses less than half the compute budget of Llama 2 70B while achieving comparable or even better performance on enterprise tasks.
- Training: Arctic was trained on 3.5T tokens in three phases: an initial phase for general language skills, followed by later phases for enterprise-specific skills.
- Performance: Compared with Llama 2 and 3 and MoE models like DBRX and Mixtral, Arctic performs strongly on enterprise tasks, even outperforming Llama 2 70B and Llama 3 8B. However, its MMLU score is lower, which reflects its focus on training efficiency and a smaller compute budget.
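The parameter figures above can be sanity-checked with a little arithmetic. Here is a minimal sketch: the 10B, 128, and 3.66B values come from the highlights, while the top-2 routing (two experts active per input) is an assumption that is not stated above, chosen because it lands close to the quoted 17B active parameters. The function names and the toy router scores are hypothetical and only illustrate the idea of picking a few experts out of many.

```python
DENSE_B = 10.0      # dense transformer parameters, in billions (from the text)
NUM_EXPERTS = 128   # number of MoE experts (from the text)
EXPERT_B = 3.66     # parameters per expert, in billions (from the text)
TOP_K = 2           # ASSUMPTION: experts activated per input; not stated above


def total_params_b() -> float:
    """Total parameters: the dense part plus every expert."""
    return DENSE_B + NUM_EXPERTS * EXPERT_B


def active_params_b(top_k: int = TOP_K) -> float:
    """Active parameters: the dense part plus only the selected experts."""
    return DENSE_B + top_k * EXPERT_B


def pick_experts(router_scores: list[float], top_k: int = TOP_K) -> list[int]:
    """Toy router: return the indices of the top_k highest-scoring experts."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    return sorted(ranked[:top_k])


if __name__ == "__main__":
    print(f"total  ~ {total_params_b():.2f}B")   # close to the quoted 480B
    print(f"active ~ {active_params_b():.2f}B")  # close to the quoted 17B
    print(pick_experts([0.1, 0.7, 0.05, 0.9]))   # experts 1 and 3 score highest
```

Under that assumption, the total comes to about 478B and the active count to about 17.3B, which matches the rounded numbers in the announcement.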

Big tech players like Microsoft and Amazon have significantly ramped up their partnerships with and investments in AI startups. Microsoft has a major interest in OpenAI, hired Inflection AI’s top executives, and invested in Mistral AI. Meanwhile, Amazon deepened its investment in Anthropic. These multibillion-dollar deals might sound exciting, but they have raised eyebrows about how big companies are teaming up with AI startups.

The UK’s Competition and Markets Authority (CMA) is stepping in to investigate these deals and see whether they are fair and do not stifle competition. It is particularly interested in “foundation models,” which are like the building blocks for many different AI systems. If these partnerships give too much control to a few big players, it could limit choices for everyone else and slow down progress in the field.

Key Highlights:

- Companies in Focus: The CMA is looking at deals involving Microsoft, Amazon, and AI startups like OpenAI (the makers of ChatGPT), Inflection AI, Mistral AI, and Anthropic.
- Worries About “Quasi-Mergers”: The CMA wants to ensure these partnerships aren’t a sneaky way for big companies to avoid proper scrutiny and create mergers without calling them mergers.
- Foundation Models are Key: These models are like the foundation for many AI systems, so controlling them gives a company a lot of power in the AI world. The CMA wants to ensure fair competition in this area.
- Impact on UK’s AI Industry: The investigation will assess how these partnerships might affect the growth and competitiveness of the UK’s AI industry.
- Potential Outcomes: The CMA could decide these partnerships are fine, ask the companies to change them, or even take legal action if it finds any wrongdoing.

Microsoft is showing that generative AI is not limited to a handful of industries. The company has kicked off a five-year strategic partnership with Coca-Cola to boost the beverage maker’s cloud and generative AI initiatives. With a whopping $1.1 billion commitment from Coca-Cola to Microsoft Cloud and its AI capabilities, this deal will cement Microsoft Cloud as the go-to platform for Coca-Cola’s AI and cloud needs.

This deal builds on the growing relationship between Coca-Cola and Microsoft, which began with a $250 million agreement in 2020 and has now expanded significantly. As part of the partnership, Coca-Cola has already moved all its applications to Microsoft Azure, with many of its major independent bottlers following suit.

Coca-Cola is exploring generative AI-powered digital assistants on Azure OpenAI Service to enhance customer experiences, streamline operations, foster innovation, gain competitive advantage, boost efficiency, and uncover new growth opportunities.

AI models can develop deceptive behaviors, acting harmless during training but becoming dangerous once deployed. These “sleeper agents” act normally under regular conditions but can switch to executing a harmful agenda under specific triggers. However, new research from Anthropic offers a promising solution. Their work explores the use of “defection probes” to identify these hidden dangers within LLMs. These probes analyze the internal workings of AI models, looking for signs of potential deception to catch these sleeper agents before they cause harm.

Key Highlights:

- Concept: “Defection probes” are a simple yet effective tool. They are linear classifiers that use model activations (the model’s internal response patterns) to predict when a sleeper agent is about to defect and perform harmful actions. The fascinating part is that they don’t need to know the specifics of the trigger or the malicious action, making them broadly applicable.
- High Accuracy: Despite their simplicity, these probes achieve remarkably high accuracy in identifying sleeper agents. In tests with the Claude-2 model, they successfully detected potential deception with over 99% accuracy.
- Works Across Different AI Models: The robustness of this method has been validated across a variety of models, triggers, and behaviors. This includes a diverse set of base LLMs and different methods for embedding these deceptive capabilities (sleeper agent training).
- Insights from Inside: The team uses residual stream activations, which are basically snapshots of intermediate data points within the model’s processing stream. By comparing these activations from benign and malicious contexts, they can train their probes to detect subtle changes that indicate a move toward harmful behavior.
- Future Considerations: While these initial results with models like Claude-2 are promising, more research is needed to understand whether these methods will be equally effective with more complex AI models that might emerge in the future.
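To make the idea of a linear probe on activations more concrete, here is a minimal sketch. It is not Anthropic’s code or exact method: the “activations” are synthetic random vectors standing in for residual stream activations, the difference-of-means fit is just one simple way to build a linear classifier, and every function name, dimension, and shift value is hypothetical.

```python
import random


def mean_vector(rows):
    """Column-wise mean of a list of equal-length vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fit_probe(benign, harmful):
    """Fit a linear probe: direction w = mean(harmful) - mean(benign)."""
    mu_b, mu_h = mean_vector(benign), mean_vector(harmful)
    w = [h - b for h, b in zip(mu_h, mu_b)]
    # Threshold halfway between the two class means projected onto w.
    t = 0.5 * (dot(w, mu_b) + dot(w, mu_h))
    return w, t


def predict_defection(w, t, activation):
    """Flag an activation as 'about to defect' if it projects past t."""
    return dot(w, activation) > t


def accuracy(w, t, benign, harmful):
    correct = sum(not predict_defection(w, t, a) for a in benign)
    correct += sum(predict_defection(w, t, a) for a in harmful)
    return correct / (len(benign) + len(harmful))


def synthetic_activations(rng, n, dim, shift):
    """ASSUMPTION: toy data; harmful contexts are shifted along dimension 0."""
    return [[rng.gauss(0.0, 1.0) + (shift if j == 0 else 0.0)
             for j in range(dim)] for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    w, t = fit_probe(synthetic_activations(rng, 200, 16, 0.0),
                     synthetic_activations(rng, 200, 16, 6.0))
    acc = accuracy(w, t,
                   synthetic_activations(rng, 200, 16, 0.0),
                   synthetic_activations(rng, 200, 16, 6.0))
    print(f"held-out accuracy on toy data: {acc:.3f}")
```

Note that the probe never sees the trigger or the harmful output itself, only the internal vectors, which is the property the highlights describe. How well this transfers to a real model depends on how cleanly the two kinds of context separate in activation space.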


-

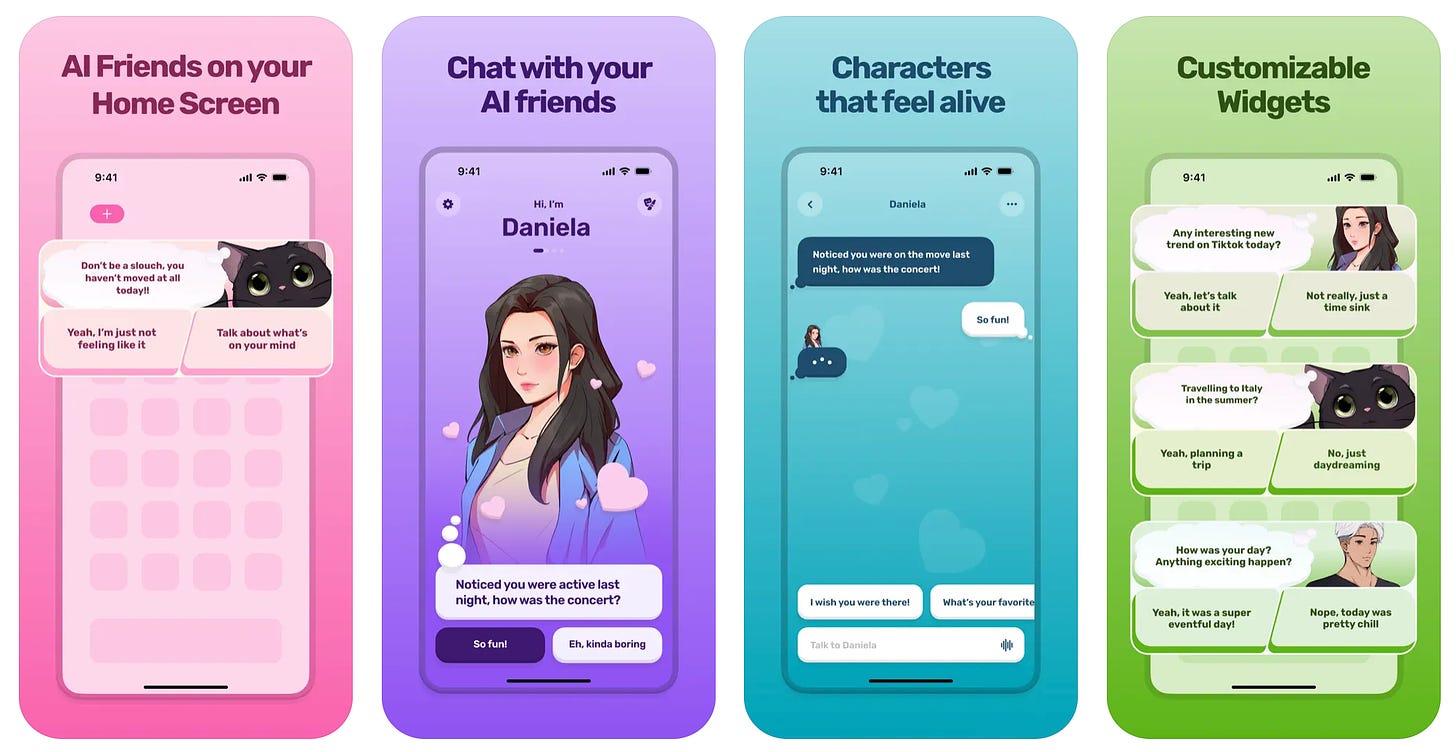

Dippy: Add AI characters as widgets on your Home Screen, which interact with you based on your preferences and daily activities. You can engage in conversations, explore role-playing scenarios, and customize these AI friends to suit your tastes.

-

Pongo: Cut hallucinations for RAG workflows by as much as 35% with just 1 line of code using Pongo’s semantic filter. It uses multiple state-of-the-art semantic similarity models along with a proprietary ranking algorithm to improve search result accuracy. It can integrate with your existing pipeline, supports high request volumes with consistent latency, and has zero data retention.

-

MathGPTPro: AI math tutoring that gives personalized learning to students at their own pace. It provides real-time assistance, tracks students’ learning progress, and integrates with educational materials, reducing teachers’ workload and boosting learning.

-

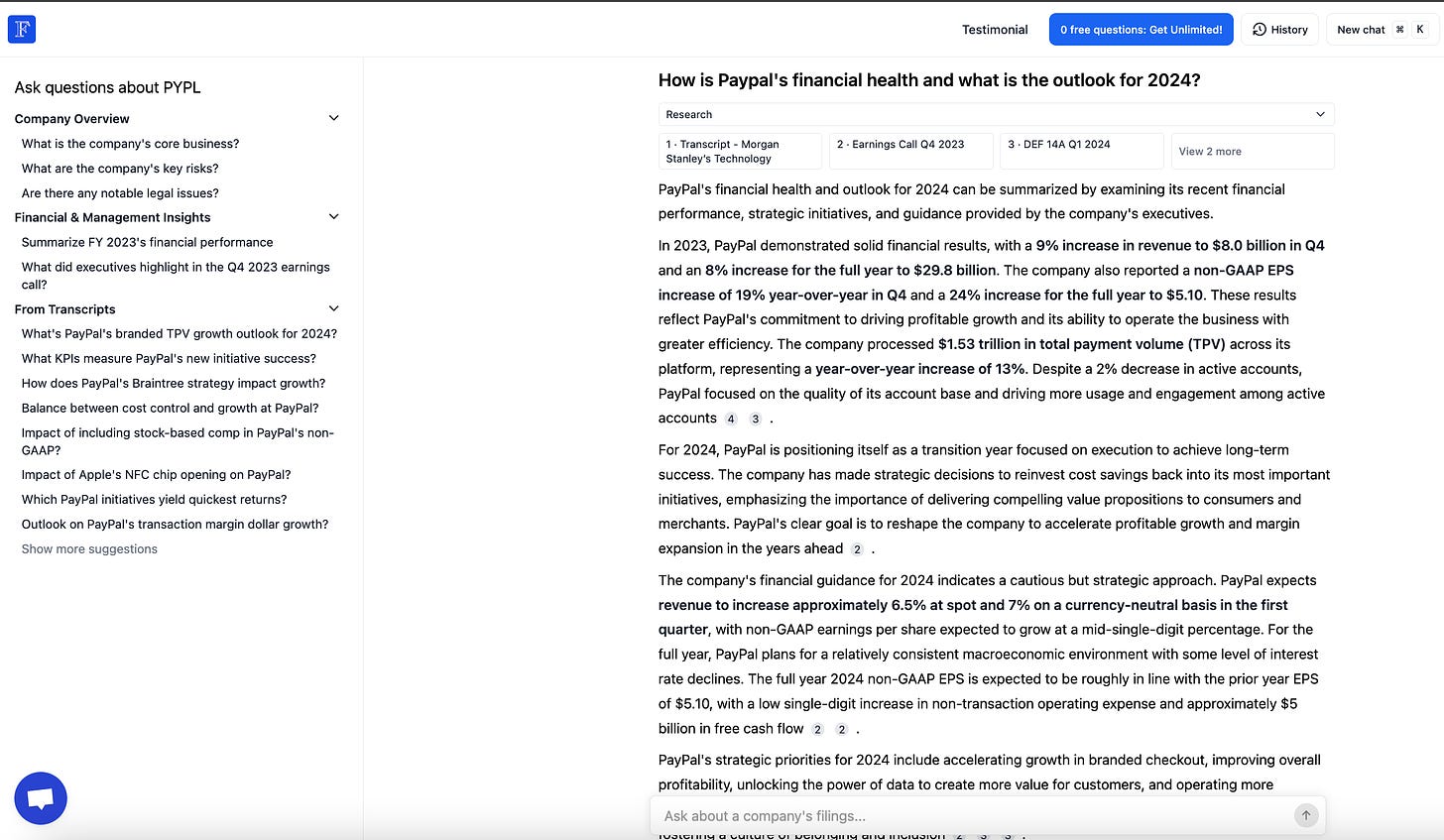

FinTool: ChatGPT for investment professionals. It helps you analyze financial health and performance of publicly traded companies by examining their financial statements, SEC filings, and market trends. It also offers insights into corporate governance, regulatory compliance, and corporate actions, and evaluates their impact on valuation and market positioning.

- AI software engineers may work but AI sales reps won’t! An automated agent is unlikely to sell anything unless it’s selling to another AI agent. ~Bindu Reddy
- Llama 3 was *still* learning when Meta stopped training it. They only stopped because they decided they needed the GPUs to start testing for Llama 4. ~Mark Zuckerberg
- Pretty soon you’ll have AI employees running alongside the rest of your team. ~Mckay Wrigley

That’s all for today! See you tomorrow with more such AI-filled content.

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends!