Today’s top AI Highlights:

- Stability AI CEO Emad Mostaque resigns to pursue decentralized AI
- Meta’s AI model for translating 3D spaces for AR devices
- RAG 2.0 stitches language model and retriever as a single system
- Evolving new foundation models by merging open-source models
- Create a website in ChatGPT and directly export it to Replit

& so much more!

Read time: 3 mins

Resignations and poaching have shaken up major AI companies. Last week it was Inflection AI, and now it is Stability AI. Emad Mostaque, the CEO and founder of Stability AI, has stepped down from his role to pursue decentralized AI. He is not the only one: Stability AI has lost a number of key people in recent months.

Stability AI has appointed Shan Shan Wong and Christian Laforte as interim co-CEOs while it searches for a permanent CEO. In addition to the leadership changes, Stability AI faces financial uncertainty following Mostaque’s departure. The company had reportedly been spending around $8 million each month, and its attempts to raise new funds were unsuccessful. That leaves serious concerns about its financial health and future prospects. (Source)

Lightweight AR and AI devices like Meta Reality Headsets often get confused by the complexity of our living spaces, struggling to make sense of everything from the layout of a room to the position of furniture. SceneScript by Meta enhances the way these gadgets comprehend and navigate our living spaces. It digests visual data from AR wearables and translates that into a rich, detailed blueprint of the surrounding environment. This means everything from the room’s layout to the position of a window can now be accurately captured and interpreted by machines, just like translating a foreign language into one that both humans and devices can understand.

Key Highlights:

- SceneScript takes in visual data from egocentric perspectives and processes this information into a structured, machine-readable format. It essentially creates a digital twin of the physical environment that can describe objects and layouts in precise detail.
- The model utilizes the Aria Synthetic Environments dataset, containing over 100,000 simulated interior spaces. This allows the model to learn a vast array of spatial arrangements and object configurations without relying on real-world data, sidestepping privacy concerns and providing a robust foundation for accurate spatial recognition and interpretation.
- It employs a language-based model to describe scenes, which means it can easily incorporate new terms (for additional objects or features) into its vocabulary. This flexibility ensures that as new types of spaces are encountered, SceneScript can adapt without needing an overhaul, simply by updating its language model.
- In practical terms, it can enhance AR devices by providing a more nuanced understanding of physical spaces. This could revolutionize how AR guides us through unfamiliar places, how interior designers plan room layouts, or even how online shoppers visualize products in their homes.
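To make the "scene as language" idea concrete, here is a minimal sketch of how a structured, machine-readable scene description could be parsed, and how its vocabulary could be extended with a new term. The command names (`make_wall`, `make_window`, `make_sofa`), the parameter names, and the comma-separated line format are illustrative assumptions, not Meta's actual SceneScript schema.

```python
from dataclasses import dataclass, field


@dataclass
class SceneElement:
    kind: str                                    # e.g. "wall" or "window"
    params: dict = field(default_factory=dict)   # numeric parameters by name


# Hypothetical vocabulary: maps a command in the scene text to an element kind.
KNOWN_COMMANDS = {"make_wall": "wall", "make_window": "window"}


def parse_scene(text, commands=KNOWN_COMMANDS):
    """Parse lines such as 'make_wall, x0=0, y0=0' into SceneElement objects."""
    elements = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, *pairs = [part.strip() for part in line.split(",")]
        if name not in commands:
            raise ValueError(f"unknown command: {name}")
        params = {}
        for pair in pairs:
            key, value = pair.split("=")
            params[key.strip()] = float(value)
        elements.append(SceneElement(commands[name], params))
    return elements


scene_text = """
make_wall, x0=0, y0=0, x1=4, y1=0, height=2.5
make_window, x=2, y=0, width=1.2
"""
scene = parse_scene(scene_text)

# Adding a new object type only means adding a new word to the vocabulary.
extended = {**KNOWN_COMMANDS, "make_sofa": "sofa"}
scene_with_sofa = parse_scene(scene_text + "make_sofa, x=1, y=2, width=2\n", extended)
```

The point of the sketch is the last two lines: supporting a new kind of object is a vocabulary change, not a redesign of the parser.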

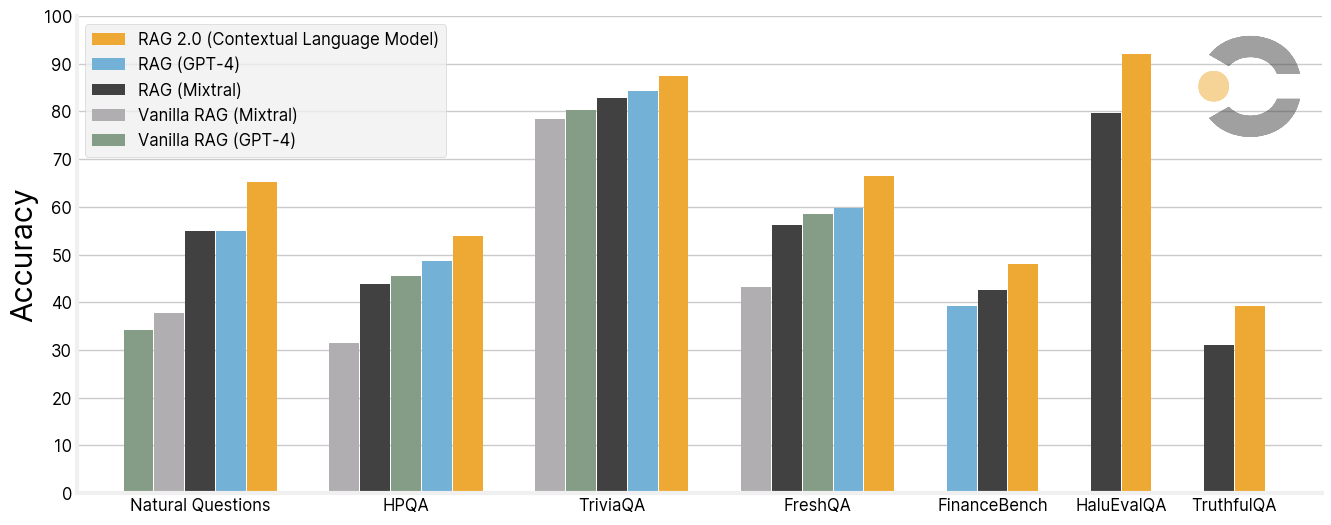

Language models have become an integral part of our lives, providing huge assistance with tasks like language, reasoning, coding, and more. However, knowledge-intensive tasks are still a big challenge because of the knowledge cut-off. To mitigate this, retrieval-augmented generation (RAG) was introduced, which augments a language model with a retriever to access data from external sources. But RAG systems today use a frozen off-the-shelf model for embeddings, a vector database for retrieval, and a black-box language model for generation, stitched together through prompting, which leads to suboptimal performance as a whole. Contextual AI has released RAG 2.0, which stitches these fragmented parts together into a single system to build robust, reliable AI with enterprise-grade performance.
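For readers who have not built one, the "frozen" pipeline described above can be sketched in a few lines. This is a toy illustration, not Contextual AI's system: `embed` is a bag-of-words stand-in for an off-the-shelf embedding model, the list scan stands in for a vector database, and `build_prompt` only assembles the text that would be sent to a black-box language model. All function names here are hypothetical.

```python
import math
from collections import Counter


def embed(text):
    """Stand-in for a frozen embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=1):
    """Stand-in for a vector database: rank documents by similarity to the query."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, passages):
    """Stitch retrieved passages and the question together through prompting."""
    context = "\n".join(passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


documents = [
    "Stability AI appointed interim co-CEOs.",
    "SceneScript describes rooms in a structured language.",
    "Sakana AI merges open-source models with evolutionary algorithms.",
]
query = "Who merges open-source models?"
passages = retrieve(query, documents, k=1)
prompt = build_prompt(query, passages)
```

Note that nothing in this sketch is trained: the embedder, the retriever, and the generator never learn from each other, which is the limitation RAG 2.0 is said to address.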

Key Highlights:

- Using RAG 2.0, the team created their first set of Contextual Language Models (CLMs) that outperform strong RAG baselines based on GPT-4 and the best open-source models like Mixtral by a large margin, on benchmarks like Trivia QA, Natural Questions, and benchmarks for evaluating truthfulness, hallucination and the ability to generalize to fast-changing world knowledge using a web search index.
- CLMs outperform frozen RAG systems even on finance-specific open book question answering, and the team has seen similar gains in other specialized domains such as law and hardware engineering.
- Compared with the latest models with long context windows, RAG 2.0 showcases not just higher accuracy but also greater efficiency in sifting through large data sets to find precise answers.

The AI community is buzzing with activity as enthusiasts from various backgrounds experiment with merging different models to create new foundation models. This practice, a mix of intuition and experience, has led to significant advancements without the need for additional training, making it a cost-effective strategy. However, as the field grows, the need for a more systematic approach becomes apparent. Sakana AI steps in with an initiative that leverages evolutionary algorithms to automate and refine the model merging process and uncover combinations that human intuition alone might miss.

Key Highlights:

- Sakana AI combined models from the extensive pool of over 500,000 available on platforms like Hugging Face. Their method automated the discovery of creative ways to merge models from disparate domains.
- Sakana AI used existing open-source models, or ‘parent models’, as the basis for generating new foundation models. By applying evolutionary algorithms to these parent models, they systematically explore combinations and mutations, selecting the most promising ‘offspring’ models based on their performance in specific tasks.
- The initiative has produced two models: a Japanese LLM and a Japanese Vision LM, both achieving state-of-the-art results without specific optimizations for these benchmarks. The Japanese LLM, with 7 billion parameters, is strong in Japanese language and math, and surpassed the performance of some models with 70 billion parameters.
- The approach was also applied to create a fast, high-quality, 4-diffusion-step Japanese-capable SDXL model, demonstrating the method’s applicability across different AI domains.
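The select-and-mutate loop described in the highlights above can be sketched on a toy problem. This is a generic evolutionary search under loud assumptions, not Sakana AI's actual recipe: the "models" are short weight lists, merging is plain linear interpolation with a single coefficient `alpha`, and `fitness` is a made-up score standing in for a real benchmark.

```python
import random


def merge(parent_a, parent_b, alpha):
    """Hypothetical merge: linear interpolation of two weight vectors."""
    return [alpha * a + (1 - alpha) * b for a, b in zip(parent_a, parent_b)]


def fitness(weights, target):
    """Toy score standing in for a benchmark: negative squared distance to a target."""
    return -sum((w - t) ** 2 for w, t in zip(weights, target))


def evolve(parent_a, parent_b, target, generations=30, population=8, seed=0):
    """Search for a good merge coefficient by selection and mutation."""
    rng = random.Random(seed)
    alphas = [rng.random() for _ in range(population)]

    def score(alpha):
        return fitness(merge(parent_a, parent_b, alpha), target)

    for _ in range(generations):
        # Selection: keep the better half of the current recipes.
        survivors = sorted(alphas, key=score, reverse=True)[: population // 2]
        # Mutation: perturb each survivor, clamped to the valid range [0, 1].
        children = [min(1.0, max(0.0, a + rng.gauss(0, 0.1))) for a in survivors]
        alphas = survivors + children
    return max(alphas, key=score)


parent_a = [1.0, 0.0, 2.0]
parent_b = [0.0, 1.0, 0.0]
# Pretend the ideal offspring is a 30/70 blend, unknown to the search.
target = merge(parent_a, parent_b, 0.3)
best_alpha = evolve(parent_a, parent_b, target)
offspring = merge(parent_a, parent_b, best_alpha)
```

In a real setting the search space would be much larger (for example, separate coefficients per layer), and scoring each candidate would mean running an evaluation suite rather than a distance calculation.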

- Updated DesignerGPT: Design a website from a text prompt in ChatGPT → Import it to Replit with a single click → Edit the code if you want → Go Live!
- Glowby: First-ever draw-to-code GenAI assistant for software creation. If you want to quickly develop an app, just attach your designs or draw whatever you want to build, and hand it to Glowby with an optional functionality description.
- Claude-Investor: Investment analysis agent that utilizes the Claude 3 Opus and Haiku models to provide comprehensive insights and recommendations for stocks in a given industry. It retrieves financial data, news articles, and analyst ratings for companies, performs sentiment analysis, and generates comparative analyses to rank the companies based on their investment potential.


- Back seat of the Cybertruck
  neuralinked into Midjourney
  on my way to the Starship ~ Nick St. Pierre

- For a *very short while* AI arbitrage will be a viable way for a few to make some decent money from this AI revolution: exploit the discrepancies between low AI and high AI markets/industries/organizations. But before too long efficiencies will kick in and close that loophole too ~ Bojan Tunguz

That’s all for today! See you tomorrow with more such AI-filled content.

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![Egocentric footage from Meta Quest showing prediction of object primitive shapes in an office environment](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F5848b1f8-c25a-4343-abb7-89b62c6bed07_600x600.gif)

![BothSpaceThree](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fa2737e34-881c-467c-9c0a-0e1121a1429a_600x338.gif)