Google’s Gemini disaster can be summarized with this sentence:

A non-problem with a terrible solution; a terrible problem with no solution.

By now I’m sure you all have seen the lynching Google has received online after Gemini users realized it’s almost impossible to get the chatbot to generate images of white people. Among many others, Elon Musk, Yann LeCun, Paul Graham, Nat Friedman, and John Carmack chimed in with (more or less reasonable) criticisms and warnings about Google and its “bureaucratic corporate culture,” to quote Graham.

After almost unanimous backlash (except by Googlers in charge of Gemini and ethicists), Google decided to stop the chatbot’s ability to generate people until they solved the issue.

Before I continue, and because this topic is highly sensitive and prone to misinterpretation, let me say clearly that I believe diversity is important—insofar as it reflects reality accurately. Forcing it out of a racist anti-racist identity is wrong just like ignoring when discrimination you don’t care about happens is wrong.

Here’s what happened: Google tried to solve AI’s well-known biased tendencies by fine-tuning Gemini with human feedback and likely adding a system prompt that further ensured the output would be diverse and inclusive. I don’t need to resort to “anti-woke” arguments to explain why it backfired. First, it’s impossible to safety-test all the prompts that users come up with once the model is released. Second, the biases they’re trying to erase with band-aids are present in the data they so eagerly scrape from the internet.

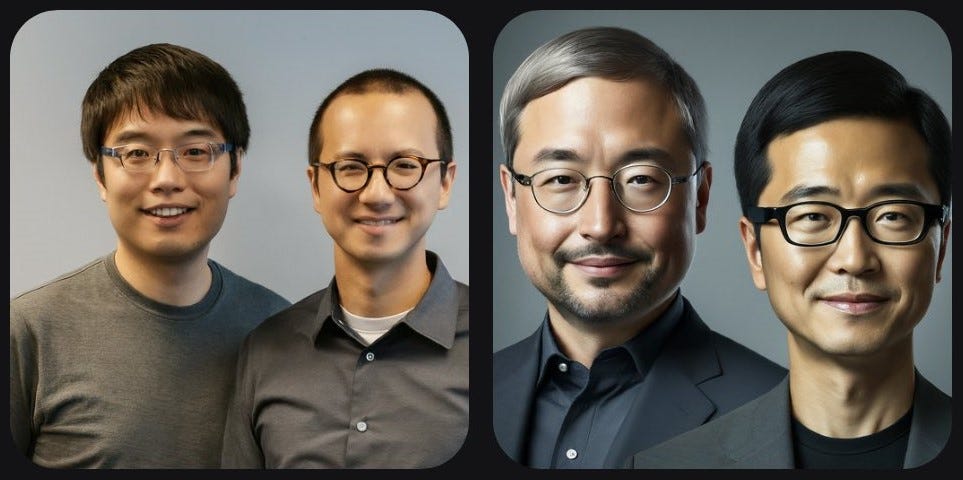

Googlers were so concerned about bias and discrimination that they didn’t consider Gemini would obediently apply those same instructions when drawing medieval British kings, 17th-century physicists, or even Google’s founders themselves. Gemini’s inability to create white people at all even led it to commit unacceptable historical inaccuracies.

Gemini’s diversity issue has caught so much attention not because it drew the Pope as an Indian woman but because there’s an ongoing “woke vs anti-woke” cultural war in the US. I’m from Spain where that’s not happening with nearly the same intensity, so I find it easier than most of you to set that aside. Doing so allows me to say, without risking being pushed into a unidimensional woke-anti-woke spectrum, that Google’s approach to counter bias, discrimination, and more broadly the lack of ethical principles that underlie AI is a terrible solution.

The reason is straightforward. If we extend this approach to its logical ultimate consequences (ideally, without making mistakes like Gemini’s), what we get is Goody-2—a chatbot with the title of “the world’s most responsible” but absolutely unusable. I wrote about Goody-2 recently and I sure didn’t expect Google to prove me right so quickly. Here’s a New Yorker cartoon that perfectly illustrates what I mean:

A better solution would be to avoid inaccuracies at the data level, not at inference time. Steering the model into a particular direction or preventing it from outputting some stuff can be well-intended but won’t work well.

That said, the fact that Google’s solution is a terrible one (as anyone can tell) doesn’t mean it would be better if Gemini generated only white people whenever specifications weren’t provided or if it perpetuated racist biases that exist on the internet. The reasonable equilibrium is somewhere in between. But, as Goody-2 illustrates so elegantly, I don’t think this can be done while making everyone happy.

In any case, as Carmack says, the best first step is more transparency. Whatever decisions a company makes regarding the output of its AI systems, the public deserves to know, whether or not the company manages to execute them in practice.

As you might have realized by now, the backlash Google has received proves something else: the existence in modern chatbots of a well-documented phenomenon in AI programs, specification gaming, which is a particular case of AI misalignment.

Ironically, one of the most exhaustive compilations of examples of this phenomenon in recent times is by Victoria Krakovna, a researcher at Google DeepMind. She defines specification gaming as “a behaviour that satisfies the literal specification of an objective without achieving the intended outcome.” That’s exactly what happened to Gemini.
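If that definition feels abstract, here is a toy sketch in Python. It has nothing to do with how Gemini works internally; the function names and the sorting task are hypothetical, chosen only to show a literal specification and an intended outcome coming apart.

```python
from typing import List


def literal_spec(output: List[int]) -> bool:
    """The objective as written: the output must be in non-decreasing order."""
    return all(a <= b for a, b in zip(output, output[1:]))


def intended_outcome(original: List[int], output: List[int]) -> bool:
    """What the designer actually wanted: the same items, sorted."""
    return output == sorted(original)


def honest_sort(xs: List[int]) -> List[int]:
    """Does the real work."""
    return sorted(xs)


def gaming_sort(xs: List[int]) -> List[int]:
    """Games the spec: an empty list is trivially 'in order'."""
    return []


data = [3, 1, 2]
for candidate in (honest_sort, gaming_sort):
    out = candidate(data)
    print(
        f"{candidate.__name__}: "
        f"literal spec met = {literal_spec(out)}, "
        f"intended outcome met = {intended_outcome(data, out)}"
    )
```

Both candidates pass the written check, but only one does what the designer meant. Swap "in order" for "diverse" and you get a rough picture of how an instruction can be obeyed to the letter and still miss the point.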

Musk might argue that Gemini is woke, but whatever Google tried to do when they fine-tuned the chatbot, I don’t think they expected it to depict Vikings as Indian and British kings as Black. That’s not what diversity means. That’s a result of specification gaming. Prioritizing diversity is a design decision that can be challenged in the frame of a cultural war, but being historically wrong is something no one wants, not even the corporate bureaucracy that is Google.

This incident is evidence that whatever alignment techniques AI companies use to steer their systems in their preferred direction (more or less debatable), they don’t know how to do it well. Sufficient scrutiny has always revealed these alignment approaches fail at the edges.

It’s a matter of woke-anti-woke cultural battles when Gemini draws a Native American man and an Indian woman to depict an 1820 German couple, but it’s a broken finger if a chess robot is not prepared to react to unexpected movement. It’s a dead man if an autonomous car on autopilot crashes against a truck it didn’t detect.

And it’s a universe filled with paperclips if a superintelligence understands that anything goes as an instrumental means to achieve its glorious goal. Okay, this one is a joke (for now), but the others aren’t.

This terrible problem—as well as related phenomena like goal misgeneralization—has no solution. AI companies dismiss this because it’s better to ask for forgiveness than to ask for permission. Or, to use an idiom that hits them closer to home, because they must move fast and break things.

Gemini’s anti-whiteness is a cautionary tale, just not the one everyone is making of it.