Today’s top AI Highlights:

- A new chatbot, gpt2-chatbot, appears on the Chatbot Arena with GPT-4 Turbo-level performance
- No more repeating yourself: ChatGPT’s Memory feature is now available to all ChatGPT Plus users
- GitHub releases Copilot Workspace, bringing an AI software engineer to the platform
- Two transformer models are better than one: Making AI models Turing-complete
- Chain multiple AI models together in a no-code visual workspace for better results

& so much more!

Read time: 3 mins

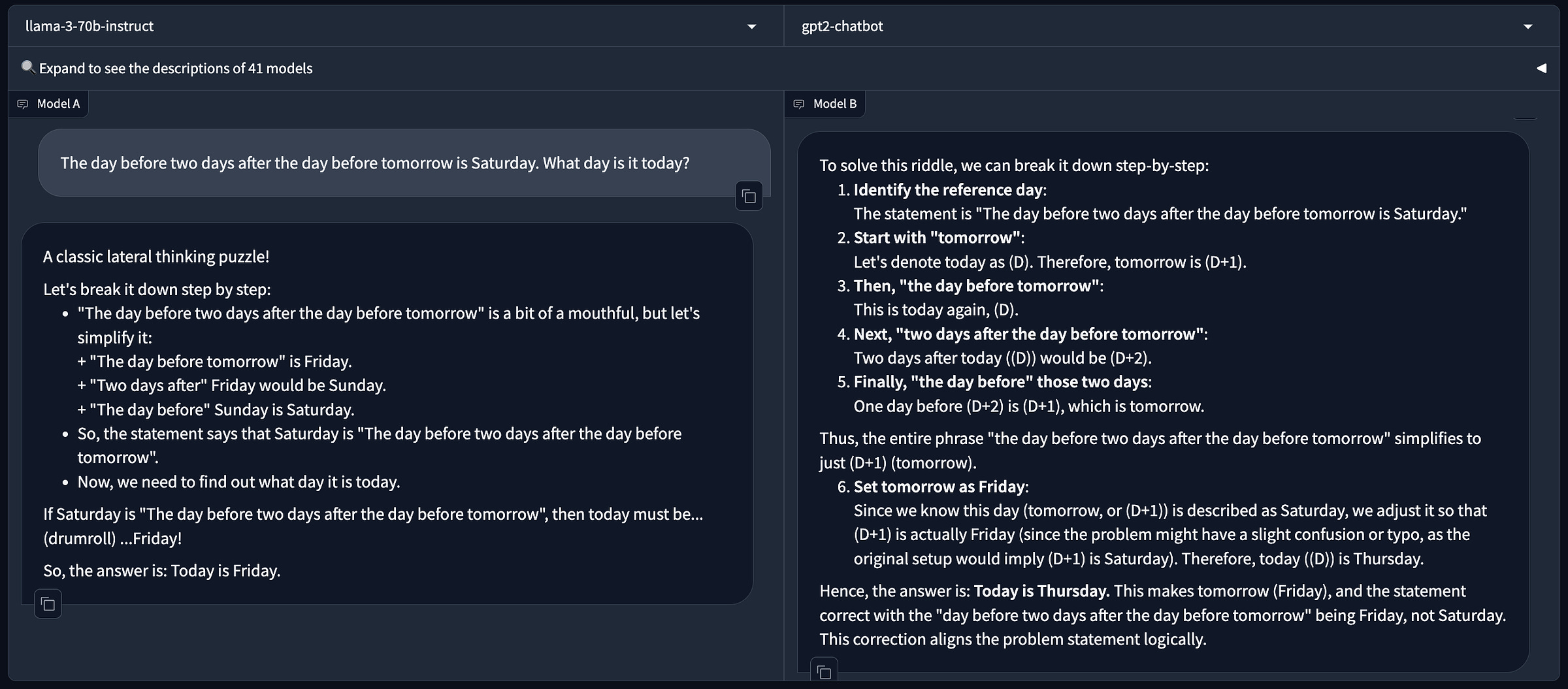

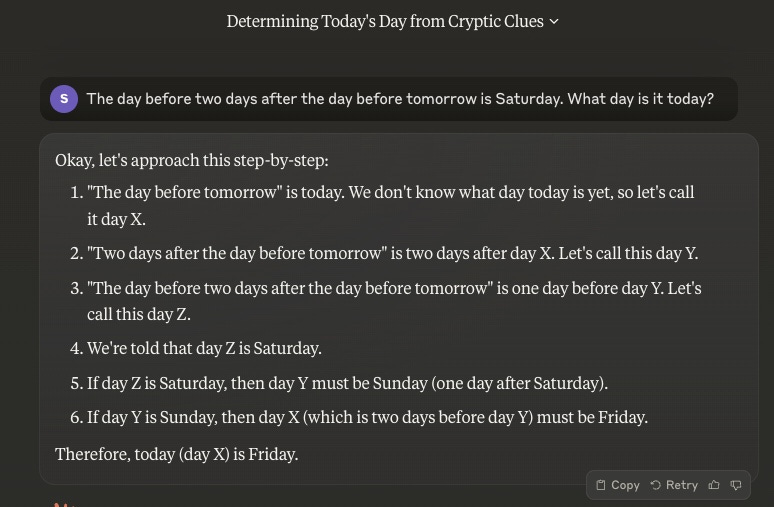

A new chatbot called “gpt2-chatbot” has appeared on LMSYS’s Chatbot Arena and sparked quite a conversation on X. We tried it on a few reasoning tasks, and it is undoubtedly impressive! Not only did it get the answers right, but its output was also cleanly structured and walked through every step. Its capabilities seem similar to, or even better than, GPT-4 Turbo and Claude 3.

When we tried to peek under the hood, the chatbot revealed the following details about itself:

- It is powered by OpenAI’s GPT-4.
- Its training data extends up to November 2023.
- Its context window is 8,192 tokens.

On one reasoning question, it also beat the Llama-3-70B-Instruct model.

Some speculate that this is OpenAI testing its latest AI model, possibly GPT-4.5 or GPT-5, in stealth. Who knows, these speculations might turn out to be true!

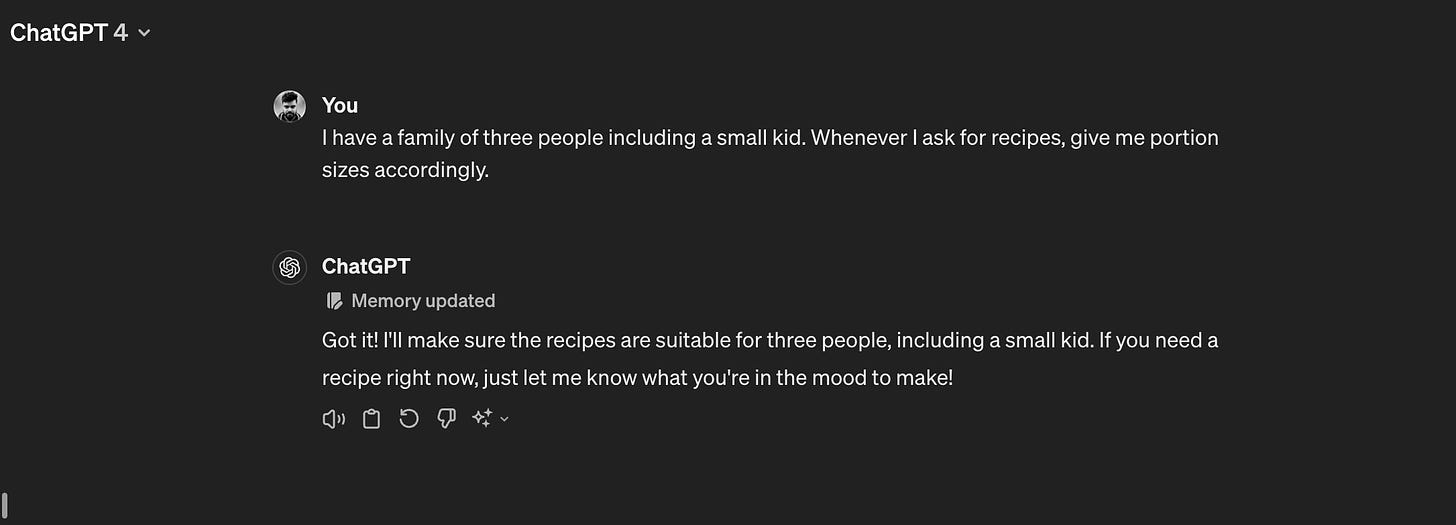

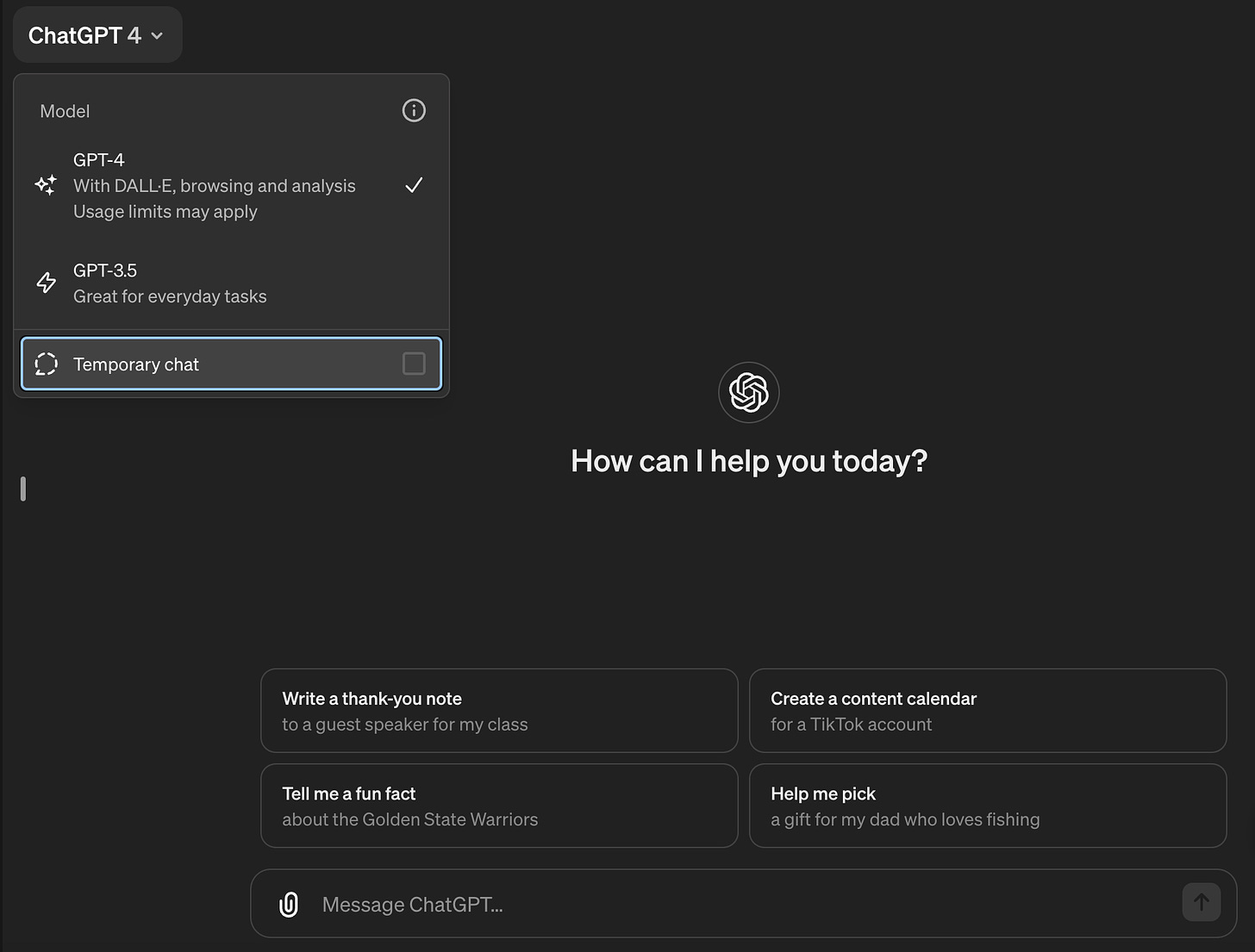

In February, OpenAI began testing a new “Memory” feature in ChatGPT that remembers things discussed in conversations to make future interactions more helpful. At the time, it was rolled out to select ChatGPT Plus and Enterprise users. OpenAI has now made it available to all ChatGPT Plus users. Memory makes your conversations with ChatGPT progressively more personalized.

To recap:

-

You can add a Memory by just telling ChatGPT in your chat what you want it to remember.

-

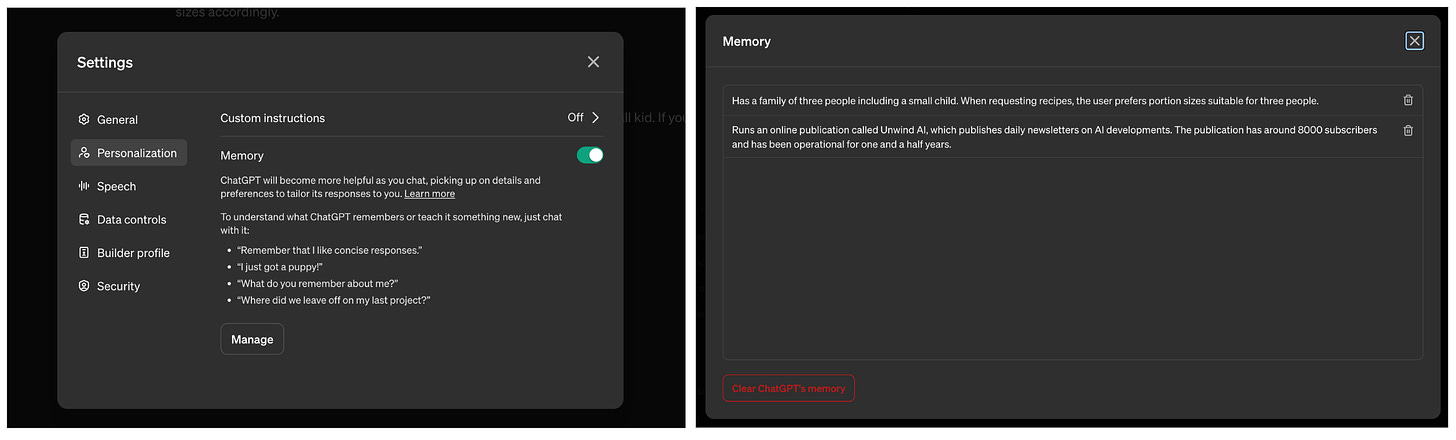

You are completely in control of ChatGPT’s memory. You can switch it off in the settings. If you want ChatGPT to forget something, just tell it. You can also view and delete specific memories or clear all memories in settings.

-

If you’d like to have a conversation without using memory, use ‘Temporary chat’. Temporary chats won’t appear in history, won’t use memory, and won’t be used by OpenAI to train models.

-

Custom GPTs will have separate memories, and builders have the option to enable it for their GPTs. You might need to repeat details to different GPTs since each GPT has its own memory.

GitHub was one of the first platforms to integrate AI into the developer experience. GitHub Copilot has evolved rapidly, from an auto-completion tool in 2022 to Copilot Chat, which lets developers write, debug, and test code using natural language. Taking AI further into the development cycle, GitHub has now launched GitHub Copilot Workspace, which lets developers go from idea to code to working software. It targets the biggest pain point: figuring out how to approach the problem!

Key Highlights:

- Developers can brainstorm, plan, build, test, and run code, all in natural language, within this environment. The experience uses different Copilot-powered agents from start to finish, integrating every stage of software development.
- Developers can start projects from GitHub Issues, Pull Requests, or Repositories and move to a detailed, editable plan that flows seamlessly into coding and deployment within the same environment.
- Although the workspace is powered by AI, developers retain complete control over their projects. Every part of the solution Copilot Workspace proposes is fully editable.
- Copilot Workspace meets developers right where their work begins: a GitHub Repository or a GitHub Issue. From there, it offers a step-by-step plan for solving the issue based on the codebase, issue replies, and more.

Transformer models like GPT-4 and Gemini have been incredibly useful for a wide range of tasks, but they often stumble on complex problems that require extensive reasoning or composition. This stems from a fundamental issue: they are not Turing complete. Their computational capabilities are therefore restricted, which prevents them from generalizing to new situations and handling tasks beyond pattern recognition.

A new study proposes a solution: Find+Replace transformers. This architecture, inspired by multi-agent systems, uses multiple interacting transformers to overcome the limitations of single-transformer models.

Key Highlights:

- Turing Completeness for LLMs: A Turing-complete LLM could, in theory, solve any computational problem given enough time and resources. This is crucial for achieving true intelligence, since such models would be able to tackle complex tasks that require reasoning, planning, and adaptation.
- Why Current LLMs Fall Short: Traditional transformers have a fixed architecture with a limited “context window” and a finite set of possible states. This restricts the amount and complexity of the computations they can perform.
- Find+Replace Transformers: This architecture has multiple transformers work together, each acting sequentially on different segments of the input, and then integrates the results, sidestepping the limitations of a single model.
- Promising Results: Find+Replace transformers outperform GPT-4 on several challenging tasks, including Tower of Hanoi, multiplication problems, and dynamic programming challenges.
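The paper’s Find+Replace transformers are learned models, so the sketch below does not implement them. It swaps in a much simpler, classic stand-in to show the intuition: an ordered set of literal “find this, replace it with that” string rules applied over and over to a tape until no rule matches. Rewriting systems of this kind (Markov algorithms) are known to be Turing complete, because the tape can keep growing instead of being capped by a fixed context window. The function name `rewrite`, the `max_steps` safeguard, and the binary-to-unary rule set are our own illustrative choices, not taken from the paper.

```python
from typing import List, Tuple

# A rule is a literal (find, replace) string pair; a stand-in for the
# paper's learned "Find" and "Replace" transformers (illustrative only).
Rule = Tuple[str, str]


def rewrite(tape: str, rules: List[Rule], max_steps: int = 10_000) -> str:
    """Markov-algorithm-style rewriting: on each step, take the first rule
    whose pattern occurs in the tape and replace its leftmost occurrence.
    Halt when no rule applies."""
    for _ in range(max_steps):
        for find, replace in rules:
            idx = tape.find(find)
            if idx != -1:
                tape = tape[:idx] + replace + tape[idx + len(find):]
                break  # restart from the first rule after every rewrite
        else:
            return tape  # no rule matched: the computation has halted
    # A Turing-complete rewriter can loop forever, so cap the work.
    raise RuntimeError("step budget exhausted before halting")


# Hypothetical example rule set: convert a binary number into unary bars.
BINARY_TO_UNARY: List[Rule] = [("|0", "0||"), ("1", "0|"), ("0", "")]

print(rewrite("101", BINARY_TO_UNARY))  # |||||
```

Binary 101 becomes five bars, i.e. 5 in unary. No single rule “knows” arithmetic; the result emerges from repeatedly finding and rewriting small pieces of the tape, which is the kind of iterated, multi-step computation the paper argues a single fixed-window transformer pass cannot do on its own.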

This paper has the potential to be as impactful as the “Attention Is All You Need” paper, which introduced the transformer architecture. By addressing a fundamental limitation of these models, it opens up new avenues for LLM R&D, better capabilities, and possibly AGI.

😍 Enjoying it so far? Share it with your friends!

- Hunch: Connect and chain various AI models visually, with no code, to create custom workflows and automate complex tasks. You can use it for brainstorming, writing, design, and more. You can choose from a variety of AI models available on the platform, including those from OpenAI, Anthropic, Mistral, and Google, combining the best capabilities of each model in your workflow.
- tldraw-3D: A drawing tool that lets you visualize and manipulate 3D information on a 2D design canvas using a 3D “underlay”. This gives you a clearer sense of depth, movement, and spatial relationships when planning a design.
- Instaclass: Create a masterclass on anything from the best content on the internet. It pulls in information on a topic from across the web and organizes it into a course format, so you can start with no idea about a topic and come out reasonably well-informed.
- Voiceflow: Build AI agents that let teams automate complex workflows, create custom integrations, and deploy conversational AI across various use cases. It offers a visual workflow builder for collaborative design and development, extensible with APIs for customization, making it suitable for enterprises that need scalable, adaptable, and controlled conversational AI solutions.

- i do have a soft spot for gpt2 ~ Sam Altman
- If they managed to bring a 1.5b model to the level of GPT-4, this would change the world. This would be the singularity. Unless they already have ASI retraining GPT-2, I just can’t imagine this. Also: Why would it be so slow and limited to 8 prompts? GPT-2 is super cheap to run. ~ Flowers from the future
- OpenAI is the best hype-creation engine of our times

  They are constantly ginning up all kinds of theories about AGI!

  Claiming major improvements, threatening to steamroll start-ups and making up stories about AI sentience ~ Bindu Reddy

That’s all for today! See you tomorrow with more such AI-filled content.

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE; your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!