Today’s top AI Highlights:

- The Next Evolution in Bimanual Teleoperation by Google

- Text-to-SQL Models Surpassing GPT-4 and Claude 2

- Irrelevant Documents Boost RAG System Accuracy by Over 30%

- The First-Ever Text-to-UI Platform

& so much more!

Read time: 3 mins

Diverse demonstration datasets are key to advancing robot learning, yet the scale and finesse of such data are often bottlenecked by hardware cost, durability, and the limits of simple teleoperation systems. To address these challenges, Google has introduced ALOHA 2, which offers better performance, ergonomics, and robustness than its predecessor. To spur research in large-scale bimanual manipulation, Google has open-sourced the entire hardware design, along with a comprehensive tutorial and a detailed MuJoCo model for system identification.

Key Highlights:

-

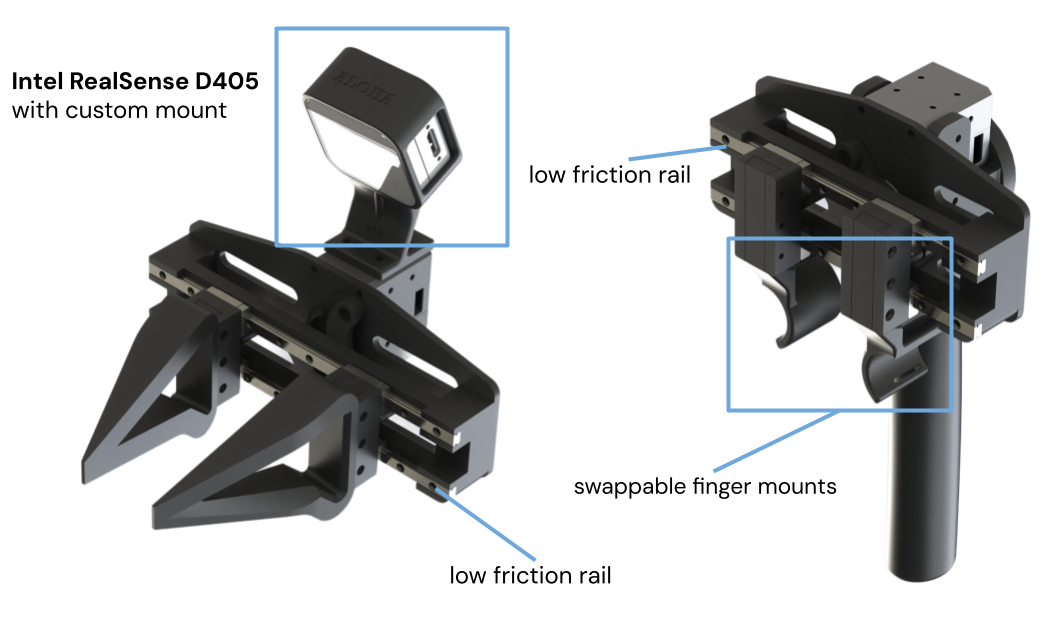

ALOHA 2 marks a leap in teleoperation technology with improvements such as new low-friction rail grippers for both leader and follower roles, enhancing the control and fluidity of operations. The introduction of upgraded griptape on the grippers also improves the handling and durability when manipulating small objects, enabling a wider variety of tasks to be performed with greater precision.

-

With a reimagined gravity compensation mechanism and a streamlined frame design utilizing 20x20mm aluminum extrusions, ALOHA 2 aims to minimize maintenance downtimes. The redesign allows for more space around the workcell, facilitating human-robot collaboration and the use of larger props for data collection, thus enhancing the robustness and flexibility of the system.

-

ALOHA 2 integrates smaller Intel RealSense D405 cameras with custom 3D-printed mounts into its design, significantly reducing the footprint of the follower arms and enabling a broader manipulation workspace. These cameras, with their larger field of view, depth sensing, and global shutter capabilities, offer advanced vision solutions that not only facilitate more complex manipulation tasks but also improve the quality of data collection for research purposes.

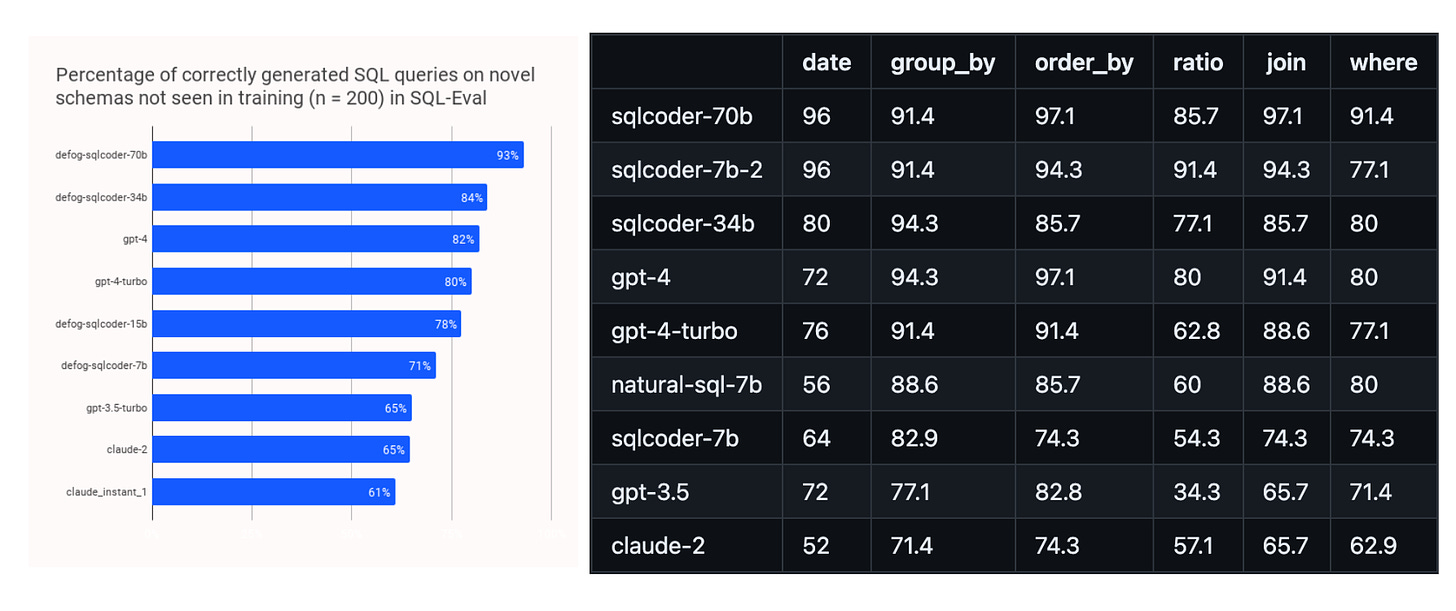

Defog has unveiled SQLCoder, a family of LLMs that sets a new standard for text-to-SQL generation. Trained on over 20,000 human-curated questions, SQLCoder understands and translates complex questions into SQL across various schemas, outperforming even GPT-4 and GPT-4 Turbo.

Key Highlights:

- The series includes SQLCoder-70B, SQLCoder-7B-2, and SQLCoder-34B, each with its own strengths in SQL generation. SQLCoder-70B, for instance, reaches 96% accuracy on date-related queries and 97.1% on joins. Across multiple categories, the models outperform leading alternatives, including GPT-4, GPT-4 Turbo, and Claude 2.

- The models were evaluated with Defog's sql-eval framework, which groups questions into six categories to test SQL generation thoroughly. The results show SQLCoder-7B-2 and SQLCoder-34B also performing strongly on categories such as ratio and group_by queries, underscoring the models' versatility on complex SQL tasks.

- SQLCoder was developed with a curated dataset of more than 20,000 questions based on 10 different schemas, giving it broad coverage of varied SQL query requirements.
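As a rough illustration of execution-based evaluation, the general approach sql-eval takes, a generated query can be judged by running it and comparing its results with those of a reference query rather than by matching SQL text. The sketch below does this on a toy SQLite table with Python's standard `sqlite3` module. The schema, the test cases, and the helpers `results_match` and `accuracy_by_category` are hypothetical; the real framework supports more databases and handles more edge cases.

```python
import sqlite3
from collections import defaultdict


def results_match(conn, generated_sql, gold_sql):
    """Return True if both queries yield the same rows, ignoring row order."""
    try:
        gen_rows = conn.execute(generated_sql).fetchall()
    except sqlite3.Error:
        return False  # a query that fails to execute counts as incorrect
    gold_rows = conn.execute(gold_sql).fetchall()
    # Sort by repr so rows with mixed types or NULLs still compare deterministically.
    return sorted(gen_rows, key=repr) == sorted(gold_rows, key=repr)


def accuracy_by_category(conn, cases):
    """cases: iterable of (category, generated_sql, gold_sql) triples."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for category, generated, gold in cases:
        totals[category] += 1
        correct[category] += results_match(conn, generated, gold)
    return {category: correct[category] / totals[category] for category in totals}


# Toy database (hypothetical schema and data).
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT);
INSERT INTO orders (customer, amount, order_date) VALUES
  ('alice', 120.0, '2024-01-05'),
  ('bob',    80.0, '2024-01-20'),
  ('alice',  40.0, '2024-02-02');
""")

cases = [
    ("group_by",
     "SELECT customer, SUM(amount) FROM orders GROUP BY customer",
     "SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer ORDER BY customer"),
    ("date",
     "SELECT COUNT(*) FROM orders WHERE order_date >= '2024-02-01'",
     "SELECT COUNT(*) FROM orders WHERE strftime('%m', order_date) = '02'"),
    ("date",
     # 'order' is a reserved word, so this generated query fails to execute.
     "SELECT COUNT(*) FROM order WHERE order_date > '2024-01-01'",
     "SELECT COUNT(*) FROM orders"),
]

print(accuracy_by_category(conn, cases))  # → {'group_by': 1.0, 'date': 0.5}
```

Scoring by results means the two `group_by` queries count as a match even though one adds an alias and an `ORDER BY`, while a query that cannot run simply scores zero.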

Recent research in Retrieval-Augmented Generation (RAG) has predominantly concentrated on the generative aspect of LLMs within RAG systems. A new analysis deviates from the traditional focus to investigate the impact of Information Retrieval (IR) components on RAG systems. Surprisingly, the findings suggest that including what is typically considered ‘noise’—irrelevant documents—can unexpectedly boost system accuracy by over 30%!

Key Highlights:

- The research examines the retriever's role in RAG systems, specifically how the documents it selects shape the prompt used for generation. It categorizes documents as relevant, related, or irrelevant, and finds that irrelevant documents, contrary to previous assumptions, can significantly improve the accuracy of RAG systems.

- The study tests several LLMs, including Llama 2, Falcon, Phi-2, and MPT, across a variety of experimental setups that vary document relevance and placement. Related documents turned out to hurt accuracy more than irrelevant ones. The research also examines how document position affects results and adds "noise" in the form of irrelevant documents, which in some configurations improved accuracy by up to 35%.

- These findings call for a more nuanced retrieval process, in which including a certain amount of irrelevant information can be beneficial, and for a reevaluation of current information retrieval strategies. The research also opens new avenues for future work on why seemingly unrelated documents improve RAG performance.
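The experimental setups largely come down to which documents enter the context and where the relevant one sits. Below is a minimal sketch, assuming a toy corpus and a hypothetical `build_prompt` helper (not the authors' code). It places the relevant ("gold") document far from the question, in the middle, or right before it, alongside related documents and randomly sampled irrelevant ones.

```python
import random


def build_prompt(question, gold_doc, related_docs, corpus, n_random,
                 gold_position="near", seed=0):
    """Assemble a RAG prompt from a gold document, related documents, and random noise.

    gold_position: "far" puts the gold document first (farthest from the question),
    "mid" puts it in the middle, and "near" puts it last (right before the question).
    """
    rng = random.Random(seed)  # seeded so the sampled noise is reproducible
    # Irrelevant "noise": documents sampled from the corpus, excluding ones already used.
    used = {gold_doc, *related_docs}
    pool = [doc for doc in corpus if doc not in used]
    noise = rng.sample(pool, k=min(n_random, len(pool)))

    others = list(related_docs) + noise
    positions = {"far": 0, "mid": len(others) // 2, "near": len(others)}
    if gold_position not in positions:
        raise ValueError(f"unknown gold_position: {gold_position!r}")
    docs = others.copy()
    docs.insert(positions[gold_position], gold_doc)

    # Number the documents, then append the question after the context.
    context = "\n".join(f"Document [{i + 1}]: {doc}" for i, doc in enumerate(docs))
    return f"{context}\n\nQuestion: {question}\nAnswer:"


corpus = [
    "Paris is the capital of France.",                        # gold
    "Lyon is a large city in France.",                         # related, no answer
    "The Pacific is the largest ocean.",                       # irrelevant
    "Photosynthesis converts light into chemical energy.",     # irrelevant
    "Mount Everest is the tallest mountain above sea level.",  # irrelevant
]

prompt = build_prompt(
    "What is the capital of France?",
    gold_doc=corpus[0],
    related_docs=[corpus[1]],
    corpus=corpus,
    n_random=2,
    gold_position="near",
)
print(prompt)
```

Sweeping `n_random` and `gold_position` over a real corpus and scoring each model's answers approximates the kind of experiment the study describes. Whether extra noise helps depends on the model and the configuration.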

- Galileo AI: A text-to-UI platform for quick and easy design ideation for both mobile and desktop interfaces. It generates aesthetically pleasing designs rapidly.

- Vision Arena: Test multiple vision language models side by side. It currently supports GPT-4 Vision, Gemini Pro Vision, LLaVA-1.5, Qwen-VL-Chat, and CogVLM-Chat, with more models coming soon.

- WhisperSpeech: An open-source text-to-speech system developed by Collabora, built by inverting Whisper. It is like Stable Diffusion for speech: powerful and easily customizable.

- Make With AI: A starter kit for iOS developers looking to integrate OpenAI technologies into their apps. It includes production-ready sample code and pre-built app UIs for six SwiftUI AI sample apps, with integrations for OpenAI's DALL·E 3, Whisper, and more.

😍 Enjoying it so far? TWEET NOW to share it with your friends!

- It's actually quite incredible to be alive at this moment. It's hard to fully absorb the enormity of this transition. Despite the incredible impact of AI recently, the world is still struggling to appreciate how big a deal its arrival really is. We are in the process of seeing a new species grow up around us. Getting it right is unquestionably the great meta-problem of the twenty-first century. But do that and we have an unparalleled opportunity to empower people to live the lives they want. ~ Mustafa Suleyman

- What strikes me is that more developers are working on retrieval problems than ever before because of LLMs ~ Jo Kristian Bergum

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what's trending!

PS: I curate this AI newsletter every day for FREE; your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![video-to-gif output image](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F02b48d5d-88e3-49f4-8805-81d10029728b_600x338.gif)