Today’s top AI Highlights:

-

Assembly AI’s new highly accurate, multilingual speech-to-text AI bets Whisper-Large

-

Stability AI releases Stable Audio 2.0 amid the corporate chaos

-

Anthropic research on “many-shot jailbreaking” bypasses the top AI models

-

Brave browser’s AI assistant comes to iOS with a special feature

-

Generate an entire novel with cover art from a single prompt

& so much more!

Read time: 3 mins

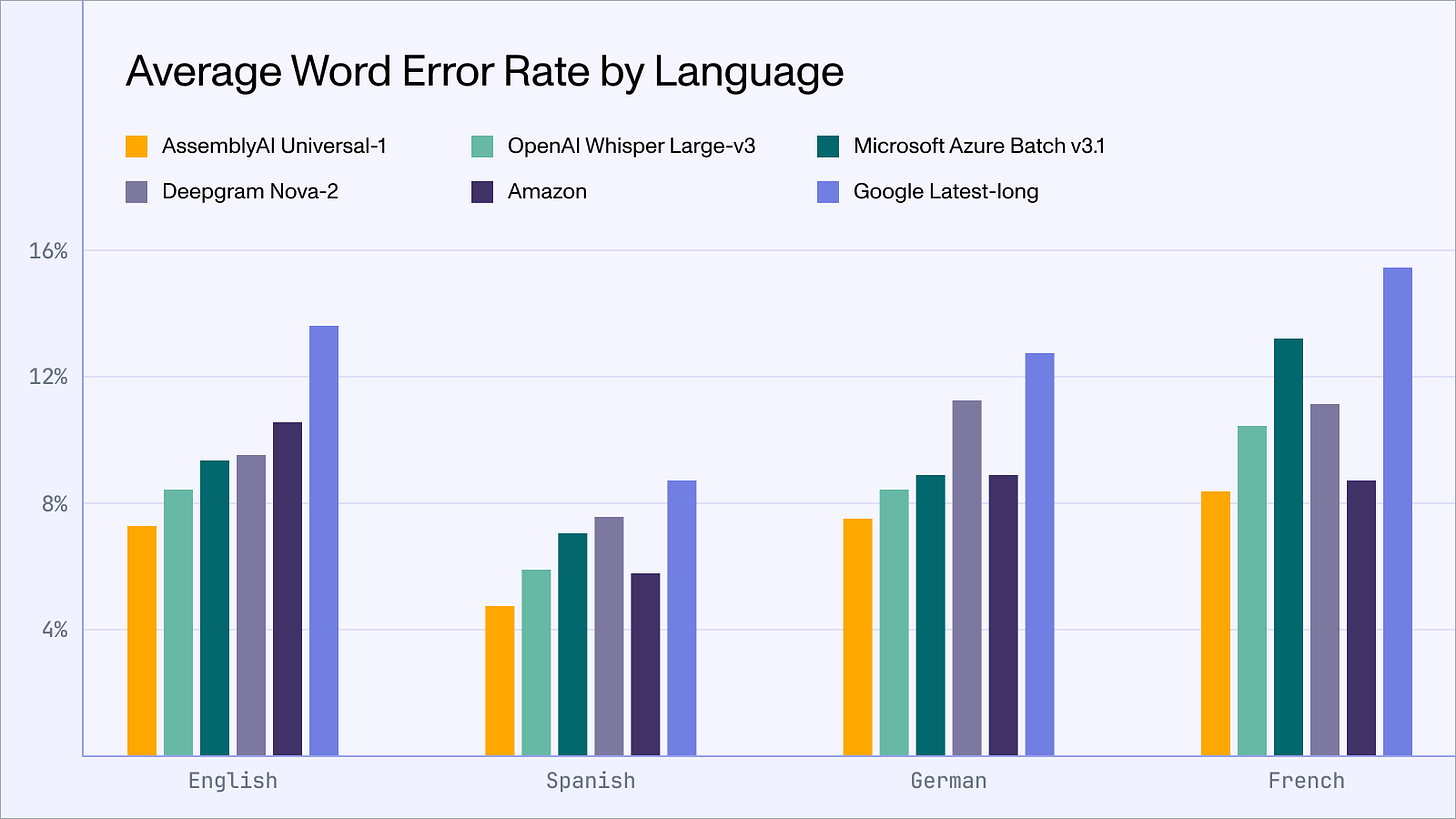

Assembly AI just released a highly accurate, multilingual speech-to-text AI model Universal-1trained on over 12.5 million hours of multilingual audio data. It is trained on four major languages: English, Spanish, French, and German, and shows a high speech-to-text accuracy in almost all conditions, including heavy background noise, accented speech, natural conversations, and changes in language. It beats OpenAI Whisper Large and NVIDIA Canary on several benchmarks.

Key Highlights:

-

Outperforms Rivals: Superior to Whisper Large v3 and Azure Batch v3.1 in robustness and precision.

-

Better Accuracy: Exceeds competitors by over 10% in key language accuracy.

-

Reduced Hallucination: Hallucination rate reduced by 30% on speech data and by 90% on ambient noise over Whisper Large-3.

-

Preferred by Users: Chosen over previous models by 71% of users for better accuracy.

-

Enhanced Timestamp and Diarization: Improves timestamp accuracy by 13% and speaker count by 71%.

-

5x Faster Processing: Achieves transcriptions 5x faster with efficient parallel inference.

-

Multi-Language Transcription: Enables transcription of multiple languages in a single file.

LLMs now can process huge chunks of text with their expanded context window. Gemini 1.5 for example can ingest up to 1 million tokens which would be ~ several long novels! But this opens doors to more potential for jailbreaking them. Researchers at Anthropic have released a paper on how “many-shot jailbreaking,” that is, feeding the LLM a sequence of crafted dialogues, can bypass the AI’s built-in safety checks.

The simplicity of this method, its effectiveness, and its ability to scale to longer context windows make LLMs by companies like Anthropic itself, Google DeepMind, and OpenAI more vulnerable.

Key Highlights:

-

Method Explained: Giving multiple fake conversations into a single prompt to manipulate the AI into answering a final harmful question. This bypasses the safety measures meant to prevent such responses.

-

Vulnerable Models: Larger LLMs, capable of processing vast information, are more susceptible. Their advanced in-context learning capabilities make them more likely to be influenced by faux dialogues.

-

Effectiveness: Its effectiveness increases with the number of dialogues included. Tests show that adding over 256 dialogues can significantly raise the chances of the AI producing an unsafe response.

-

Mitigation: Companies are employing strategies like model fine-tuning and implementing prompt-based intervention to classify and modify prompts before processing. These efforts have seen varied success, reducing the attack success rate from 61% to 2%.

Stability AI has released the latest iteration of its text-to-speech model, Stable Audio 2.0. The new model lets you generate 3-minute-long audio from simple text prompts, that can be downloaded in 44.1 kHz stereo. The length is double the earlier version’s ability that generates 90 seconds-long audio. This extension allows for audio that has a proper intro, progression, and conclusion for a more complete listening experience.

Key Highlights:

-

Audio-Audio: Stable Audio 2.0 also lets you upload an audio sample, be it beats or vocals, which can be morphed into a new audio piece guided by a text prompt.

-

Architecture: It builds on Stable Audio 1.0, launched in September 2023, which is based on the latent diffusion technology. The model uses a highly compressed autoencoder and a diffusion transformer (DiT).

-

DiT processes longer sequences by refining random noise into structured data, identifying patterns and relationships for a deeper and more accurate interpretation of inputs.

-

Training Data: 2.0 is trained on data from AudioSparx which has 800,000+ audio files containing music, sound effects, and single-instrument stems.

-

Availability: The model is free to use and the API will be available soon.

Our Opinion: The audio-to-audio demo didn’t seem very impressive and coherent yet, and the ability to granularly edit the audio is also missing.

Let us know your thoughts in the comments below! 👇

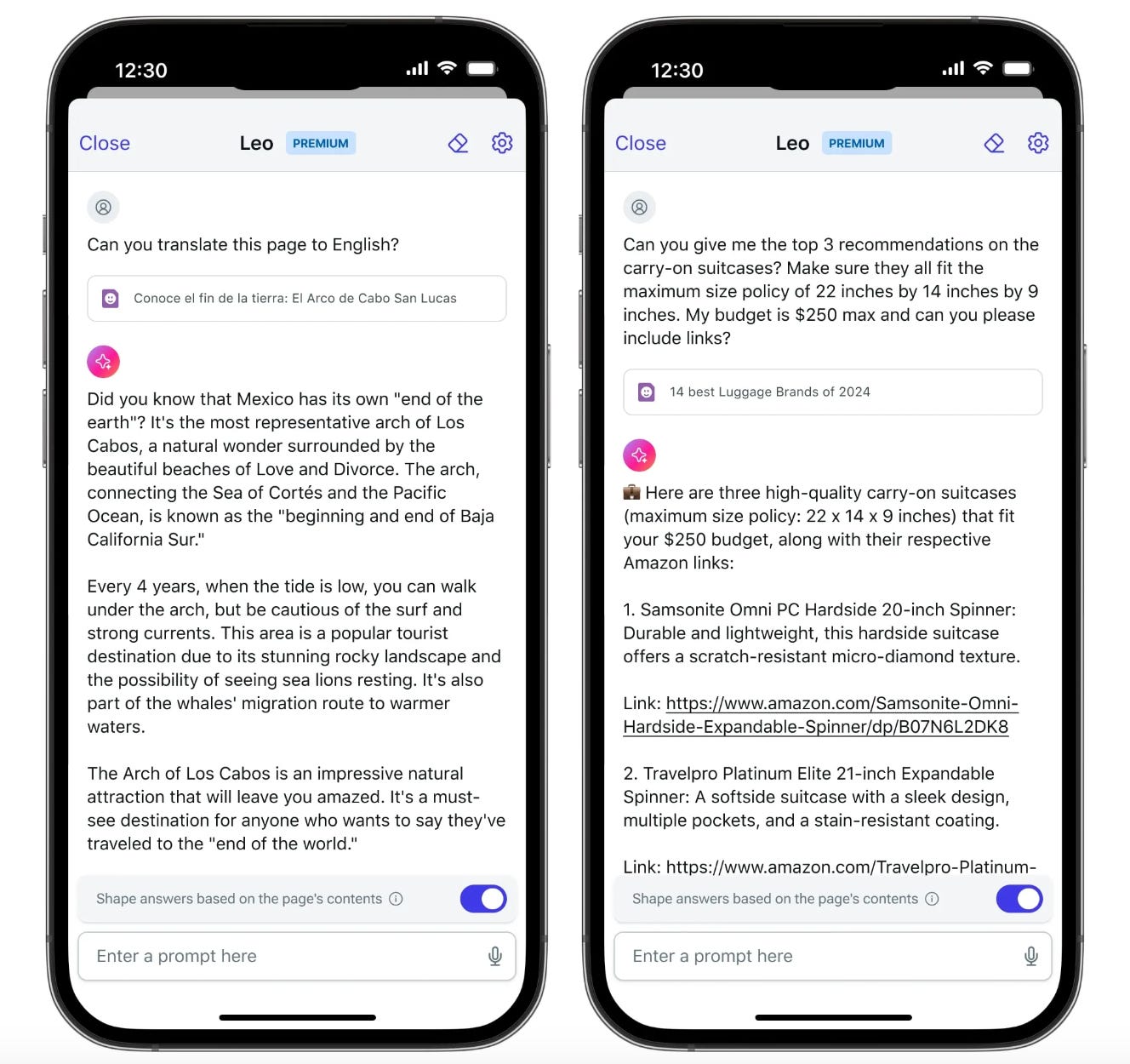

Brave browser’s AI assistant Leo has come to iOSwhere you can ask Leo questions, summarize pages and PDFs, translate pages, create content, and more from the browser address bar. While it is available on Android too, iOS users have an added voice-to-text feature.

Key Highlights:

-

Capabilities: You can summarize webpages and PDFs, ask questions about the content, generate content, translate pages, analyze them, and write and suggest software code for multiple languages.

-

Integrations: Leo is also integrated with Google Docs and Sheets for boosting productivity.

-

Models and Languages: Leo lets you access Mixtral 8x7B (by default), Claude Instant, and Llama 2 13B. You can interact in multiple languages including English, French, German, Italian, and Spanish.

-

Privacy: Leo ensures privacy by processing inputs anonymously, not recording chats or using them for model training.

😍 Enjoying so far, share it with your friends!

-

Claude-Author: Turn a simple prompt into a complete, readable novel, including cover art, packaged as an e-book, in minutes. You can provide an initial prompt and enter how many chapters you want in it, and Claude-Author generates an entire novel using a chain of Anthropic’s Claude 3 Haiku/Opus and Stable Diffusion API calls.

-

Diffuse: Create AI-generated videos with just one selfie! Select your picture, describe what you’re doing and the location in a simple language prompt, that’s it! It’s powered by Huggsfield’s video foundation model (which is still developing) that allows for realistic motion in the videos and high personalization.

-

Pretzel: An opensource & AI-native replacement for Jupyter Notebooks. It features native AI tools integration like GitHub Copilot and LLMs, built-in real-time collaboration akin to Google Docs, and an IDE-like experience with support for both SQL and Python, for reactivity and reproducibility of notebooks.

-

Keywords AI: A unified DevOps platform to build AI applications with all necessary features for development, deployment, and scaling. The platform is tailored for developers working with LLMs, providing a unified interface for various models and enabling quick integration with OpenAI.

-

you have to either care about fertility collapse or believe in AGI coming soon i dont see a third option ~

there -

it’s 2024. i still don’t know what cloudflare does. ~

close up -

It is remarkable how people are willing to turn over their most intimate & personal writing tasks to AI first. People often tell me their first use of ChatGPT was for wedding toasts or a story for their kids or condolence note

I wouldn’t have expected that as the first use case ~

Ethan Mollick

That’s all for today! See you tomorrow with more such AI-filled content.

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!