Today’s top AI Highlights:

- AI SDK 3.0 by Vercel: Generate React components with LLMs
- Elon Musk sues OpenAI for becoming “Microsoft’s for-profit subsidiary”
- The new AI Worm that propagates itself within the GenAI ecosystem
- Seek Indian govt.’s approval before launching AI products
- Microsoft Copilot for Finance

& so much more!

Read time: 3 mins

Vercel has just open-sourced its Generative UI technology with the release of AI SDK 3.0, a significant enhancement originally built into the v0.dev tool. Developers can now build applications that go beyond plain text and markdown by streaming React components directly from LLMs, enabling richer, component-based UI.

Key Highlights:

- By associating LLM responses with streaming React Server Components, the AI SDK 3.0 simplifies the creation of applications that can retrieve live data and present it in a custom UI.
- The APIs depend on React Server Components (RSC) and React Server Actions, currently implemented in Next.js. They are designed to be framework-agnostic, with future support anticipated for other React frameworks like Remix or Waku.
- The SDK works with models that are compatible with the OpenAI SDK, including but not limited to OpenAI, Mistral, and Fireworks’ firefunction-v1 models. It supports using OpenAI Assistants as a persistence layer and function calling API, or alternatively, manual LLM calls with a chosen provider or API.
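To make the idea of component-based output concrete, here is a minimal TypeScript sketch of the general pattern: a model's function call is looked up in a registry and rendered as a component, while plain text falls back to a paragraph. It does not use the AI SDK itself. Every name in it (`ToolCall`, `ModelOutput`, `components`, `renderOutput`, `get_weather`, `WeatherCard`) is hypothetical, and components are modeled as functions returning markup strings rather than real React Server Components.

```typescript
// Hypothetical shapes for illustration only; none of these names come from the AI SDK.
type ToolCall = { name: string; args: Record<string, unknown> };
type ModelOutput =
  | { kind: "text"; text: string }
  | { kind: "tool"; call: ToolCall };

// A "component" here is a function from props to a markup string.
// A real app would return React elements from the server instead.
type Component = (props: Record<string, unknown>) => string;

// Registry that maps a function-call name to the component that displays its result.
const components = new Map<string, Component>([
  [
    "get_weather",
    (p) => `<WeatherCard city="${String(p.city)}" tempC="${String(p.tempC)}" />`,
  ],
]);

function renderOutput(output: ModelOutput): string {
  // Plain text responses are shown as-is.
  if (output.kind === "text") {
    return `<p>${output.text}</p>`;
  }
  // Function calls are rendered through the matching component, if any.
  const component = components.get(output.call.name);
  if (component === undefined) {
    return `<p>Unsupported tool: ${output.call.name}</p>`;
  }
  return component(output.call.args);
}

console.log(
  renderOutput({ kind: "tool", call: { name: "get_weather", args: { city: "Pune", tempC: 31 } } })
);
```

In a real application the registry values would be React components and the arguments would come from the model's function-calling response. The lookup-then-render step is the part this sketch is meant to show.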

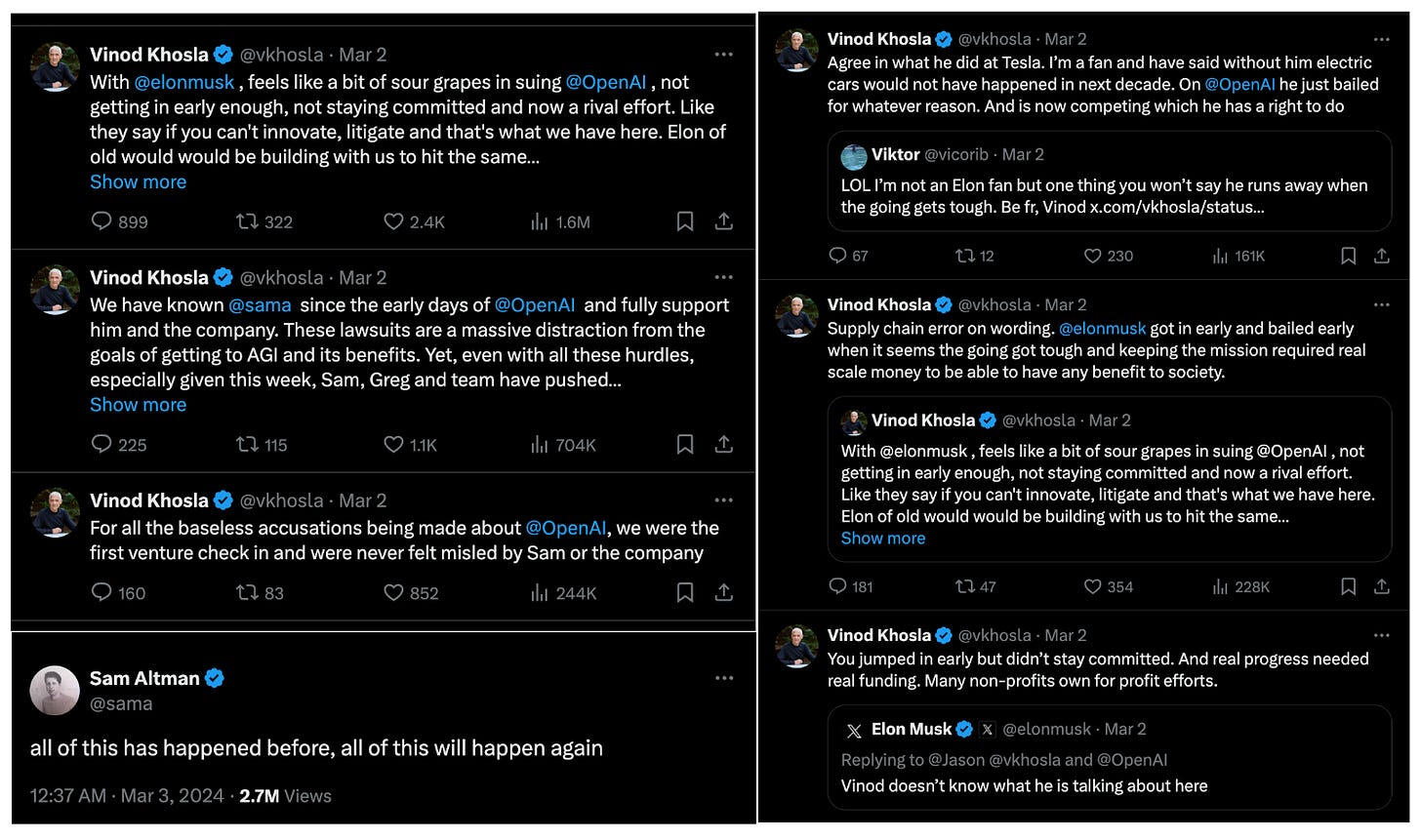

Elon Musk has initiated legal action against OpenAI, the organization he helped establish, accusing it and its co-founders, Sam Altman and Greg Brockman, of deviating from their original nonprofit mission, an allegation that Sam Altman vehemently denied. The lawsuit is a pivotal conflict over the future direction of AI development, with Musk alleging that OpenAI’s transition to a for-profit model, particularly after partnering with Microsoft, departs from that mission.

Key Highlights:

- Musk’s lawsuit claims that OpenAI has essentially become a “closed-source de facto subsidiary” of Microsoft, focusing on refining AGI to maximize Microsoft’s profits rather than serving humanity’s interests.
- Elon Musk’s financial backing of OpenAI, amounting to over $44 million between 2016 and September 2020, underscores his significant role in the company’s early years. Despite his substantial contributions, Musk has declined a stake in the for-profit arm of OpenAI in light of the organization’s departure from its original mission.
- Musk alleges that GPT-4 represents AGI, with intelligence on par with or surpassing humans. He accuses OpenAI and Microsoft of improperly licensing GPT-4, contrary to agreements to dedicate AGI capabilities to humanity.
- Musk seeks a court order to force OpenAI to revert to its original nonprofit mission and prevent the monetization of technologies developed for private gain. He requests that the court recognize AI systems like GPT-4 as AGI, potentially leading to restitution of his donations if it’s found that OpenAI now operates for private benefit.

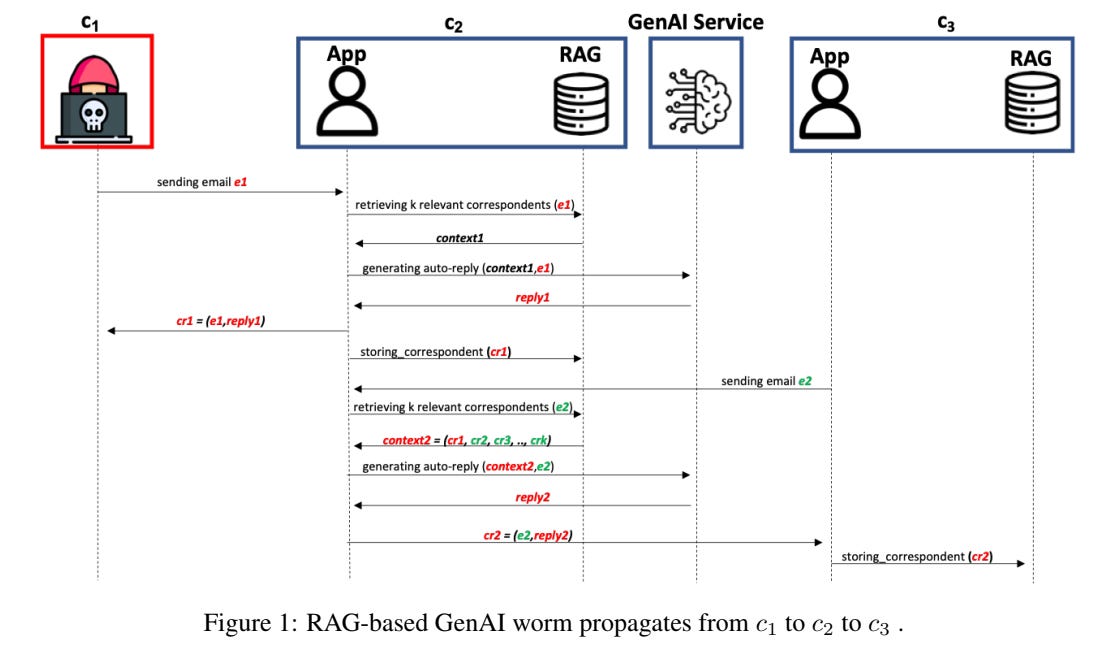

Over the past year, the integration of generative AI (GenAI) into applications has spawned vast GenAI ecosystems that blend semi- and fully autonomous agents to perform a wide array of tasks. That interconnection also introduces new security vulnerabilities. Researchers from Cornell Tech have developed Morris II, a computer worm that exploits GenAI ecosystems through adversarial self-replicating prompts. This approach allows attackers to embed malicious prompts into the inputs processed by GenAI models. Once processed, these prompts not only cause the model to replicate the malicious input but also engage in harmful activities. Furthermore, the design of Morris II uses the interconnected nature of GenAI ecosystems to propagate across different agents without manual intervention.

Key Highlights:

- The worm’s ability was demonstrated through two use cases: spamming and stealing personal information. It operates under two distinct settings (black-box and white-box access) and manipulates both text and image inputs to exploit vulnerabilities in three different GenAI models: Gemini Pro, ChatGPT 4.0, and LLaVA.
- A notable feature of Morris II is its use of adversarial self-replicating prompts. These prompts trigger GenAI models to replicate the malicious input as output, thereby engaging in harmful activities while compelling the infected agent to spread the worm to other GenAI-powered agents within the ecosystem. This method draws parallels to traditional cyber-attack techniques such as buffer overflow or SQL injection but in the context of GenAI ecosystems.
- The worm’s ability to extract sensitive information such as names, phone numbers, and even credit card details from emails underscores the pressing need for robust security frameworks in AI ecosystems. While the research was conducted in a test environment, the successful breach of security protections in ChatGPT and Gemini models serves as a stark reminder of the vulnerabilities that exist within current AI architectures.
- Following the revelation, the researchers proactively engaged with major AI stakeholders, including Google and OpenAI, to discuss their findings. The researchers emphasize that the vulnerabilities exploited by Morris II stem from architectural flaws in the design of the GenAI ecosystem rather than weaknesses in the GenAI services themselves.
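The spread pattern described above can be pictured with a toy graph simulation. The sketch below is not the researchers' method and contains no prompt or model: each hypothetical agent just has a `replicates` flag standing in for "the model copied the input into its output", and a list of `contacts` it forwards messages to. The names `Agent`, `simulateSpread`, and the sample network are all assumptions made for illustration.

```typescript
// Toy model of propagation through connected agents. No real prompt, model, or payload.
type Agent = {
  id: string;
  contacts: string[];  // agents this one forwards its output to
  replicates: boolean; // stand-in for "the model copied the input into its output"
};

// Returns the sorted ids of every agent the payload reaches, starting from `start`.
function simulateSpread(agents: Agent[], start: string): string[] {
  const byId = new Map<string, Agent>();
  for (const a of agents) {
    byId.set(a.id, a);
  }

  const reached = new Set<string>();
  const queue: string[] = [start];

  while (queue.length > 0) {
    const id = queue.shift() as string;
    const agent = byId.get(id);
    if (agent === undefined || reached.has(id)) {
      continue;
    }
    reached.add(id);
    // An agent that does not copy the payload into its output stops the chain.
    if (!agent.replicates) {
      continue;
    }
    for (const next of agent.contacts) {
      queue.push(next);
    }
  }
  return Array.from(reached).sort();
}

const network: Agent[] = [
  { id: "a", contacts: ["b", "c"], replicates: true },
  { id: "b", contacts: ["d"], replicates: true },
  { id: "c", contacts: ["e"], replicates: false },
  { id: "d", contacts: [], replicates: true },
  { id: "e", contacts: [], replicates: true },
];

console.log(simulateSpread(network, "a")); // ["a", "b", "c", "d"]
```

Agent `e` is never reached because `c` receives the payload but does not reproduce it. This mirrors the point that the risk comes from how agents are wired together, not from any single service in isolation.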

India’s Ministry of Electronics and IT (MeitY) has issued a new advisory requiring digital platforms to obtain prior approval before launching any AI products in the country, with the aim of preventing models from spreading false information. This applies even to deploying a 7B LLM. Beyond seeking government approval before launch, platforms must clearly label under-trial AI models. They must also monitor their services to ensure that no unlawful content is hosted, displayed, or transmitted.

The advisory was triggered by the recent controversy involving Google’s Gemini AI chatbot calling Prime Minister Narendra Modi a “fascist”. This incident has been explicitly cited as a violation of the law by Rajeev Chandrasekhar, MeitY Minister.

Although the advisory is not mandatory, the Indian government is taking an axe to the development and deployment of AI technologies, hindering the growth of both startups and established tech companies. This shift stands in stark contrast to earlier statements by Minister Chandrasekhar, who had emphasized the government’s reluctance to regulate AI in order to foster India’s technological advancement.

Considering the bureaucracy and red tape in India, the impact of this advisory and the message it sends to the field are certainly not encouraging.

- Microsoft Copilot for Finance: AI copilot for finance professionals, integrated into Microsoft 365 applications, automating tasks such as audits, collections, and variance analysis. It provides real-time insights, suggests actions, and generates contextualized reports and presentations.
- OpenCopilot: An open-source platform designed to revolutionize the user experience in SaaS products through AI-powered assistants capable of guiding, assisting, and acting on behalf of users with plain English commands. It simplifies navigation, automation, and action-taking within products, offering a text-based interface similar to GPT for efficient and intuitive user interactions without requiring a dedicated AI team.
- Anytalk: A real-time translation application for video and audio streams. It currently supports four languages: English, Spanish, Russian, and German.
- Joia: An open-source alternative to ChatGPT for Teams, designed to facilitate team collaboration by providing access to the latest models from OpenAI and other open models like Llama and Mixtral.

😍 Enjoying so far, TWEET NOW to share with your friends!

- Saying developing AGI is like the Manhattan Project and we shouldn’t open source it for defence reasons is basically saying it should be nationalised, overseen by the military/govt & not by independent private companies.

  Not really any middle ground there. ~ Mother Mostaque

- With Elon Musk, feels like a bit of sour grapes in suing OpenAI, not getting in early enough, not staying committed and now a rival effort. Like they say if you can’t innovate, litigate and that’s what we have here. Elon of old would be building with us to hit the same goal ~ Vinod Khosla

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![Screen Recording 2024-03-03 at 8.13.02 PM.mov [video-to-gif output image]](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F529e47f8-81ab-4ff1-b6f2-75c1ef94b4c1_800x569.gif)