Today’s top AI Highlights:

- Alibaba brings portraits to life with an image and an audio clip

- Ideogram’s text-to-image model with the most advanced text rendering

- Meta to release Llama 3 in July 2024

- The era of 1-bit LLMs: all LLMs are in 1.58-bits

- Control every aspect of your video using AI, from ideation to final edits

& so much more!

Read time: 3 mins

Generative AI for digital art is seeing a run of major releases and research. With OpenAI releasing Sora and Pika Labs releasing Lip Sync yesterday, here is another framework that creates portrait videos of speaking characters with an unmatched level of coherence. Researchers at Alibaba have introduced EMO (Emote Portrait Alive), a framework that generates a video from a single portrait image and a vocal audio clip. The character comes alive, talking or singing, with accurate lip sync, poses, and very human-like expressions.

Key Highlights:

- It can generate videos of any duration, depending on the length of the audio file, so output is no longer limited to short clips.

- The framework can animate both spoken and sung audio, and bring to life portraits from different historical periods, paintings, 3D models, and AI-generated content. It also enables cross-actor performances, allowing movie characters to deliver monologues or performances in other languages and styles.

- The method supports audio in various languages, recognizing tonal variations to generate expression-rich avatars. EMO keeps fast-paced rhythms in sync with dynamic character animation, so it can handle rapid lyric delivery.

Check out all the demo videos; they are really interesting. Notice the expressions, eyebrows, head poses, earrings, and even the throat in this one!

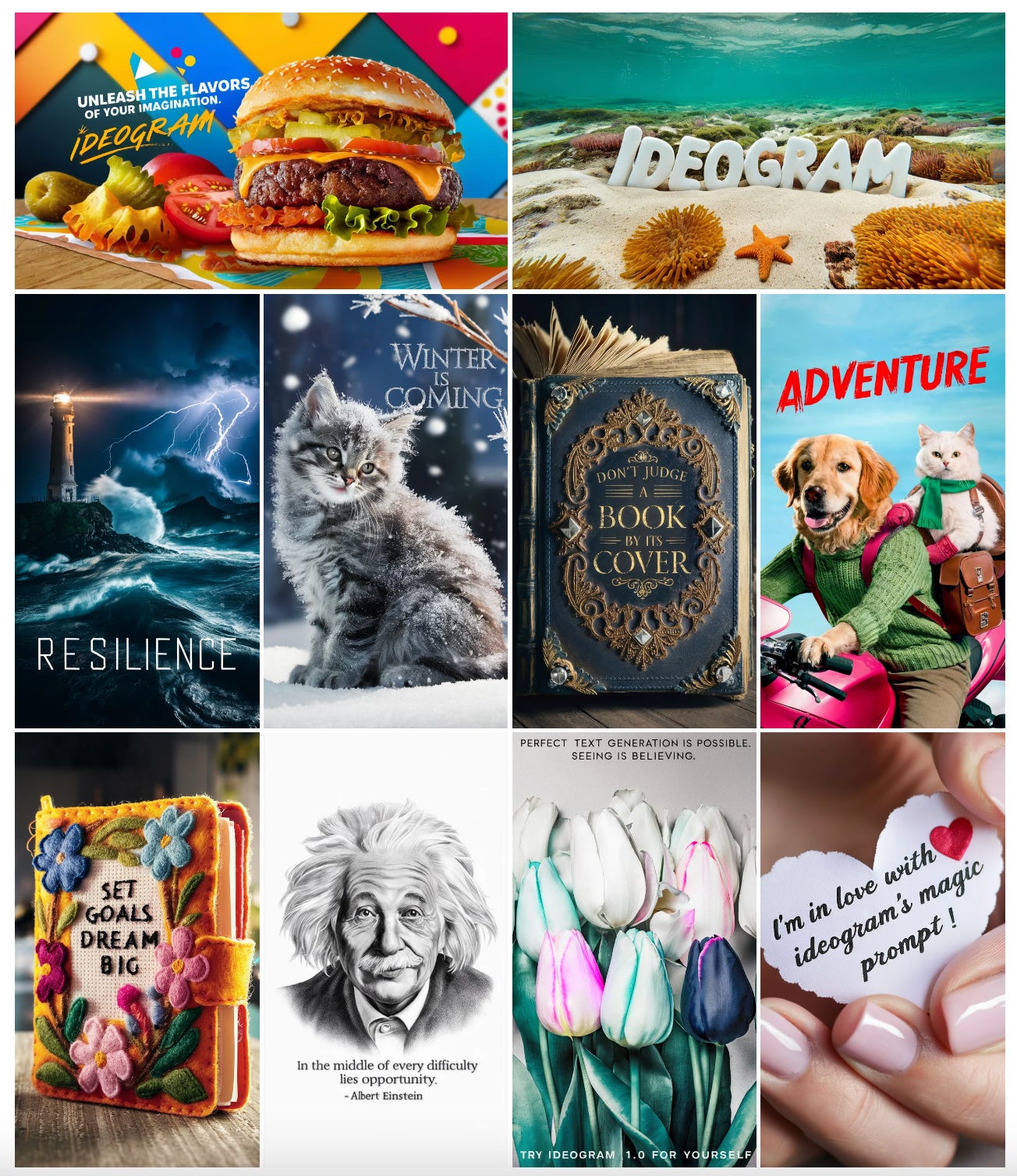

While we are on the subject of generative AI in digital art, here is another text-to-image model: Ideogram 1.0 by Ideogram. The model stands out for its state-of-the-art text rendering, unprecedented photorealism, and adherence to prompts, even when they are long or complex. It supports a variety of aspect ratios and styles, including photorealistic and artistic outputs, offering flexibility and creativity in image generation.

Key Highlights:

- Ideogram 1.0 cuts text rendering error rates nearly in half compared to existing models. This makes it practical to create personalized messages, memes, posters, T-shirt designs, birthday cards, and logos with accurate text.

- The platform also comes with Magic Prompt, your personal creative assistant that automatically enhances, extends, and translates prompts to produce more beautiful and creative images.

- When assessed against major competitors like DALL·E 3 and Midjourney V6, Ideogram 1.0 was preferred by human raters on prompt alignment, image coherence, overall preference, and text rendering quality.

Meta is reportedly set to release Llama 3, the latest version of its LLM, this coming July. The aim is to improve responses by “loosening up” the model so that it better understands the nuances of the language humans use. For instance, Llama 2 avoids answering questions it considers controversial, such as how to prank a friend, win a war, or kill a car engine. Llama 3 would be able to understand that a question such as ‘how to kill a vehicle’s engine’ is asking how to shut the engine off rather than how to end its life.

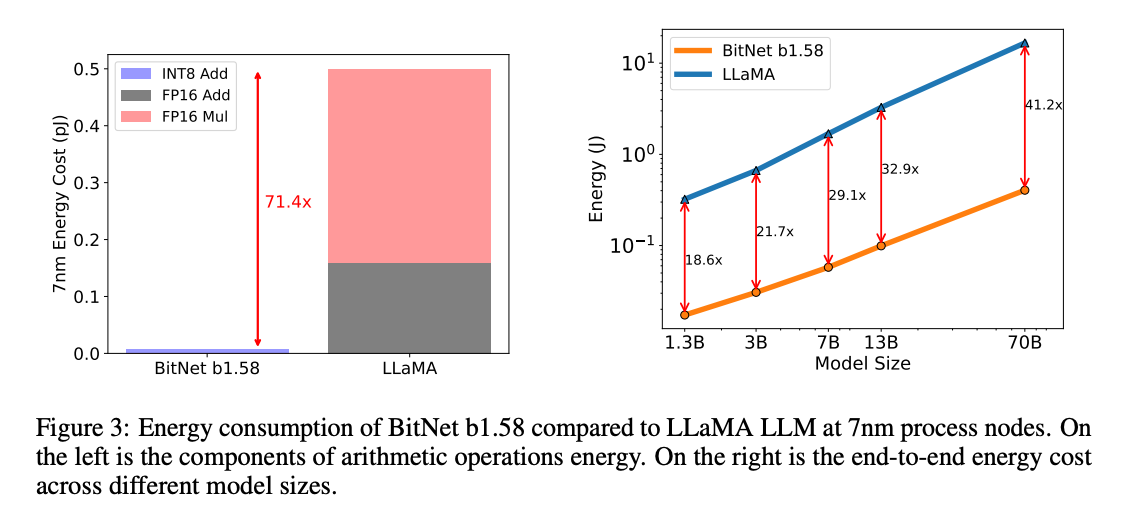

The LLM world is shifting toward models that are more sustainable, efficient, and easier to deploy. Microsoft has released BitNet b1.58, a model that operates with just 1.58 bits per parameter (a ‘bit’ is the basic unit of information in computing), showcasing a method to drastically reduce computational resources while maintaining high performance. The model is able to retain the capabilities of traditional 16-bit models with far less energy and memory use.

Key Highlights:

- BitNet b1.58 matches full-precision models in perplexity and end-task performance, starting from a model size of 3 billion parameters. It does so with a notably smaller memory footprint, making it significantly more efficient in terms of latency, throughput, and energy consumption.

- With parameters encoded in a simple ternary system of {-1, 0, 1}, BitNet b1.58 minimizes the need for costly floating-point operations. Matrix multiplication reduces largely to integer addition, which not only lowers energy costs but also paves the way for faster computation, addressing the power limitations that often bottleneck performance in many chips.

- The 1.58-bit configuration significantly decreases the model’s memory requirements, easing the transfer of parameters into on-chip accelerator memory such as SRAM. This reduction in memory and bandwidth requirements could lead to the development of specialized hardware optimized to run these more efficient models.
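To make the ternary idea concrete, here is a minimal sketch in plain Python. It assumes an absmean-style quantizer (scale by the mean absolute weight, round, then clip to {-1, 0, 1}); the function names `absmean_ternarize` and `ternary_matvec` are made up for illustration, and the sketch leaves out everything else a real model needs, such as training and activation quantization. It also shows where the "1.58" comes from: a weight with three possible values carries log2(3) ≈ 1.58 bits of information.

```python
import math


def absmean_ternarize(weights, eps=1e-8):
    """Map a 2-D list of float weights to {-1, 0, 1} plus one shared scale.

    Illustrative helper (hypothetical name): divide by the mean absolute
    weight, round to the nearest integer, then clip to [-1, 1].
    """
    flat = [abs(w) for row in weights for w in row]
    gamma = sum(flat) / len(flat)  # mean absolute value of all weights
    ternary = [
        [max(-1, min(1, round(w / (gamma + eps)))) for w in row]
        for row in weights
    ]
    return ternary, gamma


def ternary_matvec(ternary, x):
    """Matrix-vector product that uses only additions and subtractions."""
    out = []
    for row in ternary:
        acc = 0.0
        for t, xi in zip(row, x):
            if t == 1:
                acc += xi      # weight +1: add the input
            elif t == -1:
                acc -= xi      # weight -1: subtract the input
            # weight 0: skip the input entirely
        out.append(acc)
    return out


# Three possible values per weight -> log2(3) bits, roughly 1.58.
bits_per_weight = math.log2(3)

weights = [[0.9, -0.05, -1.2], [0.1, 0.6, -0.4]]
ternary, gamma = absmean_ternarize(weights)
# Rescale by gamma once at the end to approximate the original product.
y = [gamma * v for v in ternary_matvec(ternary, [1.0, 2.0, 3.0])]
```

Note that the inner loop never multiplies: each weight either adds the input, subtracts it, or skips it, and the single scale `gamma` is applied once per output.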

AI Learning Hour 📚

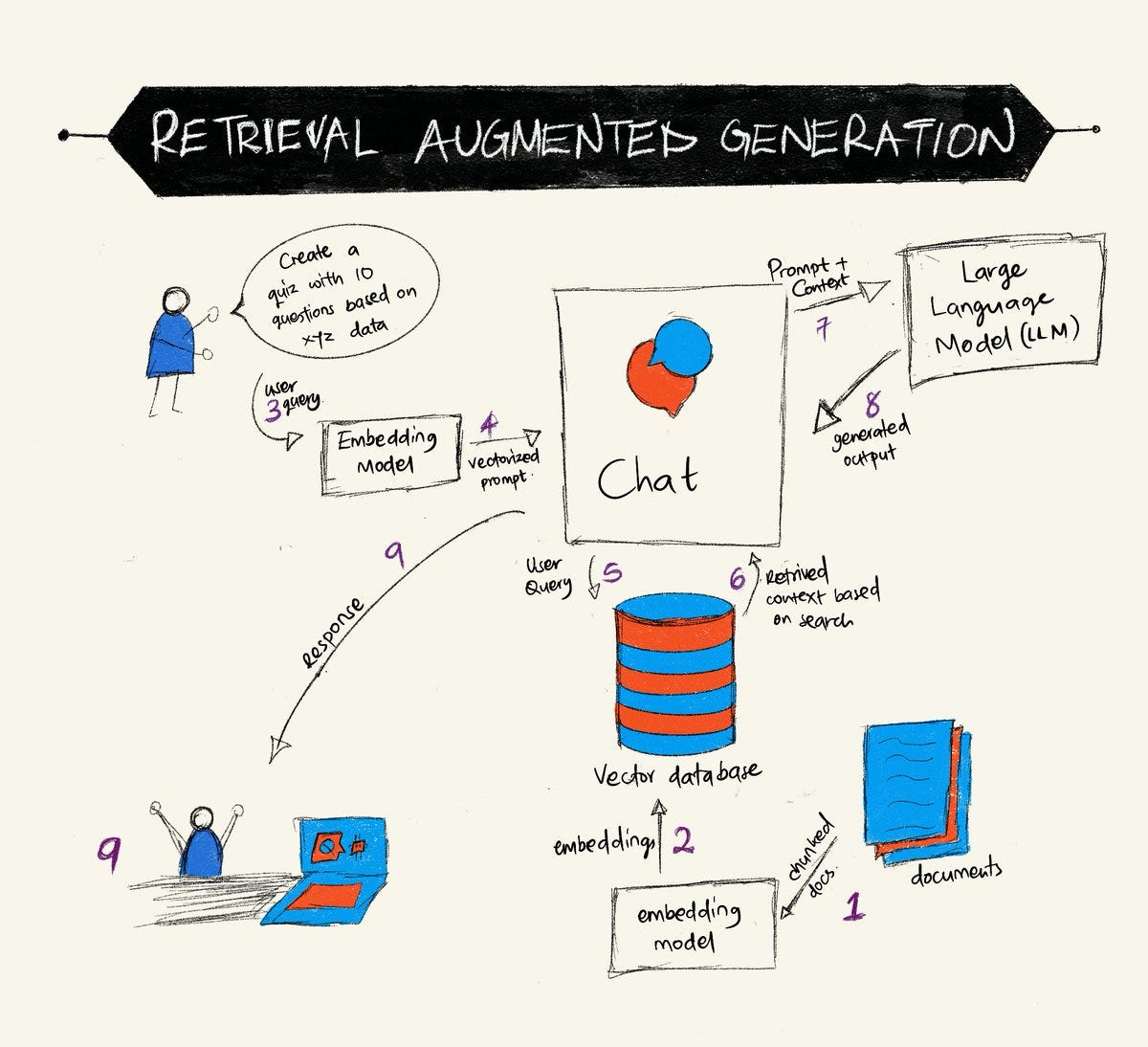

Retrieval-augmented generation (RAG) is a significant advancement in the generative AI field as it enhances the quality and relevance of language model outputs by incorporating external information from a vast corpus of data. Learn more about it along with a step-by-step tutorial: Click Here
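As a rough illustration of the idea (not the linked tutorial's code), the sketch below retrieves the most relevant snippet from a tiny in-memory corpus using word-overlap cosine similarity and prepends it to the question. The helper names (`retrieve`, `build_prompt`), the toy corpus, and the prompt layout are all assumptions; a real pipeline would typically use embeddings and a vector store, and would send the prompt to a language model.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, corpus, k=1):
    """Return the k documents most similar to the query (hypothetical helper)."""
    q = Counter(tokenize(query))
    ranked = sorted(
        corpus,
        key=lambda doc: cosine(q, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, corpus, k=1):
    """Augment the question with retrieved context before generation."""
    context = "\n".join(retrieve(query, corpus, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Toy corpus built from items in this issue.
corpus = [
    "BitNet b1.58 stores each weight as one of three values: -1, 0, or 1.",
    "EMO animates a portrait image from an audio clip.",
    "Ideogram 1.0 is a text-to-image model focused on text rendering.",
]
prompt = build_prompt("Which model animates a portrait from audio?", corpus)
```

The resulting `prompt` string is what would be passed to the language model, so the answer can draw on the retrieved text instead of relying only on what the model memorized.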

- LTX Studio: Developed by Lightricks, it is a cutting-edge, AI-driven video generation platform that revolutionizes storytelling by enabling users to seamlessly control every stage of video production, from concept to completion, using advanced 3D technology and language models. It features intuitive tools for editing, storyboarding, scene customization, and real-time adjustments, simplifying the creative process for filmmakers.

- yourwAI: An AI-powered platform to match you with your ideal career path by analyzing your personality, skills, passions, and dreams. yourwAI offers personalized career path recommendations and actionable insights, available in both lite and in-depth reports, to help you discover your potential at all stages of life.

- Magicreply: Engage with your audience and grow your social media presence with AI-generated human-like replies with a single click. Works with X and LinkedIn.

- Reducto’s Document API: Solves the challenges of document ingestion for LLM workflows by accurately processing complex PDF layouts, including text, tables, and images, and converting them into structured data. It improves the efficiency and quality of document processing, enhancing the performance of RAG pipelines in machine learning projects.

😍 Enjoying so far, TWEET NOW to share with your friends!

- >be like satya

  > invests in openai, sells azure

  > gives free gpt4 & makes Google dance

  > invests in inflection ai (AI safety guy)

  > partners up with 🤗, sells azure

  > invests in slm (phi), sells azure

  > hosts open llms & sells azure

  > invests in anti-openai mistral

  > sells azure

  ~ 1LittleCoder

- 2022:

  – oh nooo!!! you can’t run language models on cpu! you need an expensive nvidia GPU and special CUDA kernels and–

  – *one bulgarian alpha chad sits down and writes some c++ code to run LLMs on cpu*

  – code works fine (don’t need a GPU), becomes llama.cpp

  2023:

  – oh noo!! you can’t train language models without a special reward model and RLHF! you need to be applying policy gradient and tuning hyperparameters–

  – *a couple of stanford phd students sit down and work out the math*

  – math works fine (don’t need RL), becomes DPO

  2024:

  ???

  ~ jack morris

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![talk_sora.mp4](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F2acad7e4-66c7-4f60-a5d1-64f0ee322f62_600x337.gif)

![ssstwitter.com_1709155860983.mp4](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fe67b11c0-99d4-4f58-b3cd-fd843f43aaff_800x450.gif)