Today’s top AI Highlights:

- Apple releases the most comprehensive paper yet on its new multimodal LLMs
- Clone Robotics builds robot parts with human-like muscles
- Amazon creates product listings from a product's URL
- xAI's Grok-1 is not fine-tuned for any particular task
- Transfer ANY image style to another image with Magnific AI

& so much more!

Read time: 3 mins

As Elon Musk announced last week, xAI has open-sourced Grok-1 under the Apache 2.0 license. Here are all the details:

- 314B-parameter Mixture-of-Experts (MoE) model
- Uses 8 experts with 2 active per token, yielding 86B active parameters (Source)
- Base model trained on a large amount of text data, not fine-tuned for any particular task
- Trained from scratch by xAI in October 2023 using a custom training stack built on top of JAX and Rust
- The MoE layer implementation is not efficient; it was chosen to avoid the need for custom kernels when validating the model's correctness
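To make "8 experts with 2 active" concrete, here is a minimal top-2 routing sketch in plain Python for a single token. It is not xAI's code: the router, the toy experts, and the choice to renormalize gate weights over only the two selected experts are illustrative assumptions. The point it shows is that only 2 of the 8 expert functions run per token, which is why the active parameter count (86B) is much smaller than the total (314B).

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]

def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top2_moe(x: Vector, router: List[Vector], experts: List[Expert]) -> Tuple[Vector, List[int]]:
    """Send one token through its 2 highest-scoring experts and mix their outputs."""
    # One router logit per expert (a bias-free linear layer).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # Keep only the two best-scoring experts for this token.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:2]
    # Gate weights, renormalized over the chosen experts only (an assumption).
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # the other 6 experts are never evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

# Toy usage: 8 "experts" that just scale their input, and a router that favors higher indices.
experts = [(lambda v, s=i + 1: [s * vi for vi in v]) for i in range(8)]
router = [[float(i), 0.0] for i in range(8)]
out, chosen = top2_moe([1.0, 0.0], router, experts)
print(chosen)  # [7, 6]
```

Production MoE layers do the same selection for whole batches of tokens with tensor operations, which is where efficient custom kernels usually come in.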

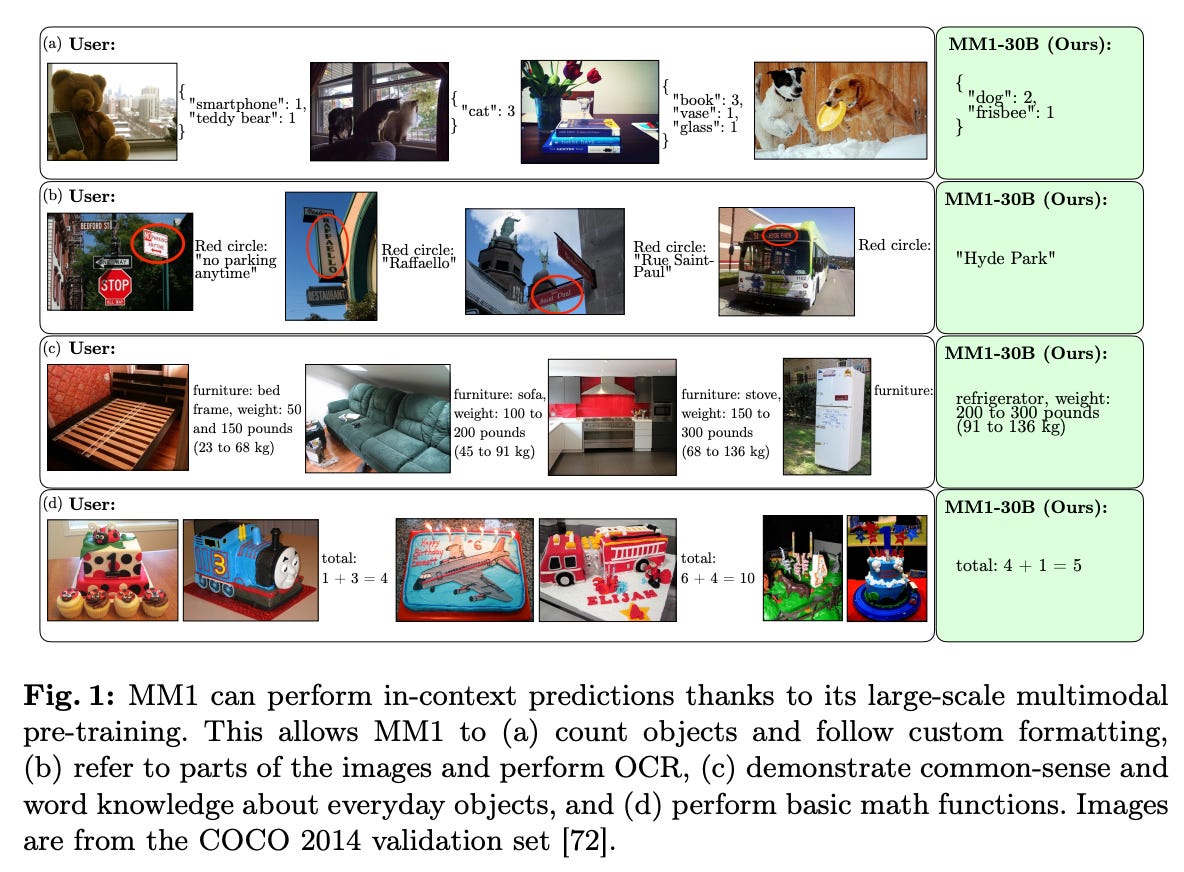

Apple has released MM1, a series of multimodal AI models that scale up to 30B parameters. The most interesting part of this release is how comprehensive the MM1 technical paper is: the team shares insightful findings on everything from pre-training to fine-tuning, architecture components, and data selection strategies. Here are some key findings and highlights from the paper:

- The research emphasizes the importance of a diverse pre-training data mix of image-caption, interleaved image-text, and text-only data. This mix is critical for excellent few-shot results across benchmarks and makes the model more versatile across different types of information.
- The choice of image encoder, including factors like image resolution and image token count, greatly affects the model's performance. Surprisingly, the architecture of the vision-language connector matters much less.
- MM1 models come in 3B, 7B, and 30B parameter sizes, with both dense and mixture-of-experts (MoE) variants. The MoE variants scale up the dense model by adding more experts to the FFN layers of the language model, which the study highlights as a way to scale further without sacrificing inference speed.
- A broad spectrum of datasets, ranging from instruction-response pairs to academic and text-only datasets, was used for fine-tuning. Techniques that support high-resolution image inputs, such as positional embedding interpolation and sub-image decomposition, were vital for better fine-tuning performance.
- In various few-shot settings, MM1 models outperformed Emu2, Flamingo, and IDEFICS on tasks like captioning, multi-image reasoning, and visual QA.
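Sub-image decomposition is easiest to see with numbers. The sketch below plans the inputs for an image encoder that only accepts a fixed resolution: resize the image to a multiple of that resolution, cut it into a grid of full-resolution crops, and add one downsampled overview of the whole image. The 672-pixel input size and 2x2 grid are illustrative assumptions, not a statement of MM1's exact configuration.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels

def sub_image_boxes(size: int, grid: int) -> List[Box]:
    """Cut a square image of side `size` into grid x grid equal, non-overlapping crops."""
    tile = size // grid
    return [
        (col * tile, row * tile, (col + 1) * tile, (row + 1) * tile)
        for row in range(grid)
        for col in range(grid)
    ]

def plan_views(encoder_res: int, grid: int = 2) -> Dict[str, object]:
    """Plan the views for an encoder that only accepts encoder_res x encoder_res images."""
    # Resize the original so that every crop lands exactly at the encoder's resolution.
    resize_to = encoder_res * grid
    crops = sub_image_boxes(resize_to, grid)
    return {
        "resize_to": (resize_to, resize_to),
        "overview": (encoder_res, encoder_res),  # whole image, downsampled once
        "crops": crops,
        "num_views": 1 + len(crops),  # overview + detail crops
    }

plan = plan_views(encoder_res=672)
print(plan["resize_to"], plan["num_views"])  # (1344, 1344) 5
```

Each extra view is encoded separately and adds its own set of image tokens, so higher effective resolution comes at the cost of a longer input sequence, which connects to the paper's finding that image token count matters.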

Have you ever noticed how intricately our muscles and tendons move when we grab a cup of coffee or type out a message? This marvel of nature, blending strength and precision, has long been both an inspiration and a challenge for roboticists. Clone Robotics, a Poland-based startup founded in November 2021, has quietly released Clone Hand and Clone Torso, which mimic a musculoskeletal system using hydraulic tendon muscles. The system aims to replicate the fluidity and versatility of human movement in robots.

Key Highlights:

- The hydraulic tendon-muscle system uses liquid-filled tubes to mimic human muscles and tendons. By controlling the flow of liquid into these tubes, the robot can perform actions like grabbing objects, much as our muscles do when we move our hands.
- The Clone Hand matches the size and characteristics of a human hand. Equipped with 37 muscles and 24 degrees of freedom, it can grasp and manipulate objects with near-human capability.
- It weighs only 0.75 kg and is 22 inches long overall, yet in a demo it lifted 41 lbs with ease. The hand combines dexterity and strength while remaining cost-effective.
- Clone Torso uses the same musculoskeletal technology, scaling the design from the hand to a complete torso with a rigid spine, an actuated neck, and two fully actuated arms. It features a natural skeleton design and carries a Raspberry Pi 4B in the skull for network connectivity and control via high-level software.
- A compact, battery-powered hydraulic pump, comparable in volume to the human heart, pressurizes the artificial muscles and fits inside the torso.
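The demo figures above mix metric and imperial units. Converting them to one system with the standard conversion factors puts the claim in perspective: the hand lifted roughly 25 times its own weight. The snippet uses only the numbers quoted above.

```python
# Unit conversions for the Clone Hand demo figures quoted above.
LB_TO_KG = 0.45359237  # exact: the international pound is defined as 0.45359237 kg
IN_TO_CM = 2.54        # exact: the international inch is defined as 2.54 cm

hand_mass_kg = 0.75
hand_length_in = 22
demo_payload_lb = 41

payload_kg = demo_payload_lb * LB_TO_KG
length_cm = hand_length_in * IN_TO_CM
payload_to_weight = payload_kg / hand_mass_kg  # how many times its own weight it lifted

print(f"Payload: {payload_kg:.1f} kg")                 # Payload: 18.6 kg
print(f"Length: {length_cm:.1f} cm")                   # Length: 55.9 cm
print(f"Payload-to-weight: {payload_to_weight:.1f}x")  # Payload-to-weight: 24.8x
```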

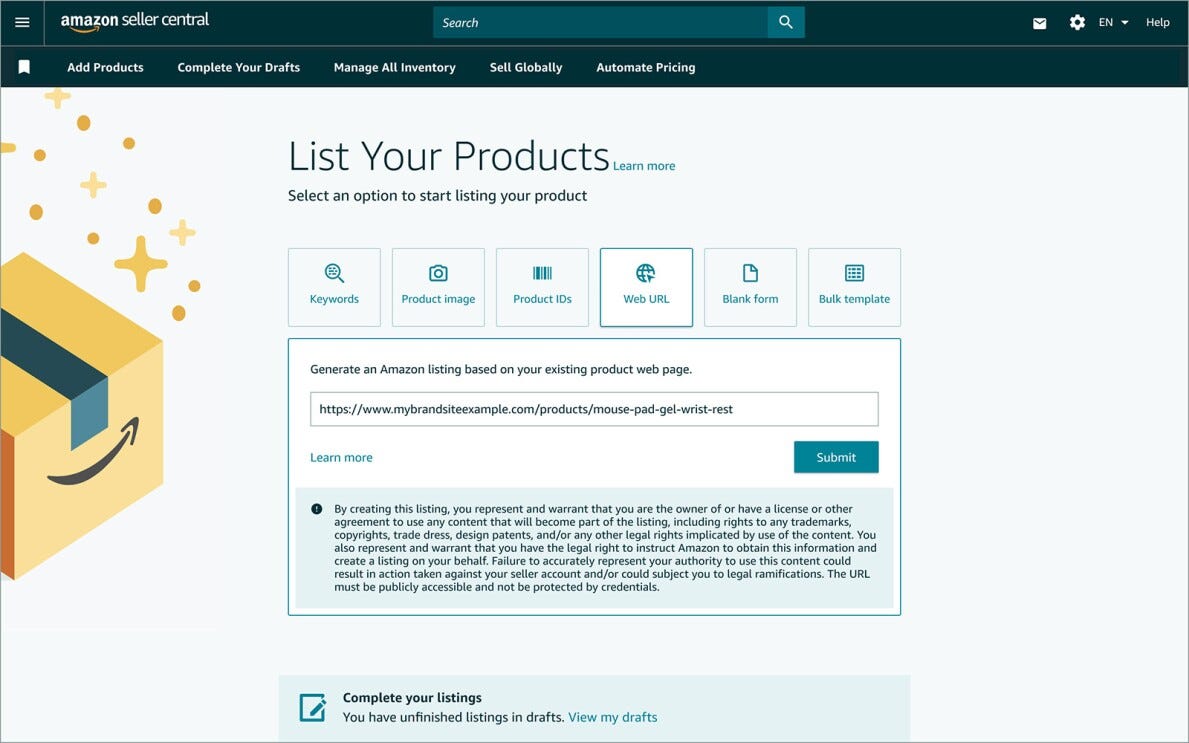

Amazon is using generative AI to make product listing easier for sellers. The company first launched a feature that let sellers describe their product in a few words, which generative AI then turned into product titles, descriptions, and details. This evolved into letting sellers upload an image of their product, with AI generating the title, description, and attributes such as color and keywords to improve product discoverability.

Amazon is now launching a feature that lets sellers reuse existing listings from their own websites by giving Amazon a URL; the AI then creates high-quality listings for Amazon's store. The feature is rolling out in the U.S. and should save sellers time and effort while making listings more appealing to customers and driving sales.
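The announcement doesn't describe how Amazon reads a seller's page. As a rough, hypothetical sketch of what a first step could look like, the parser below pulls draft fields out of a product page's HTML using only the standard library: the page `<title>`, the Open Graph `og:title`, `og:description`, and `og:image` tags, and the classic `description` meta tag. The function name, field names, and fallback order are our assumptions; fetching the page and the generative rewriting step are not shown.

```python
from html.parser import HTMLParser
from typing import Dict, Optional

class ProductPageParser(HTMLParser):
    """Collects the <title> text and every <meta> tag's name/property -> content pair."""

    def __init__(self) -> None:
        super().__init__()
        self.title: Optional[str] = None
        self.meta: Dict[str, str] = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            # Open Graph tags use `property`; the classic description tag uses `name`.
            key = attributes.get("property") or attributes.get("name")
            content = attributes.get("content")
            if key and content:
                self.meta[key] = content

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title and data.strip():
            self.title = data.strip()

def extract_listing_fields(html: str) -> Dict[str, Optional[str]]:
    """Hypothetical first step of a URL-to-listing flow: pull draft fields from page HTML."""
    parser = ProductPageParser()
    parser.feed(html)
    parser.close()
    return {
        # Prefer Open Graph values, fall back to the plain title/description tags.
        "title": parser.meta.get("og:title") or parser.title,
        "description": parser.meta.get("og:description") or parser.meta.get("description"),
        "image": parser.meta.get("og:image"),
    }
```

In a full pipeline, these draft fields (plus the visible page text) would be handed to a generative model that rewrites them into the marketplace's listing format.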

- Magnific AI's Style Transfer: Apply the visual style of one image to another to turn photos into storyboards or give them a different artistic aesthetic. Transfer ANY style to a given image using a reference image and a text prompt.
- Langmagic: Learn any language with native content. It uses native YouTube videos as its primary content source, immersing you in real-life contexts and authentic language use. Its ChatGPT integration adds interactive features like in-context explanations, prompts, and a dictionary.
- MindGraph: A proof-of-concept, open-source project for creating and querying large knowledge graphs using AI. It's designed as an API-first, graph-based system for natural-language interactions and serves as a template for building customizable CRM solutions, with a focus on ease of integration and extensibility.
- Exa: Retrieves the best content on the web using embeddings-based search, which goes beyond traditional keyword matching by capturing the meaning behind queries. Developers can integrate powerful web search into their applications, with features like instant page-content retrieval, flexible query handling, customizable search parameters, and similarity search.
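Embeddings-based search replaces keyword matching with vector similarity: the query and every document are mapped to vectors, and results are ranked by how closely those vectors point in the same direction. The sketch below shows only that ranking step, using cosine similarity over hand-made toy vectors. It does not use or describe Exa's actual API, models, or index; the document titles and vectors are invented for illustration.

```python
import math
from typing import Dict, List, Tuple

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: compares vector direction, not exact keyword overlap."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def semantic_search(query: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Rank every document by similarity to the query vector and return the top k."""
    scored = [(doc, cosine(query, vec)) for doc, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hand-made 3-d "embeddings"; a real system would get these from an embedding model.
index = {
    "Intro to transformers": [0.9, 0.1, 0.0],
    "Sourdough basics": [0.0, 1.0, 0.1],
    "How attention works in LLMs": [0.7, 0.3, 0.0],
}
query = [1.0, 0.0, 0.1]  # stands in for an embedded query like "how do language models work?"
for doc, score in semantic_search(query, index):
    print(f"{score:.3f}  {doc}")
```

At web scale, the brute-force loop over every document would be replaced by an approximate nearest-neighbor index.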

😍 Enjoying it so far? TWEET NOW to share it with your friends!

A friend of mine suggested that I clarify the nature of the danger of woke AI, especially forced diversity.

If an AI is programmed to push for diversity at all costs, as Google Gemini was, then it will do whatever it can to cause that outcome, potentially even killing people.

~ Elon Musk

Gemini, Gemini 1.5, Mixtral and Claude have all tried to beat GPT-4

No one has succeeded except [for] Claude, which inches it out.

I suspect OpenAI themselves can't [beat] GPT-4 significantly.

It becomes harder and harder to squeeze performance from ML models anyways, so this is to be expected

TLDR; GPT-5 may not be such a big deal

~ Bindu Reddy

That’s all for today! See you tomorrow with more such AI-filled content.

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![rotate.mp4](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F8cd27a6b-3afb-4a3a-b460-7bdec647f73f_600x600.gif)

![ssstwitter.com_1710731266631.mp4](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F47090ee0-9188-4093-82b9-7893df6f3018_600x367.gif)