Today’s top AI Highlights:

- Turn any image into video games with Google DeepMind’s Genie

- Mistral Large – the world’s second-ranked model after GPT-4

- Deepgram’s single API for multiple audio capabilities

- New code assistant wins over GitHub Copilot on speed and context

& so much more!

Read time: 3 mins

Imagine transforming any image – a sketch, a photograph, or even a text-generated scene – into a fully interactive, playable world. That’s exactly what Genie does. Genie by Google DeepMind is a foundation world model that can generate endless interactive, action-controllable 2D worlds from just about any image you give it. It was trained on a huge number of internet videos without any action labels, and it can turn drawings, photos, and digital images into interactive environments.

Key Highlights:

- One of the coolest things about Genie is how it learns to figure out which parts of an image can be moved or interacted with, and what kind of actions can happen, just from watching videos. It doesn’t need the videos to be labeled with specific actions. It can take any new image it hasn’t seen before and apply what it’s learned to make those parts of the image interactive.

- Genie is changing the game by letting anyone create a whole interactive world from a single image. Whether you’re using a cutting-edge tool to generate an image from text or just sketching something on paper, Genie can bring it to life.

- Beyond just creating fun environments, Genie is also important for making smarter AI systems. Since it can generate endless types of new worlds, AI systems can learn to navigate and interact in many more scenarios than before. Genie has already shown that the actions these AI systems learn in its virtual worlds can help them in environments made by humans.

Mistral AI has released its most advanced model, Mistral Large, which reaches top-tier reasoning capabilities and handles complex multilingual reasoning tasks, including text understanding, transformation, and code generation. It is the world’s second-ranked model generally available through an API, next to GPT-4.

Key Highlights:

- The new model comes with a 32k context window, advanced instruction-following, and native function calling capability for complex interactions with internal code, APIs, or databases.

- The model is fluent in English, French, Spanish, German, and Italian. On multilingual benchmarks it outperforms competitors like Llama 2 70B, particularly in non-English languages.

- In direct comparisons, Mistral Large posts top-tier results across a range of benchmarks, including MMLU (81.2%) and HellaSwag. That places it next to GPT-4 and ahead of Claude 2 and Gemini Pro. It is also very competitive in math and coding tasks.

- Alongside Mistral Large, Mistral AI released Mistral Small, which targets applications requiring low latency and cost efficiency. Mistral Small outperforms the Mixtral 8x7B model, balancing performance with operational demands.

- Mistral Large is priced at $0.008 per 1k input tokens and $0.024 per 1k output tokens. GPT-4-32k, by comparison, costs $0.06 per 1k input tokens and $0.12 per 1k output tokens, so Mistral Large is significantly cheaper for the performance it delivers.

- Microsoft and Mistral AI have entered into a multi-year partnership that makes Azure the first cloud platform to offer Mistral Large. The model is also available through la Plateforme.
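To make the pricing gap concrete, here is a quick back-of-the-envelope sketch in Python. The per-1k-token prices are the ones quoted above; the workload (10,000 input tokens and 2,000 output tokens) and the `Pricing` helper are made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD price per 1,000 tokens, as quoted in this issue."""
    input_per_1k: float
    output_per_1k: float

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Total USD cost for one request of the given size."""
        return (input_tokens / 1000) * self.input_per_1k + (
            output_tokens / 1000
        ) * self.output_per_1k


MISTRAL_LARGE = Pricing(input_per_1k=0.008, output_per_1k=0.024)
GPT4_32K = Pricing(input_per_1k=0.06, output_per_1k=0.12)

# Hypothetical workload, chosen only for illustration.
INPUT_TOKENS = 10_000
OUTPUT_TOKENS = 2_000

mistral_cost = MISTRAL_LARGE.cost(INPUT_TOKENS, OUTPUT_TOKENS)
gpt4_cost = GPT4_32K.cost(INPUT_TOKENS, OUTPUT_TOKENS)

print(f"Mistral Large: ${mistral_cost:.3f}")
print(f"GPT-4-32k:     ${gpt4_cost:.3f}")
print(f"GPT-4-32k costs {gpt4_cost / mistral_cost:.1f}x as much for this workload")
```

For this made-up request, Mistral Large comes to about $0.13 versus $0.84 for GPT-4-32k. The exact ratio depends on your input/output mix: the quoted prices differ by 7.5x on input tokens and 5x on output tokens.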

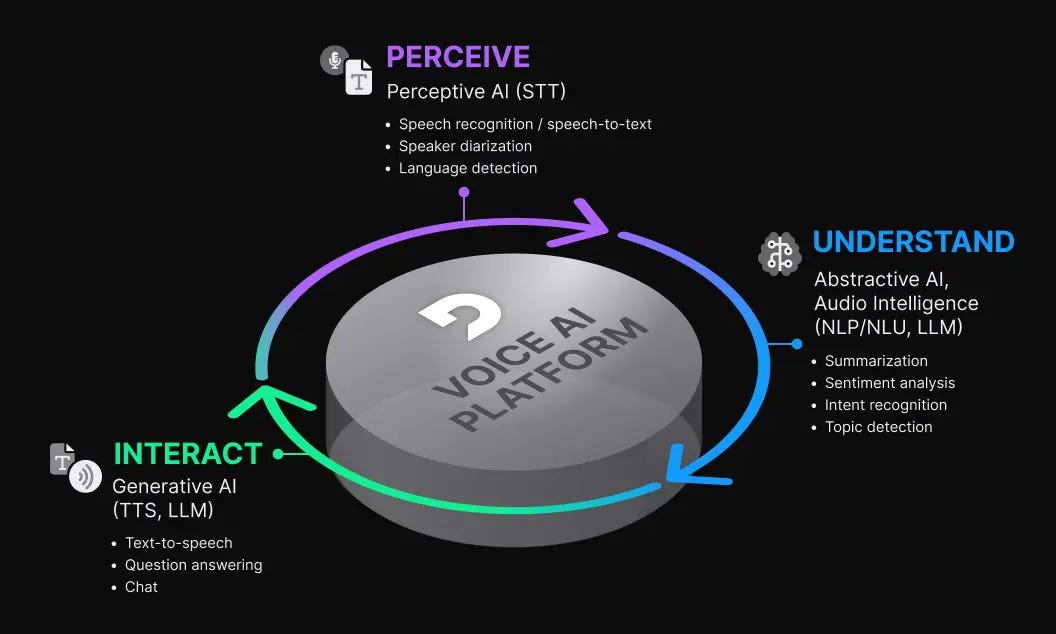

Deepgram’s Voice AI platform just received a noteworthy update: new audio intelligence models focused on speech analytics and understanding in conversational interactions. The platform now combines speech-to-text with advanced speech analytics and conversational AI technologies, covering the full spectrum of conversational interaction phases (Perceive, Understand, and Interact) through a single API.

Key Highlights:

- Deepgram’s new offerings use task-specific language models (TSLMs) tuned for high-throughput, low-latency applications. According to Deepgram, they are both faster and more accurate than larger, general-purpose LLMs from OpenAI and Google.

- The models are trained on more than 60K high-quality conversations for contextual accuracy and optimized for real-time use, so businesses can extract insights from speech and make better decisions.

- Customers can access the following audio intelligence capabilities through the same API used for transcription:

  - Summarization: Captures the essence of conversations, including contextual information.

  - Sentiment Analysis: Identifies sentiment with a confidence score, tracking sentiment shifts throughout the interaction.

  - Intent Recognition: Recognizes speaker intent with text segments, intent labels, and confidence scores.

  - Topic Detection: Identifies key topics with text segments, topics, and confidence scores for each segment.
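Here is a rough idea of what "same API as transcription" could look like in practice. This is only a sketch: the endpoint and the query parameter names (`summarize`, `sentiment`, `intents`, `topics`) are my assumptions and are not taken from this issue, so check Deepgram’s API reference before relying on them. The helper `build_listen_url` is hypothetical and only builds a URL; it does not call the API.

```python
from urllib.parse import urlencode

# ASSUMPTION: endpoint and parameter names are not confirmed by this issue;
# verify them against Deepgram's API reference.
BASE_URL = "https://api.deepgram.com/v1/listen"


def build_listen_url(
    summarize: bool = True,
    sentiment: bool = True,
    intents: bool = True,
    topics: bool = True,
) -> str:
    """Build a transcription URL with optional audio intelligence features.

    Hypothetical helper: each feature is switched on by adding a query
    parameter to the same endpoint that handles transcription.
    """
    params: dict[str, str] = {}
    if summarize:
        params["summarize"] = "v2"  # assumed value for the summarization model
    if sentiment:
        params["sentiment"] = "true"
    if intents:
        params["intents"] = "true"
    if topics:
        params["topics"] = "true"
    return f"{BASE_URL}?{urlencode(params)}" if params else BASE_URL


# Transcription only versus transcription plus all four features.
print(build_listen_url(False, False, False, False))
print(build_listen_url())
```

The point is the shape, not the exact names: one request to one endpoint, with each capability enabled by a flag rather than a separate service call.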

- Supermaven: A cutting-edge code completion tool with an unprecedented 300k context window, allowing it to understand and integrate a developer’s codebase comprehensively. It also boasts superior performance with a latency of only 250 ms, significantly faster than all its competitors, and has been designed to process the sequence of edits in a codebase, offering unique insights for tasks like refactoring.

- DryMerge: Automate workflows using plain English instructions. It integrates with various applications such as Slack, Gmail, Google Sheets, and Salesforce, simplifying task automation across tools by breaking down the requested automation into simple steps and responding to events as needed.

- Octomind: Give a URL, and with the power of AI, find bugs before your users do, streamlining the testing process and integration into CI/CD pipelines without requiring access to the codebase.

- Humaan.ai: Easily incorporate human-like intelligence into apps, offering capabilities in language, vision, speech, and sound through just a few lines of code. It provides access to state-of-the-art models and an intuitive API for rapid integration.

😍 Enjoying it so far? TWEET NOW to share with your friends!

- Every year, the big platforms have been getting worse and the alternatives have been getting better. Those lines cross eventually.

  This is the decade big tech ends. ~ Cold George

- It took a startup less than a year and just a hundred million dollars to train a model close to GPT-4 performance

  This will only get 10x cheaper and easier in the next year! ~ Bindu Reddy

That’s all for today!

See you tomorrow with more such AI-filled content. Don’t forget to subscribe and give your feedback below 👇

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![Screen recording (GIF)](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fd992d603-d05a-480a-85c2-937980db95cf_800x302.gif)