Today’s top AI Highlights:

-

Nvidia brings AI game characters to life

-

GitHub rolls out tool for code scanning and autofixing

-

Google faces hefty fine for using news publishers’ data to train Gemini

-

The largest public domain dataset for training LLMs

-

Create custom end-to-end websites with just a prompt

& so much more!

Read time: 3 mins

Nvidia took the spotlight at the Game Developers Conference with its digital human technology to create lifelike avatars for games. This toolkit is breathing life into video game characters, giving them voices, animations, and the ability to whip up dialogue on the fly. A highlight of the conference was a glimpse into Covert Protocol, a tech demo that puts Nvidia’s tools to the test. This demo offers a peek into a world where Non-Player Characters (NPCs) can tailor their responses to player interactions, creating a fresh experience each time the game is played.

Key Highlights:

-

The suite of tools includes Nvidia ACE for animating speech, Nvidia NeMo for processing and understanding language, and Nvidia RTX for rendering graphics with realistic lighting effects. Together, these tools create digital characters that can interact with users through AI-powered natural language that mimics real human behavior.

-

Nvidia and Inworld AI collaborated to develop Covert Protocol, a tech demo to showcase the capabilities of their tech. In the demo, you step into the shoes of a detective, navigating the game through conversations with NPCs that are anything but ordinary. Every playthrough brings a unique twist due to the real-time interactions that influence the unfolding narrative.

This technology allows for a high degree of player agency and narrative flexibility, making each playthrough unique.

-

That’s not just it. Nvidia’s technology is being used for multi-language games as well. Games like World of Jade Dynasty and Unawake are incorporating Nvidia’s Audio2Face technology to generate realistic facial animations and lip-syncing in both Chinese and English. Audio2Face automates matching the characters’ facial movements to spoken dialogue, reducing the manual animation work.

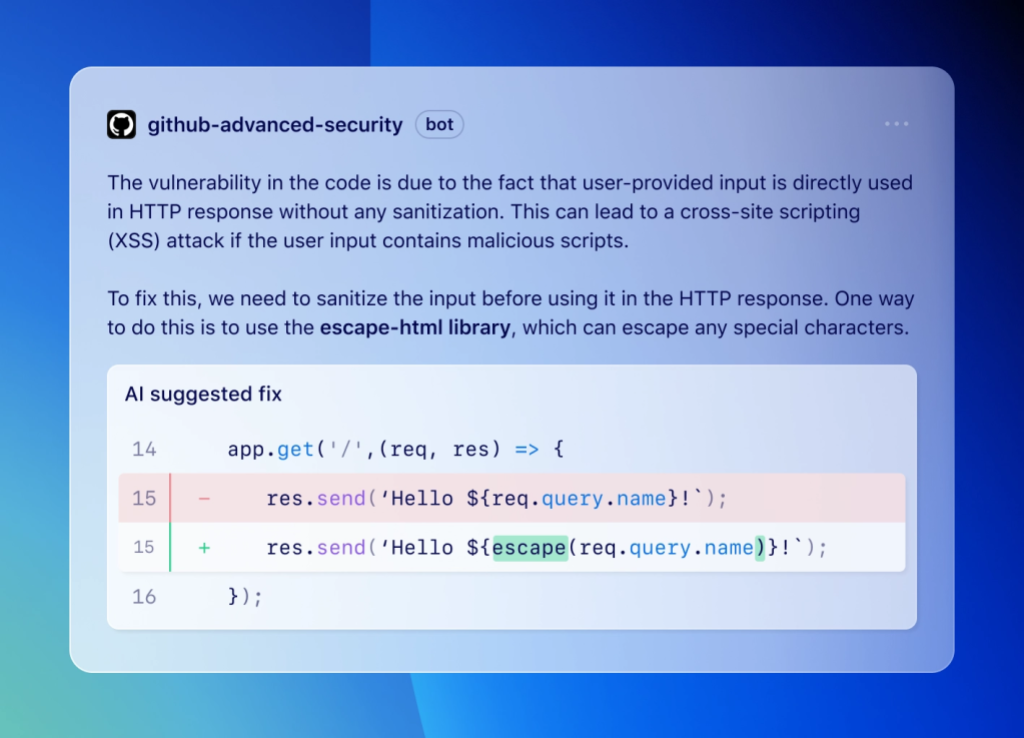

GitHub is rolling out access to the new code-scanning autofix feature in public beta for all GitHub Advanced Security customers. Powered by GitHub’s Copilot and CodeQL, the feature covers more than 90% of alert types in JavaScript, TypeScript, Java, and Python. It is capable of remediating more than two-thirds of the vulnerabilities found with minimal or no editing required by developers.

Key Highlights:

-

GitHub CodeQL looks for patterns that match known vulnerabilities in the codebase. It doesn’t rely on developers to identify these vulnerabilities manually. Instead, it scans the code automatically as part of its analysis process.

-

For each vulnerability found, code scanning autofix leverages Copilot to not only suggest code for remediation but also provide an explanation in natural language. This helps developers understand the context and rationale behind each suggested fix, making it easier to decide whether to accept, edit, or dismiss the suggestion.

-

These suggestions may include changes to multiple files and recommend additions to project dependencies.

-

The tool has helped teams remediate vulnerabilities 7x faster than traditional security tools, enhancing the overall developer experience.

Big tech companies are struggling with copyright lawsuits left, right and center, from artists and news publishers for illegally using their work to train generative AI models. The latest (but not new) victim is Googlesnagged again by a hefty fine €250 million (around $270 million) by France’s competition authority for unauthorized use of copyrighted news snippets to train Gemini.

This situation has unfolded against the backdrop of a broader debate over digital copyright protections in Europe, initiated by the EU’s digital copyright reform in 2019. Since then, Google’s journey through this legal landscape has been tumultuous with attempts to block Google Ads in France, a heavy penalty in 2021, and repeated negotiations with French news publishers.

It’s very clear how the companies are scared to come out on the data they have used for training their models. Remember Mira Murati’s, OpenAI’s CTO, interview with Wall Street Journal last week?

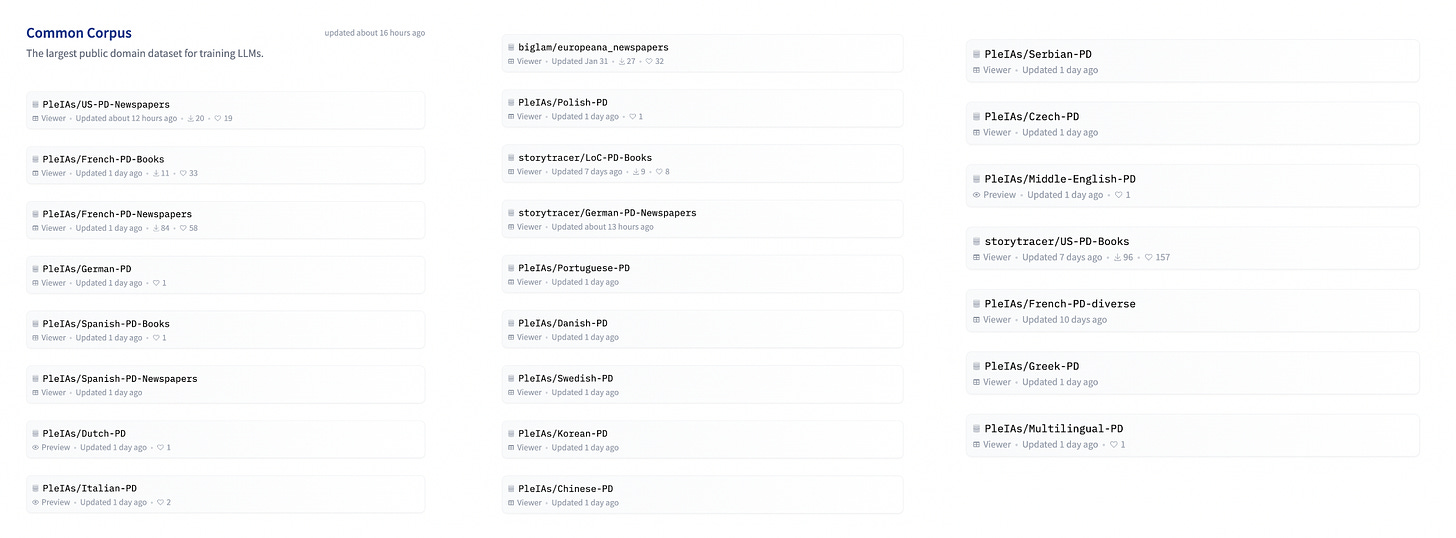

So, is it not possible to train high-quality LLMs without tipping copyright laws? It seems that’s not exactly the case. Common Body has been released as the largest public domain dataset for training LLMs, aiming to exactly counter this claim. The dataset is an international effort led by Pleiasa French start-up that focuses on training LLMs, along with organizations advocating for open science in AI such as HuggingFace, Occiglot, Eleuther, and Nomic AI. The initiative is also backed by the French Ministry of Culture and the Direction.

Key Highlights:

-

Common Corpus encompasses 500 billion words, making it an unparalleled resource in terms of scale and scope. This dataset is not only the largest of its kind in English, with 180 billion words, but also offers extensive support for French, Dutch, Spanish, German, and Italian, along with a significant representation of low-resource languages.

-

Unlike many other sources that rely on web archives, Common Corpus includes millions of books and digitized newspapers, such as the 21 million items from Chronicling America, and extensive monograph datasets collected by digital historian Sebastian Majstorovic.

-

While already substantial, the corpus is a work in progress, it will continually augment the collection with additional datasets from open sources.

-

gpt-prompt-engineer: Optimizes the process of prompt engineering by automatically generating, testing, and ranking various prompts to discover the most effective ones for a given task. It uses GPT-4, GPT-3.5-Turbo, and Claude 3 Opus, with capabilities like auto-generating test cases and handling multiple input variables.

-

Dora AI: Create end-to-end custom websites with just simple text prompt. Start from a single prompt, and generate the site’s subject, style, images, and copy within a responsive layout. It does not relying on predefined templates or layouts, it truly provides custom site designs tailored to individual prompts.

-

Voice by Character AI: A suite of features to make your Characters speak to you in 1:1 chats, enhancing interaction through a multimodal interface. You can create your own Voice by uploading an audio sample or even choose from the Voice library. It is now available for free to all users.

-

Sumatra AI: The only experimentation solution specifically built for Framer. It allows A/B testing without disrupting website layouts or designs. It is designed to be fast, scalable, and compatible to easily create and share test previews, and automatically applies best practices for analyzing experimental results.

😍 Enjoying so far, TWEET NOW to share with your friends!

-

Fine, if OAI won’t be releasing GPT-5 for “months,” then how about they open-source 3.5?

I mean, Claude Haiku already outperforms 3.5 and is cheaper anyways. 🤷♀️ ~

Bindu Reddy -

Google is delighted that Mustafa Suleyman is now Microsoft’s problem. ~ Pedro Domingos

That’s all for today! See you tomorrow with more such AI-filled content.

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![ssstwitter.com_1710943886759.mp4 [optimize output image] ssstwitter.com_1710943886759.mp4 [optimize output image]](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Fe96fd490-2a96-4bd7-a213-fa6a331e6bad_600x338.gif)