Today’s top AI Highlights:

- xAI releases Grok-1.5 beating Mistral Large and Claude 3 Sonnet
- AI21 Labs releases a new model combining Transformer and Mamba
- Robot training inspired by next token prediction in language models
- Lip-sync API to match the lip movement in any video to audio in any language
- Transform your smartphone into a desktop robot

& so much more!

Read time: 3 mins

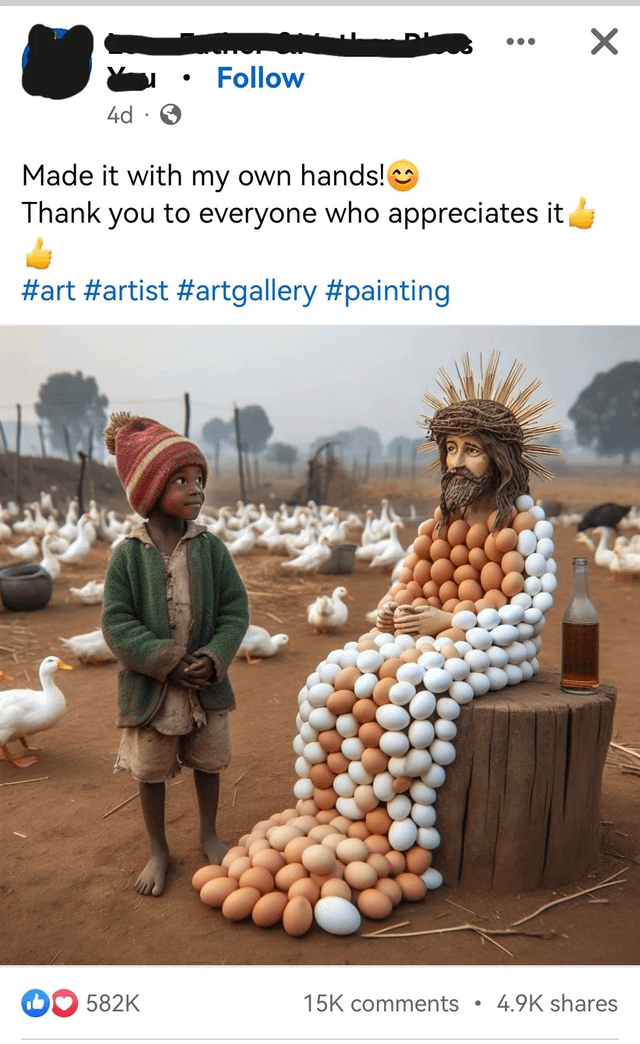

Transform your code exploration with efficient, relevant snippet searches in less than an hour using Qdrant’s vector similarity search. Let’s look at how you can build a semantic search for your codebase in just a few simple steps:

- Data Preparation: Simplifying code into manageable segments for efficient processing.
- Parsing the Codebase: Employing Language Server Protocol tools for versatile parsing across programming languages.
- Converting Code to Natural Language: Making code snippets compatible with general-purpose models.
- Building the Ingestion Pipeline: Insights into data vectorization and semantic search setup.
- Querying the Codebase: Utilizing models to pinpoint relevant code snippets, showcasing Qdrant’s adaptability in semantic searches.
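
To make the steps above concrete, here is a minimal, standard-library-only sketch of the idea. It is not Qdrant's implementation: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database, and the snippet records and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def humanize(name: str) -> str:
    """Split camelCase and snake_case identifiers into lowercase words."""
    spaced = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", " ", name).replace("_", " ")
    return spaced.lower()


def textify(snippet: dict) -> str:
    """Turn a parsed code record into a rough natural-language sentence."""
    return (
        f"{snippet['kind']} {humanize(snippet['name'])} "
        f"defined in module {humanize(snippet['module'])}"
    )


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real pipeline would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, index: list, top_k: int = 1) -> list:
    """Return the names of the top_k snippets most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [name for name, _ in ranked[:top_k]]


# Hypothetical records, as a parser might emit them.
snippets = [
    {"kind": "function", "name": "uploadCollectionSnapshot", "module": "storage_utils"},
    {"kind": "function", "name": "count_points", "module": "collection_stats"},
    {"kind": "struct", "name": "VectorIndexConfig", "module": "config"},
]

# Ingestion: textify each record, embed it, and keep (name, vector) pairs.
index = [(s["name"], embed(textify(s))) for s in snippets]
```

Calling `search("how do I count points in a collection", index)` ranks `count_points` first, because the textified description shares the most words with the query. Swapping `embed` for a neural embedding model and the list for a vector store is what turns this keyword overlap into actual semantic search.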

Two weeks after open-sourcing Grok-1, xAI has released the next iteration, Grok-1.5. It shows a significant improvement over its predecessor in language understanding, math, and coding. It also extends the context window to 128K tokens, 16x more than the previous model. Grok-1.5 will soon be available to all X Premium users.

Key Highlights:

- Performance: Achieving an 81.3% score on the MMLU benchmark, Grok-1.5 outperforms competitors such as Mistral Large, Claude 2, and Claude 3 Sonnet. Its enhanced capabilities in math and coding tasks also allow it to stand toe-to-toe with leading models like GPT-4, Gemini Pro, and Claude 3 Opus.
- Long Context: It can handle longer and more complex prompts, while still maintaining its instruction-following capability as its context window expands. In the Needle In A Haystack evaluation, Grok-1.5 demonstrated powerful retrieval capabilities for embedded text within contexts of up to 128K tokens in length.
- Infrastructure: The infrastructure supporting Grok-1.5 leverages a custom distributed training framework that integrates JAX, Rust, and Kubernetes. This ensures streamlined prototyping and the training of new models at scale, addressing major challenges such as reliability and uptime in training LLMs.
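
For readers unfamiliar with the Needle In A Haystack evaluation mentioned above, here is a rough sketch of how such a test can be set up. This is not xAI's harness: `ask_model` is a hypothetical callable you would replace with a real model call, and the filler text, passphrase, and function names are made up for illustration.

```python
def build_haystack(needle: str, filler: str, total_sentences: int, depth: float) -> str:
    """Insert the needle at a fractional depth (0.0 = start, 1.0 = end) of filler text."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    sentences = [filler] * total_sentences
    sentences.insert(round(depth * total_sentences), needle)
    return " ".join(sentences)


def retrieved(answer: str, secret: str) -> bool:
    """Count the retrieval as successful if the answer contains the secret."""
    return secret.lower() in answer.lower()


def run_sweep(ask_model, secret: str, depths: list, total_sentences: int = 50) -> dict:
    """Ask the same question with the needle placed at each depth; record hit or miss."""
    needle = f"The secret passphrase is {secret}."
    question = "What is the secret passphrase?"
    results = {}
    for depth in depths:
        context = build_haystack(needle, "The sky was grey that day.", total_sentences, depth)
        results[depth] = retrieved(ask_model(context, question), secret)
    return results


def truncating_model(context: str, question: str) -> str:
    """Stand-in 'model' that only sees the first 200 characters of its context."""
    return context[:200]
```

Running `run_sweep(truncating_model, "heliotrope", [0.0, 1.0])` shows the failure mode the evaluation is designed to catch: the stand-in finds the needle at the start of the context but misses it at the end. A real sweep would also vary the total context length up to the model's limit.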

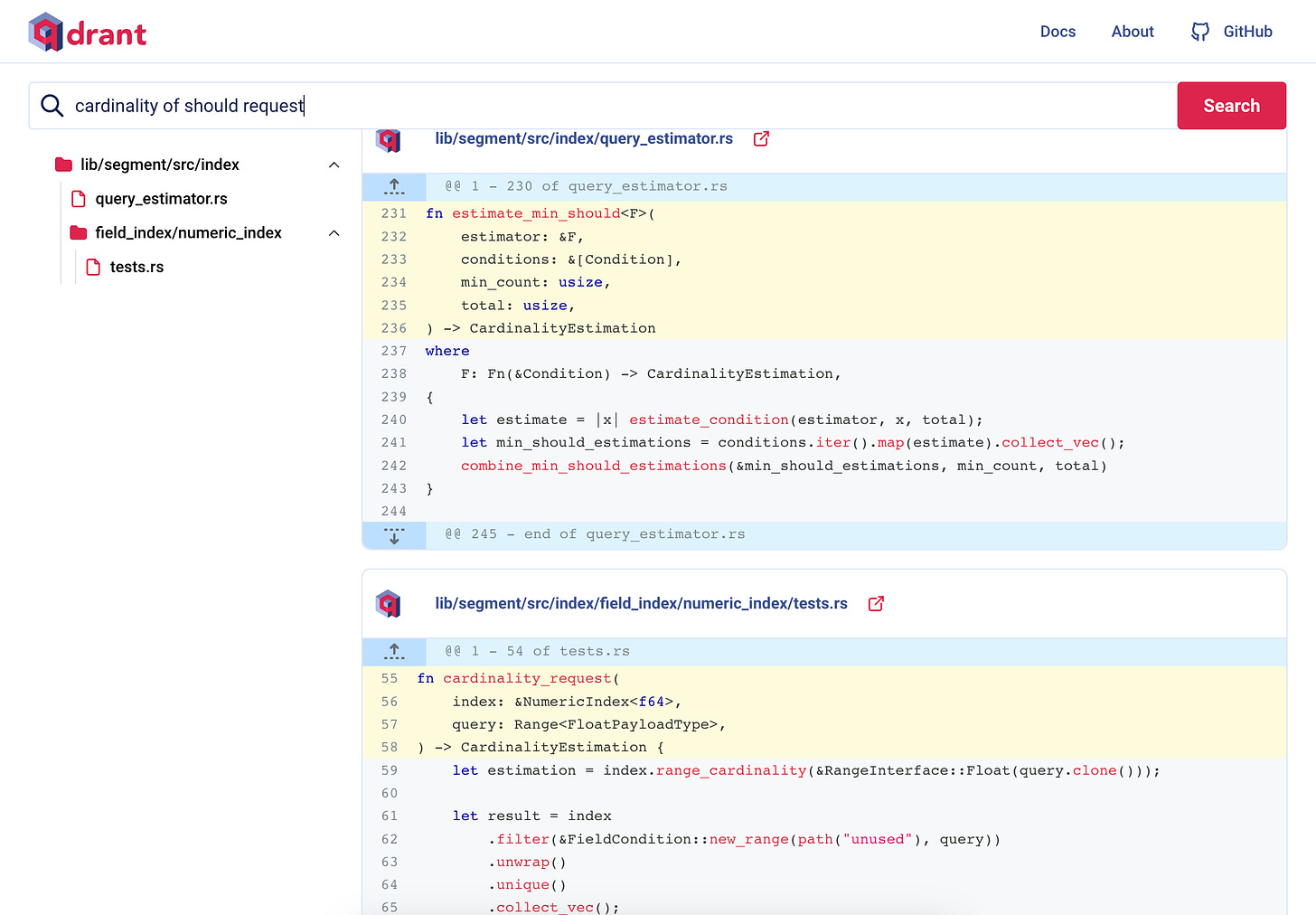

AI21 Labs has released Jamba, the world’s first production-grade Mamba-based model. It combines Mamba, a structured state space model (SSM), with the traditional Transformer architecture to offset the limitations each has on its own. With a 256K context window and an architecture designed for efficiency, Jamba addresses some of the pressing challenges in AI model development and opens up new avenues for application and research.

Key Highlights:

- Limitations of SSM and Transformer Models: Transformer models, while powerful, suffer from a large memory footprint and slow inference as context lengthens, making them less practical for long-context tasks. SSMs such as Mamba address these issues but struggle to maintain output quality over long contexts because they cannot attend to the entire context.
- Architecture: Jamba has a hybrid structure combining Transformer, Mamba, and mixture-of-experts (MoE) layers. Only 12B of its 52B parameters are active at inference, making those active parameters more efficient than those of a Transformer-only model of equivalent size.
- Cost: It features a 256K context window, surpassing the capabilities of many existing models. It also fits up to 140K tokens of context on a single GPU, making long-context models more accessible.
- Efficiency: Jamba delivers 3x throughput on long contexts compared to Mixtral 8x7B, while delivering competitive performance on benchmarks.
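
To see why only a fraction of a mixture-of-experts model's parameters are active for any given token, here is a toy top-k routing sketch. It is an illustration of the general MoE idea, not Jamba's actual layer: the experts are plain Python functions, the router logits are hand-picked, and `k=2` is an assumption made for the example.

```python
import math


def softmax(logits: list) -> list:
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits: list, k: int) -> list:
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in top)
    return [(i, probs[i] / kept) for i in top]


def moe_layer(x: float, experts: list, router_logits: list, k: int = 2) -> float:
    """Run only the selected experts and mix their outputs by router weight."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(router_logits, k))


def active_fraction(active_params: float, total_params: float) -> float:
    """Share of parameters actually used per token."""
    return active_params / total_params


# Four toy "experts"; a real model would use feed-forward networks here.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
output = moe_layer(3.0, experts, router_logits=[0.0, 2.0, 1.0, -1.0])
```

With these logits, only experts 1 and 2 run and the other two are skipped entirely. Using the figures quoted above, `active_fraction(12e9, 52e9)` comes to roughly 0.23, so under a quarter of Jamba's parameters do work at inference.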

Transformer models are at the heart of many applications that involve language processing, showing an amazing ability to understand and generate text. Building on this success, researchers at UC Berkeley have applied the concept of predicting the next piece of information to teach humanoid robots how to move, much like how transformers work with words. This method treats robot movements as a series of predictions, similar to how transformer models predict the next word in a sentence, offering a new way to train robots to handle real-world tasks.

Key Highlights:

- Robot Training Through Prediction: Using a causal transformer model, the approach predicts a robot’s next move based on past actions. This method adapts to the varied types of data in robotics, like sensory inputs and motor actions, by predicting them in sequence. It even works with incomplete data, for example, videos that don’t show every action, by filling in the gaps as if they were there.
- Diverse Dataset: The training data for the model includes a wide range of sources: simulations from neural network policies, commands from model-based controllers, human motion capture data, and videos of people from YouTube. This diversity helps the robot learn to move in more ways and in different settings.
- Testing in Real World: Tested on the streets of San Francisco, the model allowed a humanoid robot to walk on various surfaces without having been specifically trained for those conditions beforehand. Even with only 27 hours of walking data to learn from, the robot could generalize to commands not seen during training, like walking backward.
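
The way next-token prediction can cope with incomplete data is easier to see in code. Below is a simplified sketch, not the researchers' implementation: observations and actions are single floats, `MASK` is a stand-in for a learned mask token, and all names are hypothetical. Sensor readings and motor commands are interleaved into one sequence, missing actions are masked, and the loss skips masked targets.

```python
MASK = None  # stand-in for a learned mask token marking a missing modality


def interleave(observations: list, actions: list = None) -> list:
    """Build the sequence o1, a1, o2, a2, ...; action-free data gets MASK actions."""
    if actions is None:
        actions = [MASK] * len(observations)
    if len(actions) != len(observations):
        raise ValueError("need one action slot per observation")
    sequence = []
    for obs, act in zip(observations, actions):
        sequence.append(("obs", obs))
        sequence.append(("act", act))
    return sequence


def next_token_pairs(sequence: list) -> list:
    """Causal training pairs: predict token t from tokens before t, skipping masked targets."""
    pairs = []
    for t in range(1, len(sequence)):
        _, value = sequence[t]
        if value is MASK:
            continue  # nothing to supervise when the target is missing
        pairs.append((sequence[:t], sequence[t]))
    return pairs


def masked_mse(predictions: list, targets: list) -> float:
    """Mean squared error over the targets that are actually present."""
    errors = [(p - t) ** 2 for p, t in zip(predictions, targets) if t is not MASK]
    return sum(errors) / len(errors) if errors else 0.0


robot_log = interleave([0.1, 0.2], [1.0, 2.0])  # full trajectory with actions
video_only = interleave([0.1, 0.2])             # e.g. video without motor commands
```

Here `next_token_pairs(robot_log)` yields three training pairs, while `next_token_pairs(video_only)` yields only one, since the masked action slots contribute no loss. That is how one model can learn from both robot logs and action-free video in the same training run.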

😍 Enjoying it so far? Share with your friends!

👀 This weekend in “AI Deep Dive”

Want to know how AI wearable devices are becoming a part of our daily routine?

Dive deep with us to take a closer look at the latest AI wearables, their features, their importance in personalizing our experience, and what the future holds for us with this developing technology!

- Structure Reference in Adobe Firefly: Use an existing image as a structural reference template and generate multiple image variations with the same layout. You can also combine Structure Reference with Adobe’s Style Reference to refer to both the structure and style of an image and quickly bring your ideas to life.
- IvyCheck: Offers an API that classifies user and AI-generated text, and identifies sensitive data to handle it appropriately. It provides real-time checks to protect against risks such as prompt injection, data leaks, and inaccurate chatbot responses, ensuring AI applications are safer and more reliable.
- Tanning Robot: Transform your smartphone into a desktop robot! It uses your smartphone to see, hear and talk. Engage in natural conversations, enjoy custom actions and reactions for a range of emotions, dive into motion-sensing games, use it for object detection, and even capture your precious moments in no time with its auto-snap.
- Sync.: Animate people to speak any language in any video. Sync.’s AI lip-sync model can match audio in any language to a person’s lips in any video, keeping the expressions and movements consistent.

- The realization that the ideas spontaneously crossing your mind at night could be turned into beautiful visuals, melodies, and videos with just a few words typed into a prompt box can really complicate the process of falling asleep ~ Nick St. Pierre
- There’s never been a better time to build in AI:
  - Dirt cheap, smart models (Haiku is nearly free)
  - 1m context (Gemini)
  - Faster than ever with ~0 latency (Groq)
  - Intelligence increasing exponentially (Opus, gpt4)

  We’re only 3 months in. Imagine the next 9 months ~ Sully

That’s all for today! See you tomorrow with more such AI-filled content.

⚡️ Follow me on Twitter @Saboo_Shubham for lightning-fast AI updates and never miss what’s trending!

PS: I curate this AI newsletter every day for FREE, and your support is what keeps me going. If you find value in what you read, share it with your friends by clicking the share button below!

![Screen Recording 2024-03-28 at 5.29.35 PM.mov [video-to-gif output image]](https://substackcdn.com/image/fetch/w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Ff1436660-2690-4b7a-90dd-deb6539b5bc4_800x482.gif)